Why ChatGPT will not replace conversational platforms and chatbots for business

ChatGPT gained popularity quickly, surpassing one million users less than a week after its launch. People use it to find answers to questions, write texts, and complete coursework and homework. ChatGPT is built on a large language model (LLM) and is designed to respond to virtually any prompt. An LLM is a neural network trained on large volumes of historical text and used for natural language processing tasks such as text generation, translation, paraphrasing, summarization, and classification.

Goal-oriented chatbots built on conversational platforms solve specific business tasks such as answering customer questions, collecting feedback, and assisting with recruitment. Conversational AI platforms are the tools used to create and deploy these smart chatbots and virtual assistants, which interact with users in natural language. Although ChatGPT and conversational AI platforms are somewhat similar, they serve different purposes: they are complementary rather than interchangeable.

These platforms bundle tools that give chatbots functionality useful for business: a module for designing and testing virtual assistants, analytics to track metrics and evaluate the bot's performance, and a module for setting up integrations with corporate systems. While ChatGPT can generate responses for chatbots and virtual assistants, it lacks the features needed to fully replace traditional platforms for creating AI bots.

One key limitation of ChatGPT as a smart virtual assistant is the lack of integrations for transactional tasks. ChatGPT is suitable for answering simple questions, but customer-facing business chatbots need to handle more complex, business-specific inquiries that require integration with internal corporate systems. For example, virtual assistants handle tasks such as rescheduling a doctor's appointment, checking an order's status, changing a delivery date, collecting customer feedback and entering it into a CRM, and checking a vacation-day balance.

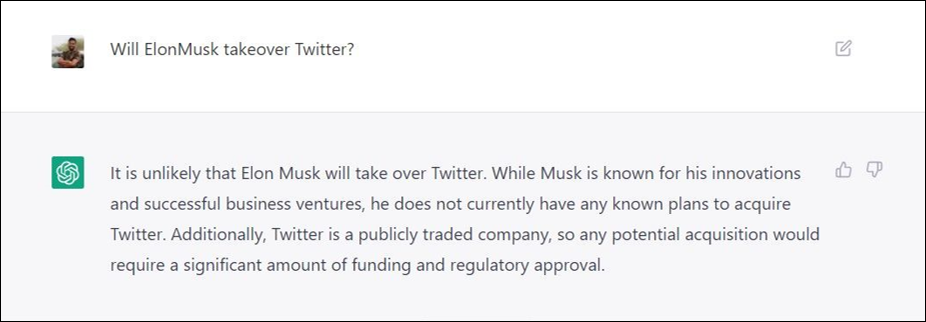
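
The transactional tasks described above can be pictured as a small routing layer: the bot recognizes an intent, then a handler queries an internal system and fills in a fixed response. The sketch below is only an illustration under stated assumptions. The `ORDERS` dictionary stands in for a corporate order system, and `Request`, `HANDLERS`, and `handle` are hypothetical names, not part of any specific platform.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical stand-in for an internal order system. A real bot would
# call a corporate API or database here instead of an in-memory dict.
ORDERS: Dict[str, str] = {"A-1001": "shipped", "A-1002": "processing"}


@dataclass
class Request:
    intent: str             # intent label produced by the NLU step
    slots: Dict[str, str]   # extracted parameters, e.g. an order number


def order_status(slots: Dict[str, str]) -> str:
    """Look up an order and fill a fixed, pre-approved response template."""
    order_id = slots.get("order_id", "")
    status = ORDERS.get(order_id)
    if status is None:
        return f"Sorry, I could not find order {order_id}."
    return f"Order {order_id} is currently {status}."


# Each supported intent maps to exactly one handler.
HANDLERS: Dict[str, Callable[[Dict[str, str]], str]] = {
    "order_status": order_status,
}


def handle(request: Request) -> str:
    """Route a recognized intent to its handler, with a safe fallback."""
    handler = HANDLERS.get(request.intent)
    if handler is None:
        return "Sorry, I cannot help with that yet."
    return handler(request.slots)
```

Because the reply text is a fixed template filled with data from the backing system, the wording stays under the business's control, which is the property the article attributes to goal-oriented bots.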

LLMs such as ChatGPT generate responses that may be appropriate in certain contexts, but there is no guarantee that they are factually correct. They are machine learning models built to produce text from large data sets, and they have no access to external knowledge or understanding of the real world. As a result, they may generate responses based on information that is incorrect, outdated, or inappropriate for the context.

OpenAI acknowledges that ChatGPT "sometimes writes plausible-sounding but incorrect or nonsensical answers." This can lead to customer dissatisfaction and legal issues for businesses. Traditional conversational platforms, by contrast, let teams write precise, legally correct bot responses with the help of copywriters and serve up-to-date information from corporate systems.

ChatGPT is also not suitable for on-premise deployment. Hosting an LLM such as GPT-3 in a closed environment would be difficult because of the model's size and complexity: it requires powerful servers with high-speed network connections and sufficient memory. There are also issues with confidentiality, security, and cross-border data transfer. Virtual assistants handle a significant amount of confidential information, and many conversational AI platforms prioritize security and provide the tools to ensure it. For ChatGPT, legislation regarding personal data is not yet well developed.

How ChatGPT can expand the capabilities of conversational platforms

By integrating with LLMs, conversational platforms, including the chatme.ai platform, expand their functionality and accelerate bot development. Below are examples of cases in which ChatGPT complements familiar conversational platforms:

- Clustering dialogues: Quickly cluster historical dialogues and identify the most frequent themes (intents) that virtual assistants should be able to handle.

- Generating training and testing data: Large language models can automatically generate large volumes of data for testing NLU bots before they launch and start interacting with real users.

- Expanding the list of training phrases: Generate new training phrases to improve recognition quality.

- Rich small talk and human-like dialogues: Integrating goal-oriented bots with ChatGPT makes their dialogues more human-like and teaches the bots to maintain small talk. It can also generate varied formulations of the same bot response, making communication more "lively."

- Translating the bot into another language: Automatically translate training and testing samples and bot response formulations into other languages.
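
The first use case in the list above, clustering dialogues, can be sketched in a few lines. The article does not say how the clustering is done, so what follows is a deliberately simple stand-in: a greedy grouping based on word overlap (Jaccard similarity) rather than an LLM. In practice the `similarity` function would more likely compare embeddings produced by a language model. All names here (`STOPWORDS`, `tokens`, `similarity`, `cluster`, `SAMPLE`) and the threshold value are assumptions made for illustration.

```python
import re
from typing import List, Optional, Set

# Small illustrative stopword list; a real pipeline would use a fuller one.
STOPWORDS = {"i", "my", "the", "a", "to", "is", "of", "can", "you",
             "what", "where", "want"}


def tokens(text: str) -> Set[str]:
    """Lowercase the text and keep its non-stopword words as a set."""
    return {w for w in re.findall(r"[a-z]+", text.lower())
            if w not in STOPWORDS}


def similarity(a: Set[str], b: Set[str]) -> float:
    """Jaccard similarity: shared words divided by all distinct words."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def cluster(utterances: List[str], threshold: float = 0.25) -> List[List[str]]:
    """Greedily assign each utterance to the most similar existing cluster,
    or start a new cluster if none reaches the threshold."""
    clusters: List[List[str]] = []
    vocab: List[Set[str]] = []  # union of tokens seen in each cluster
    for text in utterances:
        t = tokens(text)
        best: Optional[int] = None
        best_score = threshold
        for i, v in enumerate(vocab):
            score = similarity(t, v)
            if score >= best_score:
                best, best_score = i, score
        if best is None:
            clusters.append([text])
            vocab.append(set(t))
        else:
            clusters[best].append(text)
            vocab[best] |= t
    # Largest clusters first: these are the most frequent themes.
    return sorted(clusters, key=len, reverse=True)


SAMPLE = [
    "Where is my order?",
    "I want to track my order",
    "Can you reschedule my delivery?",
    "Reschedule delivery to Friday",
    "What is the status of my order",
]
```

Calling `cluster(SAMPLE)` groups the order-related questions separately from the delivery-related ones. The largest groups are then candidates for the intents a bot should support first.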

Thus, ChatGPT, like other LLMs, does not by itself provide functions and capabilities sufficient for enterprise-level users. Conversational AI platforms make it possible to create, train, test, and deploy chatbots across different communication channels, set up integrations with corporate systems, retrain bots, and improve recognition quality. Such tools are more effective for solving specific tasks in support of customers and employees.