Topic modeling is gaining momentum in 2025. The 67.8 billion dollars NLP market this year, up from 47.8 billion dollars in 2024, shows rapid growth.

Medical literature now doubles every 73 days, making manual review impossible. Topic modeling turns this overload into usable insight.

A study of 1 968 PubMed abstracts on opioid risks in women found BERTopic produced clearer, more coherent clusters than LDA. These gains show how topic modeling supports faster, evidence-based decisions in healthcare.

Why Topic Modeling Matters More Than Ever

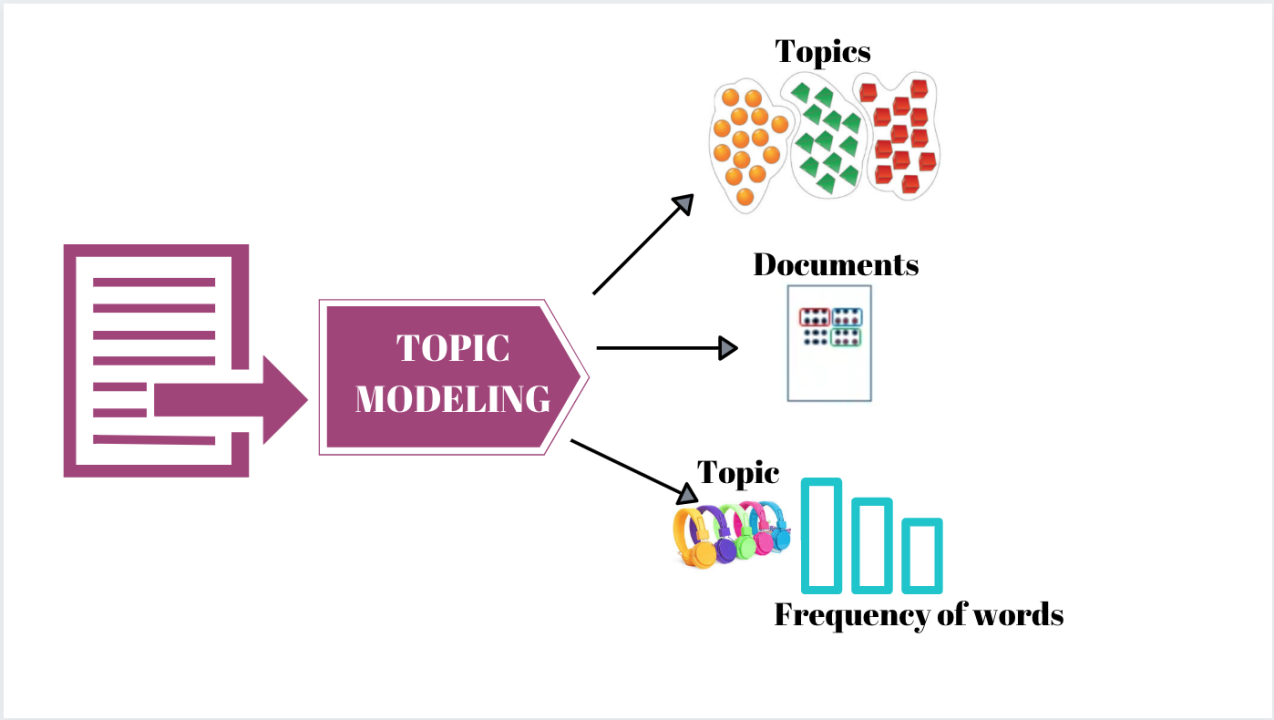

Topic modeling identifies hidden themes in collections of documents. Unlike manual review, it scales to millions of records. That matters when health systems must monitor signals in real time.

Hospitals use Python-based topic modeling to analyze physician notes for early signs of adverse events. Insurers apply it to detect fraud in claims. Governments scan public reports to monitor sentiment about vaccination campaigns. Pharmaceutical companies mine trial reports and social media posts for emerging safety concerns.

Python offers a full ecosystem of tools. NLTK helps with tokenization and stop-word removal. scikit-learn provides clustering and dimensionality reduction. BERTopic integrates transformer embeddings for semantic depth. Together these tools transform text into organized insights.

The value is measured not only in saved labor but also in improved outcomes. Detecting trends quickly can save lives, reduce costs, and improve trust. That is why topic modeling is no longer a research curiosity but a central technique in data-driven healthcare.

BERT and Context Matters

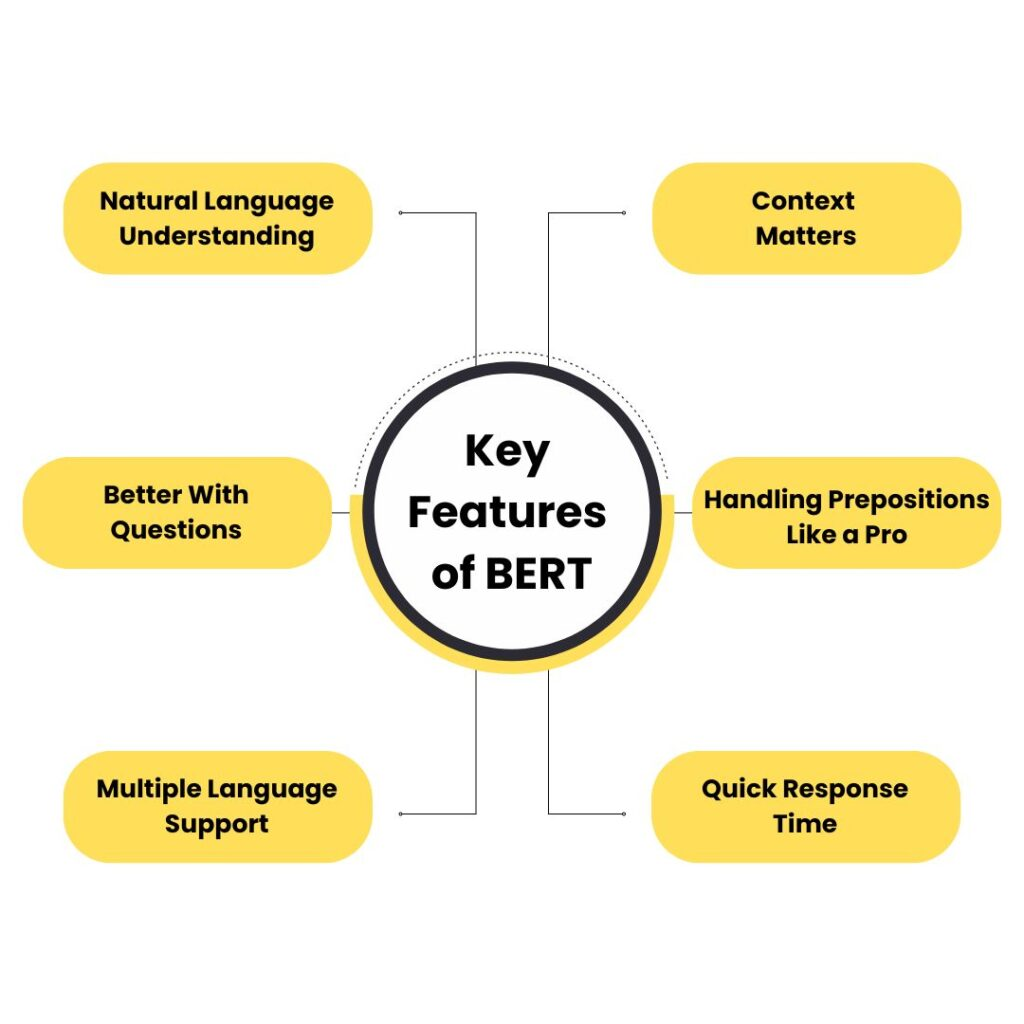

BERT remains a cornerstone of NLP in 2025. BERT processes text bidirectionally, capturing meaning from both left and right context. This allows precise interpretation of ambiguous terms. For example, the word “stroke” may mean a medical emergency or a physical action. BERT understands context and distinguishes between them.

A study in Frontiers in Artificial Intelligence showed that BERT-based models improved classification of clinical text by more than 20 % compared with traditional methods. These gains are not only statistical. They improve patient triage and risk assessment in emergency settings.

Variants such as BioBERT and ClinicalBERT specialize in medical language. They are trained on PubMed articles and electronic health records. These domain-specific embeddings increase accuracy for medical topic modeling, enabling models to understand abbreviations, medical terminology, and nuanced phrasing.

Even in 2025, BERT strikes a balance between interpretability and performance. Larger language models exist, but BERT remains trusted for transparent, reproducible, and resource-efficient tasks.

BERTopic Offers Clarity in Complex Corpora

BERTopic is one of the most popular Python libraries for advanced topic modeling. It combines transformer embeddings with class-based TF-IDF to create coherent and interpretable topics.

The opioid study showed how BERTopic outperformed LDA by producing clusters that were semantically richer. The model automatically detected outliers, labeled as topic -1, which often revealed rare but interesting findings. Outliers sometimes indicated early warnings or niche discussions.

In another evaluation of healthcare app reviews, BERTopic produced more meaningful clusters than LDA. It generated topics that aligned with user concerns about privacy, usability, and clinical value. Analysts could interpret them without spending days in manual annotation.

Key features of BERTopic include:

- Ability to use embeddings from BERT, RoBERTa, or DistilBERT.

- Dynamic topic modeling that tracks how themes evolve over time.

- Visualization tools that present clusters in 2D or 3D space.

- Integration with tools like ChatGPT for auto-labeling.

These features make BERTopic attractive not only for academic work but also for industry-scale applications.

Setting Up Python Tools Thoughtfully

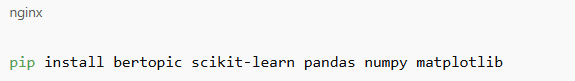

To work with BERTopic effectively you need a reliable Python environment. Install essential libraries:

- BERTopic

- scikit-learn

- NumPy

- pandas

- Matplotlib

Command for installation:

Beyond installation, careful environment management is vital. Use virtual environments to avoid version conflicts. GPU support may be required for large-scale embeddings.

Preprocessing text matters as much as modeling. Remove HTML artifacts, normalize spacing, expand medical abbreviations, and correct typos. Clean text leads to stronger embeddings. With biomedical data, consider specialized tokenizers that recognize chemical names or gene symbols.

Choice of embeddings depends on resources. If you have GPU clusters, use BioBERT or MPNet for higher semantic resolution. If resources are limited, DistilBERT or even GloVe works with careful preprocessing. The tradeoff is clear: heavier models yield better nuance but require more compute.

Working with Domain Specific Data

For experiments, the 20-newsgroups dataset is widely used. It contains 18 846 documents covering varied topics. But in healthcare, domain-specific data is essential.

Hospitals analyze anonymized discharge summaries. Pharma companies study clinical trial reports. Researchers explore thousands of abstracts from PubMed. Each dataset brings its own challenges.

Exploratory analysis is the first step. Inspect a sample of documents. Identify irregular formats, abbreviations, and domain jargon. Standardize common terms like “MI” for myocardial infarction or “DM” for diabetes mellitus. Apply stop-word removal with NLTK.

A case study of 100 000 health app reviews showed that proper preprocessing improved topic stability. Without cleaning, clusters were noisy and hard to interpret. With cleaning, themes became coherent and actionable.

The lesson is clear. No model compensates for poor preprocessing. Attention to detail before modeling defines the success of the project.

Visualization Enhances Interpretation

Extracted topics must be visualized to be understood. UMAP reduces embeddings to two or three dimensions. Matplotlib or Plotly renders scatter plots with colors for topics.

Visualization provides intuitive understanding. Analysts can see which clusters overlap, how many documents belong to each theme, and where outliers lie. For example, vaccine safety and dosage clusters may overlap, reflecting intertwined discussions.

Time-based visualization adds another dimension. By plotting topic prevalence month by month, analysts can detect emerging issues early. In COVID-19 research, visualizations revealed how themes shifted from vaccines to long COVID symptoms over time.

Visual tools do not replace metrics. They complement coherence scores and diversity measures. Together they create a robust understanding of results.

Best Practices and Deep Challenges

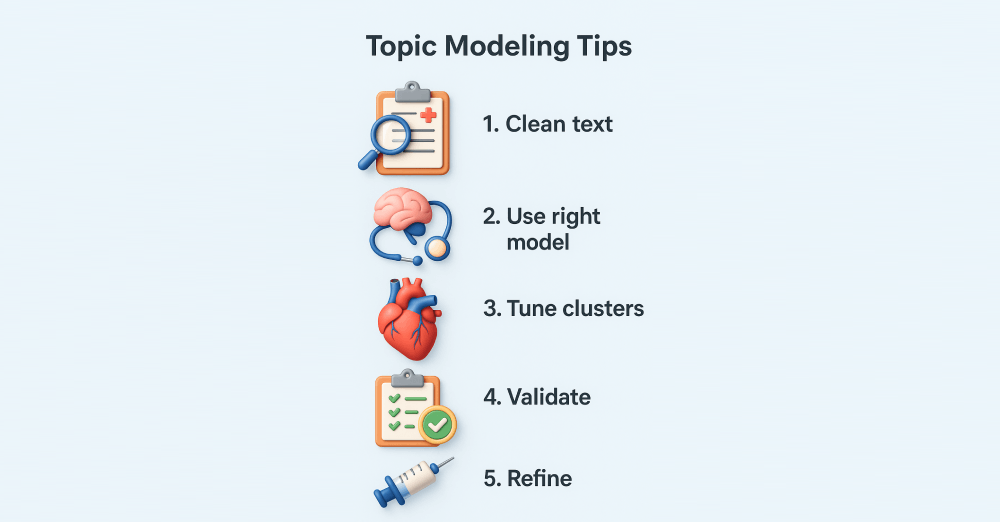

Several practices maximize success in topic modeling:

- Preprocessing rigor: Normalize spelling, handle medical abbreviations, and remove non-informative tokens.

- Embedding choice: Use domain-specific models when possible. BioBERT or ClinicalBERT capture medical language better than general BERT.

- Clustering tuning: Adjust HDBSCAN parameters. Small clusters capture rare signals but may fragment topics. Large clusters create broad themes. Balance is key.

- Evaluation: Use coherence metrics like NPMI and human expert review. Numbers alone cannot validate medical topics.

- Iterative refinement: Repeat preprocessing and clustering. Topic modeling is not one run but a cycle of refinement.

Challenges remain. Topics may overlap, making boundaries fuzzy. Outliers may distract or mislead. Interpretation requires expertise and sometimes manual merging. These issues remind us that topic modeling is not fully automated insight. It is a tool that augments human judgment.

Integration with Broader AI Tools

Topic modeling rarely stands alone. It integrates with larger AI pipelines.

One example is retrieval-augmented generation. Clusters from BERTopic can feed into the Graphlogic Generative AI platform. This creates conversational systems that deliver structured thematic answers. Instead of vague replies, systems can ground their output in topics detected from corpora.

Results can then be delivered in speech using the Graphlogic Text-to-Speech API. That makes findings accessible to clinicians, administrators, or patients in natural voice. For busy professionals, hearing insights can be faster than reading technical charts.

These integrations show the future direction: not just modeling text but embedding topic insights into workflows, communication, and decision support.

Future Outlook for Topic Modeling

By 2030 topic modeling will likely evolve into multimodal modeling. That means combining text with images, genomic data, and structured health records. BERT and BERTopic may integrate with models that read both text and images.

We will see greater automation in labeling. Already ChatGPT assists with auto-labeling of topics. In future, systems will create high quality summaries with minimal human input.

Privacy preserving topic modeling will also grow. Techniques such as federated learning and differential privacy will let hospitals share insights without sharing raw patient data. This will expand collaboration while protecting confidentiality.

Finally, topic modeling will merge with predictive analytics. Instead of only describing themes, models will forecast which topics are likely to grow. For public health, that could mean predicting the next major outbreak discussion from online signals.

Conclusion

Python topic modeling has become indispensable in 2025. With BERT and BERTopic, analysts can handle millions of documents and uncover coherent, interpretable themes. Hospitals, governments, and businesses rely on it to surface insights quickly.

The integration with conversational AI and text-to-speech makes results accessible beyond specialists. With careful preprocessing, proper embedding choices, and iterative refinement, topic modeling delivers not just clusters but actionable knowledge.

The future promises multimodal analysis, predictive topic forecasting, and privacy-preserving collaboration. Topic modeling is no longer a niche tool. It is now a pillar of modern healthcare and technology research, shaping decisions in an age of overwhelming information.

FAQ

You need basic Python knowledge. Skills in pandas, matplotlib, and scikit-learn help. No advanced math is required for initial projects.

Yes if data is deidentified and ethical approval is obtained. Processing must occur in secure environments.

If resources allow, use BioBERT or ClinicalBERT for medical data. If limited, DistilBERT or GloVe works with strong preprocessing.

Use coherence scores, diversity measures, and domain expert validation. Visualization helps but is not enough alone.

Refine preprocessing, adjust clustering, or merge topics manually. Clarity matters more than number of clusters.

Today it is text focused. By 2030 it may integrate with multimodal data including images and audio.

Yes. Hospitals use it to detect patterns in records, monitor adverse events, and analyze patient feedback. This supports better decisions.

Topics can be vague or overlapping. Outliers may distort insights. Expert review remains necessary.

Yes. Companies use it for customer feedback, legal document review, and market research.

It will remain central. Human experts need visual confirmation of clusters. Advanced visualization may move into interactive 3D dashboards.