Artificial intelligence now powers hospitals, legal offices, and classrooms. But fluency isn’t the same as understanding — and that gap remains the core challenge.

In 2025, leading models like GPT-5, GPT-4.1, Claude Opus 4, and o3 impress the world, yet their results tell a subtler story. GPT-5 reaches 91.4% on the MMLU benchmark, just above the estimated expert-human score of 89.8%. But a perfect score is out of reach: around 6.5% of the questions contain flaws.

This raises the real question: will AI ever outperform specialists in medicine, chemistry, or law — or will benchmarks like MMLU keep exposing the cracks? For developers, MMLU isn’t just a scoreboard. It’s a stress test that reveals where models fail, which domains need extra training, and how far we remain from safe, human-level reasoning.

Why MMLU Is Unique

MMLU (Measuring Massive Multitask Language Understanding) was introduced in 2020 and quickly became the gold standard for evaluating comprehension across disciplines. Unlike earlier tests that focused on predicting the next word, MMLU measures reasoning, problem-solving, and specialized knowledge.

It consists of 15,908 multiple-choice questions across 57 subjects, sourced from academic exams, licensing tests, and online education platforms. This breadth makes it far more realistic and challenging than benchmarks like GLUE or SuperGLUE.
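Each MMLU item is a multiple-choice question with four options (A to D) and one keyed answer, and models are scored by plain accuracy. The sketch below shows that loop. The prompt header is modeled on the one used in the original MMLU evaluation script as we understand it; the `Item` type, the `predict` callable standing in for a model, and the sample questions are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

LETTERS = "ABCD"  # MMLU items have exactly four options


@dataclass
class Item:
    subject: str            # e.g. "high_school_mathematics"
    question: str
    choices: Sequence[str]  # four answer options, in order A-D
    answer: str             # keyed letter, e.g. "B"


def format_prompt(item: Item) -> str:
    """Zero-shot prompt approximating the original MMLU evaluation format."""
    subject = item.subject.replace("_", " ")
    lines = [
        f"The following are multiple choice questions (with answers) about {subject}.",
        "",
        item.question,
    ]
    lines += [f"{letter}. {choice}" for letter, choice in zip(LETTERS, item.choices)]
    lines.append("Answer:")
    return "\n".join(lines)


def accuracy(items: Sequence[Item], predict: Callable[[str], str]) -> float:
    """Fraction of items whose predicted letter matches the answer key."""
    correct = sum(
        predict(format_prompt(item)).strip().upper()[:1] == item.answer for item in items
    )
    return correct / len(items)


# Hypothetical dummy "model" that always answers "B" (stand-in for a real LLM call).
def always_b(prompt: str) -> str:
    return "B"


items = [
    Item("high_school_mathematics", "What is 2 + 3?", ["4", "5", "6", "7"], "B"),
    Item("high_school_mathematics", "What is 3 * 3?", ["6", "8", "9", "12"], "C"),
]
print(accuracy(items, always_b))  # 0.5
```

Only the first letter of the model's reply is compared with the key, which keeps scoring strict: a fluent but wrong explanation earns nothing.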

Subject Coverage

MMLU’s 57 subjects are divided into four broad categories, each stretching AI models in unique ways.

Humanities

Includes law, philosophy, history, logic, and world religions. These subjects demand sensitivity to nuance, context, and interpretation. A question might ask which legal principle governs a case, which ethical framework an argument reflects, or which logical fallacy a passage commits. Success requires reasoning beyond surface-level memorization.

Social Sciences

Covers sociology, political science, economics, and psychology.

Here, models must grapple with human behavior, institutions, and abstract theories. They may be asked to predict the impact of a new policy, evaluate economic trade-offs, or identify psychological biases. This pushes AI closer to decision-making scenarios found in real life.

STEM

Spans physics, biology, mathematics, chemistry, and computer science.

This group emphasizes calculation and technical problem-solving. It’s not enough to recall formulas — models must apply them step by step, explain biological processes, or debug code. Multi-step reasoning in STEM remains one of the toughest challenges for AI.

Applied Fields

Includes medicine, accounting, marketing, and business (the original paper groups these under “Other”).

These subjects carry real-world consequences. A model may need to recommend a medical treatment, evaluate investment risks, or design a business strategy. Mistakes here aren’t just theoretical — they could affect health, money, or organizational decisions.

This diversity forces models to demonstrate true versatility — switching from abstract reasoning in philosophy, to quantitative rigor in mathematics, to evidence-based judgment in medicine. That constant shift is what makes MMLU uniquely powerful.

Performance Over Time

Over the past few years, language models have advanced at an unprecedented pace. From near-random guessing to surpassing human specialists in some areas, the progression is clear. The table below highlights the key milestones in accuracy across different generations of models.

| Model | MMLU score (%) | Notes |
|---|---|---|
| Early small models | ~25 | Essentially random guessing |
| GPT-3 | 43.9 | A major leap but still far from reliable |
| GPT-4 | 86+ | Pushing into near-expert territory |
| GPT-5 | 91.4 | Surpassing most human specialists |
| Falcon-40B (open-source) | 56.9 | Progress in open models, though still behind closed systems |

This timeline reveals a striking trend: every new generation narrows the gap between humans and machines, though the curve of improvement is slowing as models approach expert-level ceilings.

Comparative Leaderboard 2025

| Model | MMLU score (%) | Notes |
|---|---|---|
| GPT-5 | 91.4 | Current leader |
| GPT-4.1 | 90.2 | Strong reasoning abilities |
| o3 | 88.6 | Balanced performance |
| Claude Opus 4 | 87.4 | Competitive alternative |
| Falcon-40B | 56.9 | Open-source reference point (released 2023) |
| Smaller LLMs | ~25 | Random-guessing baseline |

Hidden Weaknesses

The leaderboard shows undeniable progress, but also lingering fragility. GPT-5, for instance, achieves near-human accuracy in social sciences, yet in chemistry its score falls to about 64% — a reminder that domain-specific reasoning still challenges even the best models.
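A single headline number can hide a weak subject. The sketch below groups per-question results by subject and reports the overall (micro) average, the unweighted mean across subjects (macro), and the weakest subject. The records are hypothetical toy data, not real GPT-5 results.

```python
from collections import defaultdict


def per_subject_accuracy(results):
    """results: list of (subject, is_correct) pairs -> {subject: accuracy}."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for subject, is_correct in results:
        totals[subject] += 1
        hits[subject] += int(is_correct)
    return {subject: hits[subject] / totals[subject] for subject in totals}


def summarize(results):
    """Return (micro average, macro average, weakest subject, per-subject scores)."""
    by_subject = per_subject_accuracy(results)
    micro = sum(int(c) for _, c in results) / len(results)  # every question weighs the same
    macro = sum(by_subject.values()) / len(by_subject)      # every subject weighs the same
    weakest = min(by_subject, key=by_subject.get)
    return micro, macro, weakest, by_subject


# Hypothetical toy results: strong on sociology, weak on chemistry.
toy = (
    [("sociology", True)] * 9
    + [("sociology", False)]
    + [("college_chemistry", True)] * 2
    + [("college_chemistry", False)] * 2
)
micro, macro, weakest, by_subject = summarize(toy)
print(f"micro={micro:.3f} macro={macro:.3f} weakest={weakest}")
# micro=0.786 macro=0.700 weakest=college_chemistry
```

Because MMLU subjects contain different numbers of questions, the two averages can diverge; reporting both, plus the weakest subjects, keeps a strong overall score from masking a weak domain.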

Human Baseline and Dataset Flaws

Human Performance

On MMLU, non-specialist humans (Amazon Mechanical Turk workers) average only 34.5%, not far above the 25% expected from random guessing on four-option questions. Experts, by contrast, are estimated to reach about 89.8%, which shows just how demanding the benchmark is. This contrast underscores a critical truth: the test measures deep, specialized knowledge and reasoning, not surface-level fluency.
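One way to see how little 34.5% means is to rescale accuracy so that chance maps to 0 and a perfect score to 1, using (accuracy - 1/k) / (1 - 1/k), where k is the number of answer options. This normalization is our illustration, not part of the official MMLU metric.

```python
def chance_adjusted(accuracy: float, num_options: int = 4) -> float:
    """Rescale accuracy so random guessing -> 0.0 and a perfect score -> 1.0."""
    chance = 1 / num_options
    return (accuracy - chance) / (1 - chance)


# Scores quoted in this article (the expert figure is an estimate).
baselines = [("non-specialists", 0.345), ("experts (est.)", 0.898), ("GPT-5", 0.914)]
for label, acc in baselines:
    print(f"{label:16s} raw={acc:.1%} adjusted={chance_adjusted(acc):.1%}")
```

On this scale non-specialists cover only about 12.7% of the distance from chance to perfect, while experts and GPT-5 land near 86.4% and 88.5%.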

Flaws in the Dataset

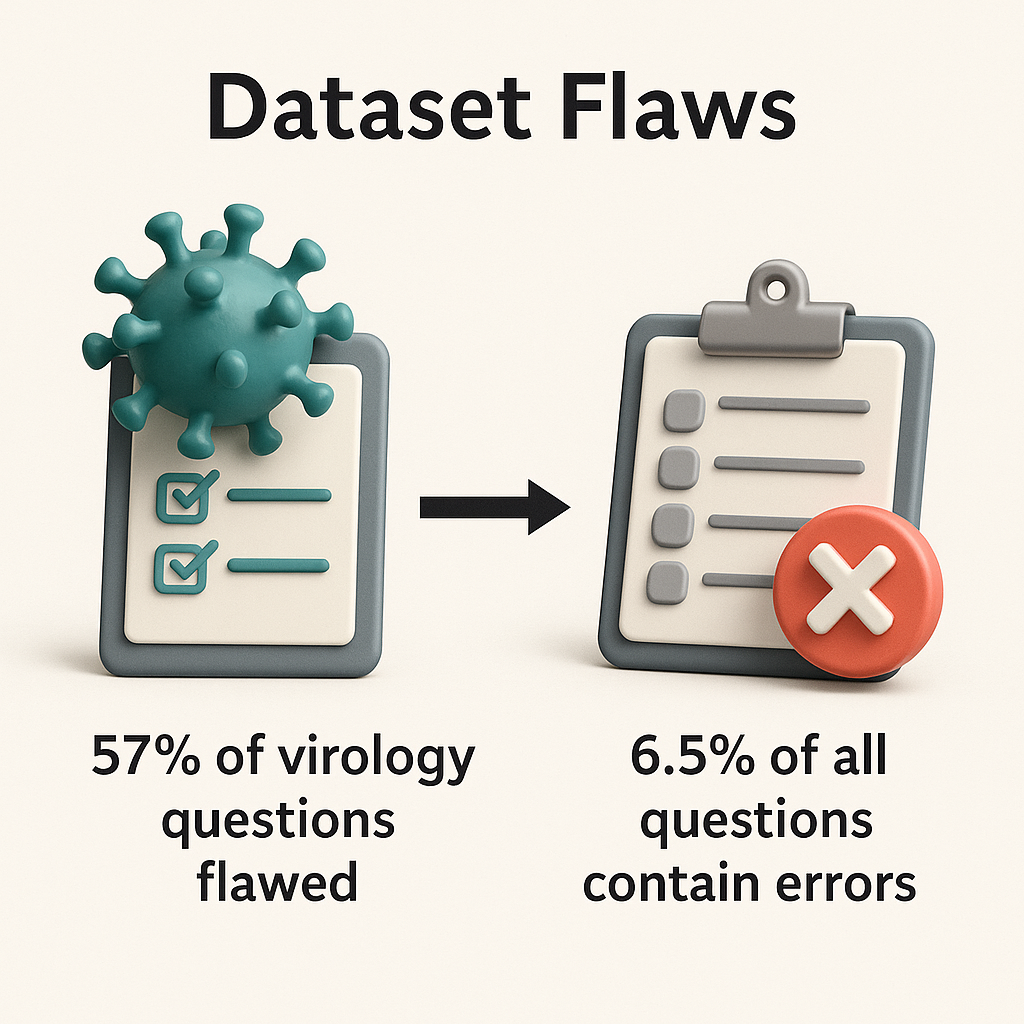

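Audits, most notably the 2024 MMLU-Redux re-annotation study (“Are We Done with MMLU?”), uncovered significant imperfections:

- 57% of the audited virology questions contain problems such as multiple valid answers or ambiguous wording.

- Overall, an estimated 6.5% of all MMLU questions are flawed.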

These errors cap the achievable score: because some answer keys are wrong or ambiguous, neither a model nor a human can reach 100% by answering on the merits. That means each incremental improvement above 80% represents progress not only against the subject matter but against the limits of the test itself.
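A back-of-the-envelope ceiling makes the 6.5% figure concrete. The sketch assumes an answerer who is always right on the merits and a parameter for how often such an answer still matches a flawed key; both the model and the 0.25 "lucky match" value (one in four options) are illustrative assumptions, not measurements.

```python
def effective_ceiling(flaw_rate: float, credit_on_flawed: float = 0.0) -> float:
    """Best expected accuracy for an answerer who is always right on the merits.

    flaw_rate: share of questions whose key is wrong or ambiguous (e.g. 0.065).
    credit_on_flawed: assumed chance that a correct answer still matches the key
    on a flawed item (0.0 = never; an illustrative assumption, not a measured value).
    """
    return (1 - flaw_rate) + flaw_rate * credit_on_flawed


gpt5 = 0.914  # GPT-5 score quoted above
for credit in (0.0, 0.25):
    ceiling = effective_ceiling(0.065, credit)
    print(f"credit={credit:.2f}: ceiling={ceiling:.1%}, headroom above GPT-5={ceiling - gpt5:.1%}")
```

Under these assumptions the ceiling sits between about 93.5% and 95.1%, leaving GPT-5 only two to four points of real headroom.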

Why It Matters

For developers and researchers, this imperfection is both a warning and a signal. Models aren’t just battling the complexity of human knowledge — they’re also being tested against an inherently noisy benchmark. The lesson: MMLU scores must be read critically, not blindly celebrated.

Trends Shaping AI Evaluation in 2025

From Static to Adaptive Benchmarks

Traditional benchmarks are reaching their limits. Once top models cluster above 90%, small score differences say little about real capability. Newer benchmarks like FrontierMath respond with research-level problems that are kept unpublished to limit contamination and are deliberately hard: earlier frontier models solved only about 2%, while o3 reached a reported 25.2%. This shift signals that the next generation of benchmarks will need to evolve alongside the models themselves.

Domain-Specific Evaluation

General-purpose benchmarks like MMLU are now complemented by highly specialized ones. MedQA and PubMedQA focus on medical reasoning, while LegalBench targets law. These are not academic exercises — errors in these domains could lead to misdiagnoses or flawed legal advice. The trend is clear: evaluation must move closer to the contexts where AI will actually operate.

Thinking Modes

Some models, such as OpenAI’s open-weight gpt-oss, offer multiple “thinking modes” (reasoning-effort levels). Low mode delivers quick but less accurate results, medium balances performance and runtime, and high mode aims for maximum accuracy at the cost of speed and compute. This flexibility hints at the future of AI deployment: users choosing between efficiency and depth depending on the stakes.
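Choosing a level is an application decision. The sketch below encodes one possible policy: high-stakes domains always get the highest level, and everything else gets the most thorough level that fits a latency budget. The `Effort` names mirror the low/medium/high modes described above; the latency figures and the `HIGH_STAKES` set are hypothetical placeholders, and the code calls no model API. How the chosen level reaches a model depends on the model or provider.

```python
from enum import Enum


class Effort(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical typical latencies (ms) per level; measure these for your own deployment.
TYPICAL_LATENCY_MS = {Effort.LOW: 500, Effort.MEDIUM: 2_000, Effort.HIGH: 8_000}

# Hypothetical domains where accuracy outweighs speed.
HIGH_STAKES = {"medicine", "law", "finance"}


def choose_effort(domain: str, latency_budget_ms: int) -> Effort:
    """Pick a reasoning-effort level for one request."""
    if domain in HIGH_STAKES:
        return Effort.HIGH  # accuracy first, regardless of latency
    # Otherwise take the most thorough level that fits the budget.
    for level in (Effort.HIGH, Effort.MEDIUM, Effort.LOW):
        if TYPICAL_LATENCY_MS[level] <= latency_budget_ms:
            return level
    return Effort.LOW  # nothing fits: fall back to the fastest level


print(choose_effort("chatbot", 1_000))   # Effort.LOW
print(choose_effort("chatbot", 3_000))   # Effort.MEDIUM
print(choose_effort("medicine", 1_000))  # Effort.HIGH
```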

Open-Source Push

Open models like Falcon-40B may trail closed systems in raw accuracy, but they lead in accessibility and transparency. Researchers can study, fine-tune, and adapt these systems more freely, accelerating innovation. As trust and auditability become just as important as raw scores, open-source progress is carving out a vital role in the ecosystem.

Societal and Practical Impacts

Education

For AI tutors, MMLU’s STEM results matter most, because in those subjects accuracy matters more than eloquence. A model that scores high on reasoning benchmarks is more likely to guide students safely through complex problem-solving than to offer misleading but fluent answers.

Medicine

Because MMLU includes clinical and biomedical questions, it pushes models closer to professional standards. A model with 90% accuracy won’t replace doctors, but paired with retrieval-augmented systems it can become a powerful assistant — helping with diagnoses, treatment guidelines, and medical literature search while keeping safety in focus.

Law and Ethics

Legal reasoning and moral judgment remain some of AI’s weakest points. By exposing these flaws, MMLU acts as a safeguard: identifying where models still misinterpret case law or fail ethical consistency before they are introduced into courts or compliance systems. This transparency is vital to avoid premature deployment in high-stakes domains.

Business and Finance

In applied fields such as finance, marketing, and enterprise strategy, benchmarks reveal whether models can handle reasoning under uncertainty. A system that scores poorly on MMLU’s applied subjects should not be trusted with high-stakes decisions involving investment, risk analysis, or corporate planning.

Guidance for Developers

High benchmark scores alone don’t guarantee safety or usefulness. To move from raw numbers to real-world reliability, developers need a practical approach to testing and deployment. Here are key practices to keep in mind:

- Combine benchmarks wisely. MMLU provides breadth, but real-world deployment demands depth. Always supplement it with domain-specific tests like MedQA for medicine or LegalBench for law.

- Avoid test contamination. Never train directly on benchmark questions. Overfitting makes scores meaningless and hides a model’s true weaknesses.

- Drill down by subject. Don’t rely on a single average score. Break down performance by subject area — weaknesses in chemistry, law, or finance may be hidden in the overall result.

- Leverage thinking modes. Use configurable modes (fast, balanced, accurate) depending on application needs. Speed may matter in chatbots, while accuracy is critical in medical or legal support.

- Add retrieval for reliability. Pair generative models with retrieval-augmented systems. External knowledge sources reduce hallucinations and make outputs verifiable.

- Check for ethical consistency. Go beyond accuracy. Test whether the model handles sensitive or high-stakes scenarios fairly, especially in applied domains like law, healthcare, or hiring.
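A lightweight way to act on the contamination advice is to scan training documents for long word n-grams they share with benchmark questions, then drop or review the matches before training. The sketch below is a minimal version of that idea; the default 13-word window echoes the 13-gram overlap used in the GPT-3 paper’s contamination analysis, while the tokenizer and function names are our own illustrative choices. Large pipelines typically hash n-grams to scale.

```python
import re
from typing import Iterable


def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Lowercased word n-grams; punctuation is dropped."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def flag_contaminated(
    train_docs: Iterable[str], benchmark_questions: Iterable[str], n: int = 13
) -> list[int]:
    """Return indices of training documents sharing any n-gram with a benchmark question."""
    bench: set[tuple[str, ...]] = set()
    for question in benchmark_questions:
        bench |= ngrams(question, n)
    return [i for i, doc in enumerate(train_docs) if ngrams(doc, n) & bench]


# Toy example with a short window so the overlap is visible.
questions = ["Which organelle is known as the powerhouse of the cell in eukaryotes?"]
docs = [
    "Quiz dump: which organelle is known as the powerhouse of the cell? Answer: mitochondria",
    "Mitochondria produce most of the cell's ATP through respiration.",
]
print(flag_contaminated(docs, questions, n=5))  # [0]
```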

From Benchmarks to Tools

Benchmarks like MMLU help researchers measure raw capability, but they don’t solve real problems on their own. To unlock value, benchmark insights must be embedded into usable systems. This is where Graphlogic tools act as a bridge between theory and practice.

Graphlogic Generative AI & Conversational Platform

The Graphlogic Generative AI & Conversational Platform integrates retrieval-augmented generation (RAG), which grounds answers in reliable sources instead of relying on the model’s memory alone. This makes outputs more trustworthy, auditable, and context-specific.
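The retrieval step at the heart of RAG can be illustrated generically: score each source passage against the query, keep the best matches, and place them in the prompt with ids so the answer can be checked. The sketch below uses bag-of-words cosine similarity as a stand-in for the embedding search a production system would use. It is not the Graphlogic API; the passages and prompt wording are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, passages: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k passages most similar to the query."""
    q = vectorize(query)
    ranked = sorted(passages, key=lambda pid: cosine(q, vectorize(passages[pid])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: dict[str, str], k: int = 2) -> str:
    """Ground the model: answer only from the retrieved, citable passages."""
    context = "\n".join(f"[{pid}] {passages[pid]}" for pid in retrieve(query, passages, k))
    return f"Answer using only the sources below and cite them by id.\n{context}\n\nQuestion: {query}"


# Hypothetical toy knowledge base.
passages = {
    "doc-a": "MMLU covers 57 subjects from elementary to professional level.",
    "doc-b": "MMLU-Pro uses ten answer options instead of four.",
    "doc-c": "FrontierMath problems are unpublished to limit contamination.",
}
query = "How many answer options does MMLU-Pro use?"
print(build_prompt(query, passages, k=1))
```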

Practical benefits:

- In medicine, doctors can query the platform for treatment guidelines or drug interactions, with references linked to trusted databases.

- In education, AI tutors trained with RAG provide students not just answers, but step-by-step reasoning supported by citations.

- In business, analysts use the system to pull data from internal documents and reports, reducing the risk of hallucinations in strategic decision-making.

Graphlogic Text-to-Speech API

The Graphlogic Text-to-Speech API converts advanced model outputs into clear, natural-sounding voice, making AI accessible beyond text. Unlike basic TTS systems, it emphasizes intonation, pacing, and clarity, enabling smoother human–AI interaction.

Practical benefits:

- In healthcare, spoken AI assistants can guide patients through medical instructions in simple, understandable language.

- In science and research, complex explanations can be delivered audibly for accessibility, supporting visually impaired professionals.

- In customer service, companies can deploy voice-based bots that sound less robotic, improving trust and engagement.

Why This Matters

Benchmarks like MMLU show us what AI can do in principle. Tools like Graphlogic’s platform and TTS API show us how to apply those capabilities safely and productively. Together, they close the gap between abstract scores and real-world impact — turning benchmark numbers into practical solutions for medicine, education, science, and enterprise.

Looking Beyond 2025

The next generation of benchmarks will not only measure whether models “know the answer.” They will test whether AI can be truthful, fair, efficient, and safe in the contexts where it matters most.

Global-MMLU and Multilingual Reach

As AI adoption spreads worldwide, evaluation cannot remain English-only. Global-MMLU expands into multilingual tasks, ensuring models reason effectively across languages and cultures. This is critical for healthcare, legal systems, and education in non-English contexts.

Medicine and Law: High-Stakes Benchmarks

Specialized evaluations are adding checks for patient safety in medicine and moral reasoning in law. For example, a benchmark may test whether a model avoids recommending unsafe treatments or whether it can weigh ethical trade-offs in legal disputes. These additions push AI closer to the standards required for professional trust.

Multi-Modal Intelligence

The future is not just text. Multi-modal benchmarks will combine text with images, graphs, or structured data — reflecting the complexity of real-world decision-making. From diagnosing medical scans to interpreting financial charts, models will need to reason across different forms of information seamlessly.

Efficiency and Sustainability

Raw accuracy is no longer enough. Training a single frontier model can cost millions of dollars, and serving it at scale adds ongoing compute and energy costs. New benchmarks are likely to measure performance per dollar and per kilowatt-hour, rewarding models that achieve more with less energy. This shift could redefine “state of the art” as both powerful and sustainable.
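An efficiency metric of this kind is simple arithmetic: divide accuracy by the money or energy spent to obtain it. The sketch below ranks model runs by accuracy points per kWh, with an accuracy floor. All numbers are invented placeholders, and real efficiency benchmarks may define cost differently (for example, including training).

```python
from dataclasses import dataclass


@dataclass
class Run:
    name: str
    accuracy_pct: float  # benchmark score, 0-100
    cost_usd: float      # spend for the run being compared
    energy_kwh: float    # energy for the same run

    @property
    def points_per_dollar(self) -> float:
        return self.accuracy_pct / self.cost_usd

    @property
    def points_per_kwh(self) -> float:
        return self.accuracy_pct / self.energy_kwh


def rank_by_energy(runs, min_accuracy_pct=0.0):
    """Sort runs by accuracy points per kWh, ignoring runs below an accuracy floor."""
    eligible = [r for r in runs if r.accuracy_pct >= min_accuracy_pct]
    return sorted(eligible, key=lambda r: r.points_per_kwh, reverse=True)


# Invented placeholder numbers, for illustration only.
runs = [
    Run("large-model", accuracy_pct=90.0, cost_usd=300.0, energy_kwh=60.0),
    Run("small-model", accuracy_pct=80.0, cost_usd=40.0, energy_kwh=10.0),
    Run("tiny-model", accuracy_pct=30.0, cost_usd=2.0, energy_kwh=1.0),
]
ranked = rank_by_energy(runs, min_accuracy_pct=75.0)
for r in ranked:
    print(f"{r.name}: {r.points_per_dollar:.2f} pts/$, {r.points_per_kwh:.1f} pts/kWh")
```

The floor matters: without it, the near-chance `tiny-model` would top the ranking at 30 points per kWh, which is exactly the kind of result an efficiency benchmark should not reward.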

Key Takeaways

- MMLU remains the most comprehensive benchmark in 2025, spanning 57 subjects and 15,908 questions.

- Non-specialists: ~34.5%. Experts: ~90%. GPT-5: ~91.4%.

- Strengths: progress toward human-level reasoning. Weaknesses: domains like chemistry and ethics.

- Trends: adaptive benchmarks, domain specialization, thinking modes, open-source growth.

- Practical tools like Graphlogic’s platform and TTS API help bring benchmarked AI into society safely.

Bottom line: MMLU keeps AI honest. It shows us not just what machines can do — but also what they cannot. That transparency is what makes it vital.

FAQ

What does MMLU stand for?

MMLU stands for Measuring Massive Multitask Language Understanding, a benchmark introduced in 2020.

How large is the MMLU dataset?

The dataset contains 15,908 multiple-choice questions across 57 subjects, ranging from elementary to professional level.

Who created MMLU?

It was introduced by Dan Hendrycks and colleagues as a way to evaluate broad reasoning and knowledge.

Why do models score unevenly across subjects?

Some domains require memorization; others demand calculation or ethical reasoning. Models handle factual recall better than complex reasoning, which explains the uneven results.

Can any model score 100% on MMLU?

No. Around 6.5% of the questions are flawed or ambiguous, which caps the achievable maximum. Even GPT-5 still answers nearly one in ten questions incorrectly.

What is MMLU-Pro?

MMLU-Pro is an advanced version of the benchmark with harder, more reasoning-focused tasks and ten answer options per question instead of four, designed to push models beyond the original test.

Why do open-source models score lower?

They often have fewer training resources and smaller datasets than closed corporate models. Still, open-source systems progress quickly and excel in transparency.

Will benchmark datasets remain publicly available?

Yes, but expect more limited or guarded releases. Some labs already provide only subsets of benchmarks to prevent training contamination.