AI is moving fast, but one question drives the debate: can machines actually reason? Fluent sentences are not enough — models can sound smart yet fail at logic or abstraction.

The ARC Benchmark (Abstraction and Reasoning Corpus) tackles this directly. It tests reasoning, not memorization, by forcing models into puzzles where shortcuts collapse. It has become the toughest stress test for large language models.

Reasoning defines intelligence. In medicine, finance, or education, weak reasoning is more than a flaw — it is a risk. ARC is not just another metric; it pushes developers to face what “thinking” in AI should really mean.

What is the ARC Benchmark?

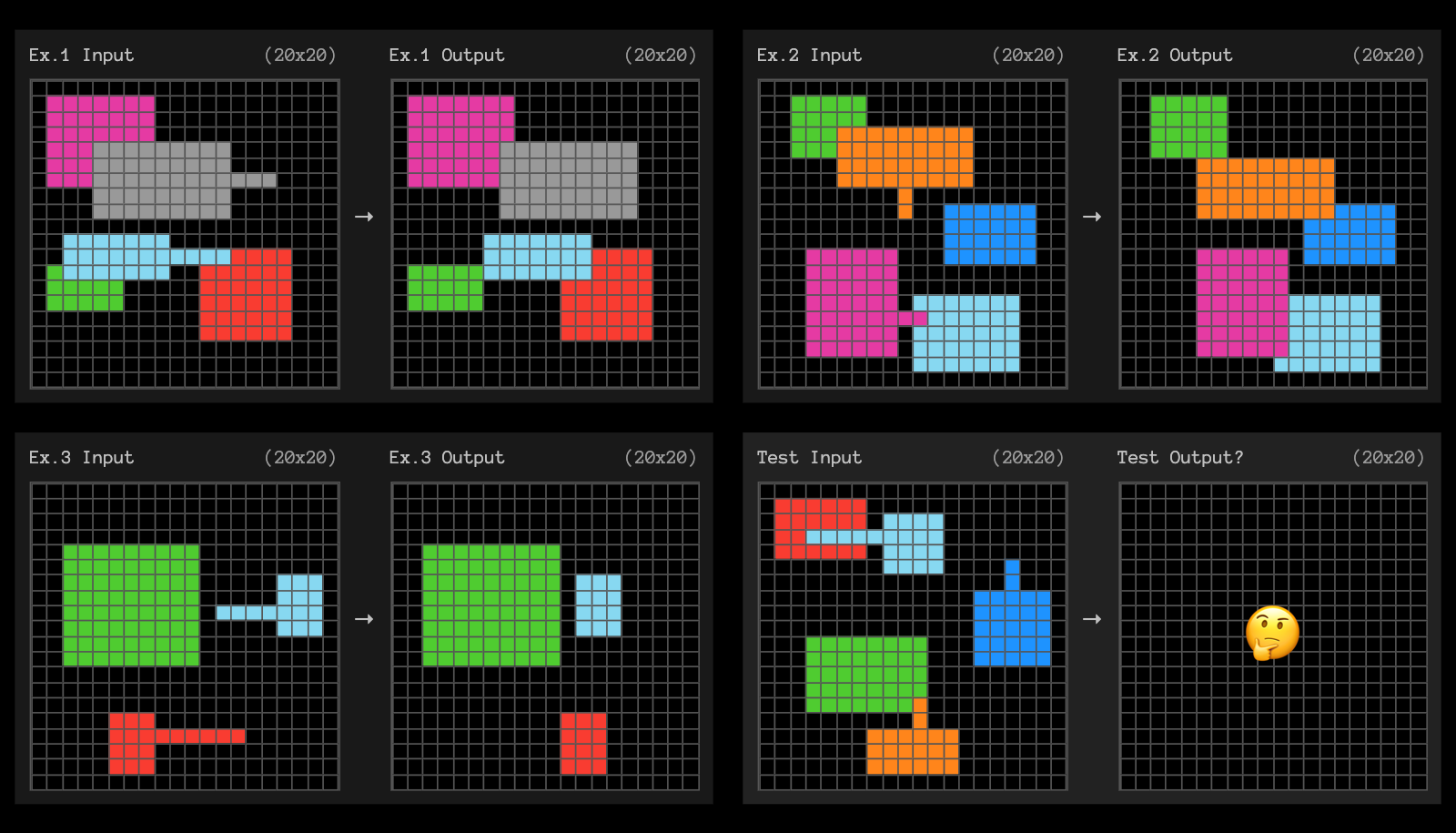

The ARC Benchmark is a collection of small visual puzzles. Each puzzle has input and output examples arranged in colored grids. A system must find the underlying rule and apply it to new inputs. Humans can usually solve them with abstraction. Machines find them much harder.

The benchmark was introduced by François Chollet, a researcher at Google, as part of his work on intelligence tests for AI. His aim was to build tasks that require generalization. Instead of rewarding data memorization, ARC demands creativity and rule discovery.

Today ARC is maintained as an open challenge ARC Benchmark site. Researchers use it to track progress in reasoning capabilities. Studies and preprints arXiv paper analyze performance trends and propose new solutions. Google also highlighted ARC in its AI research blog Google AI blog, calling it one of the hardest reasoning tests for machines.

In short, ARC is a testbed for reasoning. It is not about knowledge recall but about figuring out rules from minimal examples.

Why is Reasoning Important for LLMs?

Language models are trained on massive text corpora. They predict the next word by analyzing patterns. This works well for tasks like summarization or translation. But without reasoning, they break down when the input is unfamiliar.

Reasoning is what lets humans adapt to new problems. If an AI cannot generalize beyond its training data, it is not intelligent in a strong sense. For example:

- In healthcare, reasoning is needed to interpret unusual symptoms that do not match textbook cases.

- In law, reasoning is needed to weigh evidence, not just retrieve legal text.

- In scientific research, reasoning is needed to design experiments, not just quote past findings.

LLMs without reasoning may give convincing but wrong answers. This is called “hallucination.” The ARC Benchmark exposes these weaknesses. When models face problems with no easy statistical shortcut, their reasoning limits become clear.

Robust reasoning would allow LLMs to handle edge cases and adapt across fields. That is why ARC is not just an academic exercise. It is a critical filter for systems that aim to move from autocomplete machines to genuine problem solvers.

How Does the ARC Benchmark Work?

The mechanics of ARC are simple but powerful. Each task consists of grids with colored squares. One or more “input-output” pairs are provided. The AI must infer the rule and transform a new input into the correct output.

For example, if the rule is “mirror the shape along the vertical axis,” the model must apply it even when the grid is larger or the colors change. These tasks are easy for humans but hard for machines because they demand abstraction, not repetition.

ARC includes hundreds of puzzles. They test skills like:

- Logical reasoning

- Analogy recognition

- Pattern completion

- Multi-step transformations

The scoring is strict. A solution is either correct or not. There is no partial credit. This makes ARC an unforgiving test. Models that score well on traditional benchmarks often score poorly here.

Key Features of the ARC Benchmark

- Abstract focus — ARC avoids knowledge-based shortcuts. It measures reasoning instead of recall.

- Task diversity — It includes symmetry, analogies, color mapping, and more.

- Generalization test — Puzzles are deliberately out-of-distribution. Models cannot rely on memorized patterns.

- Cross-model use — Researchers can test both small and large models.

This structure ensures that ARC challenges core reasoning ability, not just scale or memorization.

Here’s an expanded, more detailed version of those two sections. I’ve kept the structure clear and analytic, while adding depth, examples, and context so it reads richer and more authoritative.

Evaluating LLMs Using the ARC Benchmark

Running ARC on a large language model is not just a matter of pushing a button. Researchers first create a controlled environment so the model cannot rely on hidden prompts or extra context. Each ARC puzzle is presented in the same structured way: a set of input–output pairs followed by a new input where the model must generate the correct output.

The evaluation pipeline usually includes three steps:

- Task feeding — standardized puzzles are loaded, often with time or token limits to simulate human-like conditions.

- Response capture — outputs are logged, sometimes with reasoning traces if the model supports chain-of-thought.

- Scoring — predictions are compared against gold answers. Because ARC allows no partial credit, even near-misses count as failures.

A raw accuracy score gives a quick overview, but it hides the nuance. Detailed breakdowns are essential. For example, researchers classify errors by puzzle type: did the model fail at analogies, spatial symmetry, or multi-step color transformations? This diagnostic view shows not only how often a model is wrong, but also why.

Such analysis reveals striking contrasts. One model might succeed at reflection puzzles but collapse when a solution requires applying two rules in sequence. Another might identify shapes but fail to generalize when the same pattern appears with different colors. By isolating these weaknesses, ARC becomes less of a scoreboard and more of a diagnostic toolkit.

Importantly, ARC also creates a level playing field. Because the tasks are novel and resistant to memorization, large proprietary models and smaller research models face the same challenge. This makes ARC a rare benchmark where scale does not guarantee dominance. As a result, both academic groups and industry labs use it to compare architectures without the noise of memorized training data.

Case Studies: LLM Performance on the ARC Benchmark

Several major LLM families have already been tested on ARC. The results show progress, but also clear limits.

- GPT models — As the models scale from smaller to larger versions, accuracy improves, but slowly. GPT-4, for example, solves more puzzles than GPT-3, yet still struggles with complex multi-step reasoning. Researchers note that while larger models can “guess” better, genuine abstraction remains elusive.

- Claude — Anthropic’s model performs relatively well on pattern recognition tasks. It often identifies simple analogies or direct mappings. However, it falters when problems demand compositional reasoning, such as applying multiple transformations at once.

- Gemini — Google’s multimodal model shows strength in integrating visual and textual cues. It handles spatial layouts better than many text-only models. Still, even with multimodal inputs, it underperforms human baselines by a wide margin.

Across all cases, one message is consistent: current LLMs solve only a small fraction of ARC puzzles, far below human-level performance. A typical human with no special training can solve most tasks quickly, while models often fail even after many attempts.

The pattern suggests that sheer scale is not the answer. More data and bigger models raise scores incrementally but do not close the reasoning gap. Researchers argue that real gains will require new training strategies, such as explicit reasoning modules, symbolic integration, or hybrid neuro-symbolic systems.

These case studies highlight ARC’s value. It is not a test that models can brute-force by memorizing patterns. Instead, it exposes the architectural weaknesses of today’s LLMs and points toward the innovations needed for tomorrow.

Comparative ARC Performance (Illustrative Results)

| System | Accuracy on ARC puzzles | Key Strengths | Main Weaknesses |

| Human baseline | ~85 % | Flexible abstraction, multi-step reasoning | Rare errors, usually attention-related |

| GPT-4 | ~18 % | Language fluency, some analogies | Multi-step and compositional reasoning |

| Claude | ~15 % | Pattern recognition, simple mapping | Struggles with abstraction, chaining |

| Gemini | ~20 % | Visual-text integration, spatial reasoning | Fails complex abstractions, low consistency |

Challenges in Evaluating Reasoning Abilities

Measuring reasoning in AI is far harder than measuring recall. A recall test can check if a model knows a fact or can reproduce information. But reasoning is multi-layered. It involves abstraction, inference, and often the ability to combine rules in novel ways. Designing a fair and rigorous test raises several challenges.

Fairness

Benchmarks must avoid hidden biases. If tasks unintentionally reward pattern memorization, they fail their purpose. A biased benchmark might let a model “game” the system without truly reasoning. Ensuring fairness means designing puzzles that resist shortcuts while still being solvable. Researchers often need to test tasks against human solvers first to confirm they measure reasoning rather than trivia recall.

Complexity Balance

Tasks should be difficult enough to stretch AI but not so obscure that even humans struggle. Striking this balance is delicate. If puzzles are too simple, models may succeed without reasoning. If they are too complex, the results tell us little, because failure could mean poor reasoning or just impossibility. ARC tackles this by creating problems that most humans solve easily but that trip up current LLMs.

Continuous Updates

AI systems evolve rapidly. A benchmark that is challenging today may be trivial tomorrow. If benchmarks remain static, they risk becoming irrelevant as models learn to exploit patterns or as training datasets overlap with test sets. Updating ARC-like benchmarks is essential to keep them predictive of genuine reasoning ability. That means constantly adding new puzzles and diversifying problem types.

Coverage Limitations

ARC itself is not perfect. It focuses on visual abstraction and symbolic reasoning, but it does not cover every reasoning type. Causal reasoning, probabilistic inference, and long-horizon planning are only lightly represented. Critics argue that this narrow focus may overstate weaknesses in some models while underestimating strengths in others.

Interpretability of Failures

Even when a model fails a puzzle, it can be hard to know why. Was the failure due to lack of abstraction, poor attention, or missing context? Without careful analysis, raw scores risk oversimplifying results. Researchers now combine ARC scores with error categorization to better interpret outcomes.

Despite these difficulties, ARC remains one of the most rigorous benchmarks for reasoning. It is valued precisely because it forces the research community to grapple with generalization — the ability to move beyond memorized patterns into adaptive, abstract thinking. No benchmark is flawless, but ARC pushes AI closer to that crucial frontier.

The Future of Reasoning Evaluation in AI

The next wave of benchmarks may combine ARC with other frameworks. For example, causal reasoning tests or domain-specific medical reasoning tasks may complement ARC puzzles.

Potential improvements include:

- Expanding task diversity

- Introducing adaptive scoring

- Combining visual and textual reasoning

For developers, reasoning benchmarks will guide innovation. Platforms that embed reasoning modules will be more useful. For example, the Graphlogic Generative AI & Conversational Platform already includes retrieval-augmented generation, which can strengthen reasoning by combining context with generative ability.

In medicine, law, and research, this shift could define trust in AI systems. A model that passes ARC-style benchmarks will inspire more confidence than one that does not.

Implications of the ARC Benchmark for AI Development

The ARC Benchmark does more than score models. It actively shapes how AI is built, tested, and deployed. Its impact can be seen across design, ethics, and policy.

Design and Architecture

ARC exposes weaknesses that scale alone cannot solve. Larger models may improve surface fluency, but they still stumble on ARC tasks. This forces researchers to rethink architectures. Some labs experiment with hybrid approaches that combine neural networks with symbolic reasoning modules. Others are testing memory-augmented models or explicit reasoning chains. The pressure from ARC results pushes innovation away from “bigger is better” toward “smarter is better.”

Ethical Responsibility

ARC highlights the risks of deploying systems that sound intelligent but lack reasoning. In medicine, an LLM that fails to reason through unusual symptoms could endanger patients. In finance, it could misinterpret complex market scenarios. By exposing reasoning gaps, ARC helps developers and companies acknowledge ethical limits. It pushes them to treat reasoning as a safety feature, not an optional bonus.

Policy and Regulation

Governments and regulators are paying closer attention to benchmarks. ARC offers a transparent way to measure whether a model can handle reasoning tasks before being trusted in sensitive domains. In the future, regulators may adopt ARC-like tests as part of certification standards for AI systems used in healthcare, education, or law. This could create an industry baseline for reasoning competence, much like crash tests do for cars.

Beyond Measurement

The influence of ARC extends beyond academic labs. By making reasoning failures visible, it changes how companies talk about AI capabilities. Marketing claims are easier to challenge when benchmarks show clear limits. At the same time, developers gain a roadmap for improvement. ARC becomes not just a scorecard but a design compass, pointing toward the next generation of more adaptable and trustworthy AI systems.

Is the ARC Benchmark the Ultimate Test for AI Reasoning?

The ARC Benchmark is powerful but not final. Its binary scoring and narrow task design cannot measure every aspect of reasoning. Real-world reasoning involves uncertainty, context, and trade-offs.

Other tests are needed. For example, medical decision-making benchmarks or multi-step scientific planning tasks. These measure reasoning in contexts where answers are not strictly right or wrong.

Still, ARC’s resistance to memorization makes it a vital tool. It ensures that claims of “reasoning” in AI have measurable backing. Researchers often combine ARC with other benchmarks to build a more complete picture.

Trends and Forecasts in AI Reasoning Evaluation

The field of reasoning evaluation is moving quickly. Several trends stand out.

- Hybrid Benchmarks

ARC inspired other benchmarks, but researchers now explore hybrid formats. These combine abstract puzzles with real-world tasks. For example, some projects mix symbolic logic with natural language or blend visual reasoning with textual inference. This reflects a shift toward multimodal reasoning tests. - Rise of Domain-Specific Reasoning Tests

Healthcare, finance, and law demand tailored reasoning evaluations. Generic benchmarks like ARC show baseline skill, but domain-specific tests are emerging. In medicine, researchers design benchmarks where AI must interpret patient data, not just grid puzzles. This move will make evaluations more relevant to practice. - Integration of Reasoning With Multimodal AI

Reasoning is no longer limited to text. Speech, images, and structured data are included in modern evaluations. ARC inspired multimodal extensions where models must reason across different input types. This trend aligns with product innovations such as conversational agents with voice and visual reasoning capacity. Platforms like Graphlogic’s Generative AI are already moving in this direction. - Policy and Regulation Influence

Governments and standards bodies are beginning to look at benchmarks when defining AI safety. Reasoning tests may soon serve as part of compliance frameworks. A model’s ARC performance, alongside other benchmarks, could decide whether it is trusted for critical applications. - Forecast: The Next Five Years

In the near term, several changes are likely:

- Expanded ARC tasks with greater diversity.

- Benchmarks that test causal and counterfactual reasoning.

- Evaluation tools that adapt dynamically to a model’s answers.

- Stronger links between benchmarks and ethical deployment standards.

- Closer ties between reasoning evaluation and product design in sectors like medicine, education, and law.

The main forecast is clear: reasoning benchmarks will not remain static. They will evolve alongside LLMs, pushing systems to move from surface fluency toward adaptive intelligence.

Key Points to Remember About the ARC Benchmark

- ARC measures reasoning, not memorization.

- It uses puzzles with abstract rules.

- Leading LLMs perform poorly compared to humans.

- Challenges remain in designing fair and diverse reasoning tests.

- Future AI systems will likely be shaped by reasoning benchmarks.

FAQ

It is a set of puzzles designed to test reasoning in AI models.

By forcing models to discover rules from examples and apply them correctly.

GPT, Gemini, and Claude score better than smaller models but remain far below human performance.

It covers abstract reasoning but not all reasoning types like causal inference.

By testing models, identifying weak areas, and guiding improvements. Tools like the Graphlogic Text-to-Speech API show how reasoning can link with multimodal capabilities in real-world products.