Voice cloning is changing how we hear and speak with machines. AI can now copy a human voice so well that it sounds real, not robotic. Entertainment, healthcare, and customer service are already using it, while experts warn about fraud and privacy risks. This article explains what voice cloning is, how it works, its benefits, its dangers, and where it is heading.

What Is Voice Cloning?

Voice cloning is the process of creating a digital copy of a human voice. It makes it possible for machines to generate speech that sounds like a particular person.

The first digital voices were early text-to-speech engines. They could read text but sounded unnatural. Over the last decade, deep learning has transformed the field. Neural networks can now capture subtle aspects of human speech.

Voice cloning is not only a technical achievement. It is also a way to preserve personal identity. Researchers at MIT CSAIL and Stanford HAI show that cloned voices help people with speech loss maintain emotional connection. A personalized synthetic voice can reduce the sense of alienation that often comes with illness.

Voice cloning also supports cultural preservation. Some researchers use it to keep endangered languages alive. By recording a small number of native speakers, AI can generate speech in voices that match the original dialect.

How Does AI Voice Cloning Work?

The process of creating a cloned voice usually has three steps.

Data collection. Systems record voice samples. These samples capture pitch, accent, and individual speech habits. The more varied the dataset, the better the model can generalize.

Model training. Algorithms process the data. They learn the unique features of a person’s voice, including how they pronounce words, where they pause, and how they emphasize certain sounds.

Synthesis. After training, the model generates new speech. It can read new sentences in a voice that closely resembles the original.
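The three steps above can be sketched as a toy pipeline. This is only an illustration of the data flow, not a real cloning system: averaging stands in for neural network training, a sine tone stands in for generated speech, and every name here (`VoiceSample`, `VoiceProfile`, `collect_samples`, `train`, `synthesize`) is hypothetical.

```python
import math
from dataclasses import dataclass
from statistics import mean


@dataclass
class VoiceSample:
    """One recorded utterance, reduced to toy measurements (hypothetical fields)."""
    pitch_hz: float          # average fundamental frequency of the clip
    words_per_second: float  # speaking rate


@dataclass
class VoiceProfile:
    """What the toy 'model' learns about a speaker."""
    pitch_hz: float
    words_per_second: float


def collect_samples() -> list[VoiceSample]:
    # Step 1, data collection: in practice these values come from recordings.
    return [VoiceSample(118.0, 2.4), VoiceSample(122.0, 2.6), VoiceSample(120.0, 2.5)]


def train(samples: list[VoiceSample]) -> VoiceProfile:
    # Step 2, model training: averaging stands in for fitting a neural network.
    return VoiceProfile(
        pitch_hz=mean(s.pitch_hz for s in samples),
        words_per_second=mean(s.words_per_second for s in samples),
    )


def synthesize(profile: VoiceProfile, text: str, sample_rate: int = 8000) -> list[float]:
    # Step 3, synthesis: emit a sine tone at the learned pitch, lasting as long
    # as the speaker would take to say the text. Real systems emit speech.
    duration_s = len(text.split()) / profile.words_per_second
    n = int(duration_s * sample_rate)
    return [math.sin(2 * math.pi * profile.pitch_hz * t / sample_rate) for t in range(n)]


profile = train(collect_samples())
audio = synthesize(profile, "hello from a cloned voice")
print(profile, len(audio))
```

The point of the sketch is the separation of stages: the profile learned in training is the only thing synthesis needs, which is why a trained model can read sentences the speaker never recorded.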

Modern systems differ in how much data they need. Some need several hours of recordings. Others can work with less than five minutes of speech. Generative models like DeepMind’s WaveNet demonstrate how AI can replicate the complexity of human sound, including natural prosody and emotion.

Recent research also explores zero-shot cloning, where the system generates a convincing voice after hearing only a short phrase. This lowers the barrier to entry but also increases security risks.

Key Technologies Behind Voice Cloning

The main technologies driving voice cloning include neural networks, spectrogram-based models, and natural language processing.

WaveNet is a neural network that generates raw audio waveforms sample by sample, and it set a new standard for speech quality. Tacotron is another model that converts text into spectrograms, which a separate vocoder then turns into sound. Natural language processing helps ensure that generated speech matches the meaning and context of the text.
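To make the term concrete, a spectrogram is a grid of frequency strengths over time. The sketch below computes one with a naive discrete Fourier transform using only the standard library. It is an assumption-laden illustration, not Tacotron's actual representation: production systems typically use mel-scaled spectrograms and fast FFT routines, and the function names, frame size, and test tone here are chosen for the example.

```python
import cmath
import math


def dft_magnitudes(frame: list[float]) -> list[float]:
    """Magnitude of each frequency bin from 0 up to the Nyquist bin (naive O(n^2) DFT)."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]


def spectrogram(signal: list[float], frame_size: int = 64, hop: int = 32) -> list[list[float]]:
    """Slice the signal into overlapping, Hann-windowed frames and take the DFT of each."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / frame_size) for i in range(frame_size)]
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = [signal[start + i] * window[i] for i in range(frame_size)]
        frames.append(dft_magnitudes(frame))
    return frames  # indexed as frames[time][frequency_bin]


# A 1 kHz test tone sampled at 8 kHz stands in for recorded speech.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(512)]
spec = spectrogram(tone)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(len(spec), "frames, peak at", peak_bin * sample_rate / 64, "Hz")
```

A text-to-spectrogram model predicts a grid like `spec` directly from text, and the vocoder stage reverses the analysis shown here to produce a waveform.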

These technologies are often combined with large language models. This allows systems not only to reproduce sound but also to generate content that fits the speaker’s style. The result is speech that sounds human both in form and in content.

Applications of Voice Cloning Technology

Voice cloning has already reached many industries.

- Entertainment. Studios use cloned voices in film dubbing, animation, and video games. Instead of hiring actors for long recording sessions, they generate new lines instantly. In 2024, several production houses reported cutting costs by over 40% by adopting AI-based dubbing.

- Accessibility. People with conditions like ALS or throat cancer can preserve their voice in advance. Later they can use devices to speak again with their own synthetic voice. Research indexed by NCBI reports that patients feel more connected when using a personalized digital voice than when using a generic one.

- Customer service. Companies design assistants that sound more natural. This improves trust and makes customer support less frustrating. Some banks have tested AI voices for account information lines.

- Healthcare training. Medical schools experiment with AI patients who can speak in different tones, ages, and accents. This helps prepare students for real-world diversity.

- Education. Language learning platforms use voice cloning to simulate local accents. Learners practice pronunciation with voices that feel authentic.

- Journalism. Media organizations test AI voices for translation and narration. This reduces the need for multiple recording teams.

The variety of use cases shows how broad the potential of this technology is.

Benefits of Voice Cloning

The advantages of voice cloning are clear.

- Personalization. Services can adapt to individuals by using familiar voices. This strengthens emotional connection.

- Efficiency. Production costs drop when new lines can be generated instantly instead of recorded in studios.

- Accessibility. Patients with voice loss regain the ability to speak in their own style.

- Scalability. Developers can test products across many voices without hiring large teams.

For businesses, voice cloning makes it possible to expand quickly into multiple languages and regions. For creators, it removes barriers to content production. Tools like the Graphlogic Text to Speech API make this integration simple and effective.

Risks and Ethical Concerns of Voice Cloning

Voice cloning also introduces serious risks.

Fraud is already a major issue. Criminals use cloned voices to impersonate relatives or executives and convince people to transfer money. In 2023 a UK company reported losing over $200,000 to such a scam. The National Institute of Standards and Technology has documented a rising number of cases.

Privacy is another concern. Voices are biometric data. If stolen, they could be misused to bypass security systems that rely on voice recognition.

Consent is perhaps the most sensitive issue. Using someone’s voice without permission violates rights. Legal experts compare it to using a person’s image without consent. Some countries are already discussing laws to regulate digital voice ownership.

Finally, there is the cultural impact. If companies clone voices of actors, speakers, or politicians, who owns the rights to those voices? The debate is ongoing.

Real Life Examples of Voice Cloning

The technology is no longer experimental.

In 2024 a streaming platform used AI to dub foreign shows into English with cloned voices of famous actors. This cut production time in half.

Video game studios employ AI voices to generate hundreds of characters. Players report that voices feel more diverse and natural compared to older games.

Assistive devices are now widely available for patients. They allow people with speech loss to talk again using their own preserved voices.

Journalists have recreated historical speeches using cloned voices to provide audiences with more authentic experiences. Educational apps have launched lessons that simulate regional accents for learners worldwide.

Trends and Forecasts in Voice Cloning

Analysts expect the global market for synthetic voices to grow into billions of dollars by 2030.

Key trends include:

- Integration with immersive technologies. Virtual reality and augmented reality systems will use cloned voices to make avatars more realistic.

- Security features. Companies are developing watermarking techniques to mark AI-generated voices and prevent misuse.

- Legal frameworks. Europe and the United States are moving toward laws that define ownership and consent for digital voices.

- Multilingual support. Future models will handle dozens of languages, enabling global applications.

Platforms such as the Graphlogic Generative AI and Conversational Platform already show what is possible when voice cloning is combined with conversational AI and avatars.

Forbes predicts that voice cloning will become a standard tool for businesses by 2030. NVIDIA points to the role of deep learning in creating voices that are almost indistinguishable from human speech.

One important forecast is the development of ethical watermarking. This would embed inaudible signals in AI-generated voices, allowing detection without affecting quality. Another trend is personalization at scale, where every customer interacts with a service using a custom cloned voice.

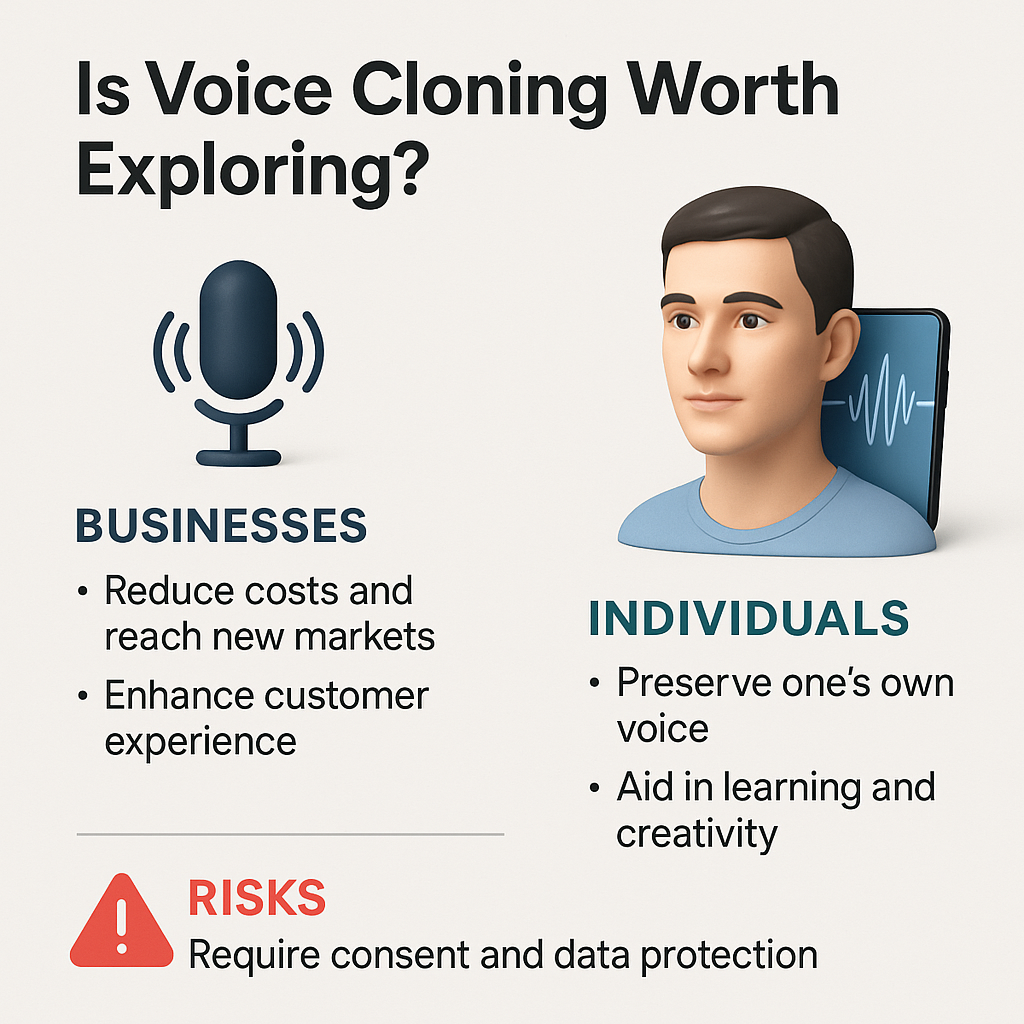

Is Voice Cloning Worth Exploring?

Voice cloning is no longer optional for businesses that want to stay competitive. The potential is huge. Media companies cut costs by generating voices instead of recording them. Brands reach global markets by cloning voices in multiple languages. Customer service teams improve user experience with assistants that sound real, not robotic.

For individuals, the value is personal. Patients can preserve their own voice against illness. Learners can train with native-sounding AI tutors. Creators can produce podcasts or audiobooks without studios. Voice cloning is already making communication more inclusive.

Yet the risks are just as real. Without consent and transparency, public trust will erode. Organizations must protect voice data with encryption, use watermarking to flag AI voices, and disclose clearly when speech is machine-generated.

The question is not whether voice cloning will spread but who will manage it responsibly. Companies that adopt ethical policies and open communication will gain trust. Those that ignore the risks will face backlash and regulation.

Voice cloning is worth exploring if it is done with care. Used responsibly, it can transform industries, empower individuals, and expand human communication.

Legal and Regulatory Landscape

Voice cloning is advancing faster than laws can catch up. Yet regulators in many regions are beginning to react.

In the European Union, the AI Act, adopted in 2024, places transparency obligations on synthetic media rather than banning it. Companies that generate or deploy synthetic voices are required to make clear that the audio is AI-generated. This includes clear disclosure when users are interacting with an AI-generated voice.

In the United States, several states have introduced bills aimed at preventing deepfake misuse. For example, California already restricts the use of synthetic media in political advertising close to elections. Lawmakers are now considering extensions that would cover voice cloning in fraud cases.

Asia is moving quickly as well. China has issued guidelines on deep synthesis technologies that require consent and labeling of AI-generated content. These rules apply to synthetic voices, making providers responsible for preventing misuse.

The lack of global alignment creates uncertainty for companies working across borders. Until an international framework emerges, businesses must monitor local regulations carefully. They should also consider voluntary codes of conduct, since waiting for laws to arrive may expose them to reputational damage before legal risks even materialize.

Security and Detection Tools

As voice cloning becomes mainstream, security solutions are developing in parallel. The goal is not only to prevent fraud but also to help users distinguish real voices from synthetic ones.

One approach is digital watermarking. Invisible signals are embedded into AI-generated speech. These markers cannot be heard by humans but can be detected by software. Researchers believe this could become a standard to fight impersonation.
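One classic way to build such a marker is spread-spectrum watermarking: add a very quiet pseudo-random sequence derived from a secret key, then detect it later by correlating the audio with the same sequence. The sketch below is a minimal illustration under that assumption, not the scheme any particular vendor uses. The function names, key values, strength, and threshold are all hypothetical, and real systems shape the mark psychoacoustically so that it stays inaudible and survives compression.

```python
import math
import random


def keyed_sequence(key: int, length: int) -> list[float]:
    """Pseudo-random +1/-1 sequence that only holders of the key can regenerate."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(length)]


def embed_watermark(audio: list[float], key: int, strength: float = 0.005) -> list[float]:
    """Add the keyed sequence to the audio at low amplitude."""
    mark = keyed_sequence(key, len(audio))
    return [a + strength * m for a, m in zip(audio, mark)]


def detection_score(audio: list[float], key: int) -> float:
    """Correlate audio with the keyed sequence: near `strength` if marked, near 0 if not."""
    mark = keyed_sequence(key, len(audio))
    return sum(a * m for a, m in zip(audio, mark)) / len(audio)


def is_watermarked(audio: list[float], key: int, threshold: float = 0.0025) -> bool:
    return detection_score(audio, key) > threshold


# Two seconds of a 220 Hz tone stand in for AI-generated speech.
sample_rate = 16000
speech = [0.1 * math.sin(2 * math.pi * 220 * t / sample_rate) for t in range(2 * sample_rate)]
marked = embed_watermark(speech, key=1234)

print(is_watermarked(marked, key=1234))  # marked audio, correct key
print(is_watermarked(speech, key=1234))  # unmarked audio
print(is_watermarked(marked, key=9999))  # marked audio, wrong key
```

Detection works because the audio itself is uncorrelated with the keyed sequence, so its contribution averages toward zero over many samples while the embedded mark adds up. Longer clips therefore give more reliable detection.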

Another method is acoustic fingerprinting. Even the most advanced AI models leave tiny artifacts in the sound. Detection tools analyze frequencies and timing patterns to reveal whether speech is synthetic. Several cybersecurity companies now offer early versions of these services.

There are also authentication systems that combine voice recognition with additional factors, such as device ID or biometrics. This reduces the risk of cloned voices tricking voice-based security checks.
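The idea of combining factors can be sketched as a simple decision rule. This is a hedged illustration only: `LoginAttempt`, `authenticate`, the 0.8 threshold, and the use of a one-time code as a second factor are assumptions made for the example, and the voice score is presumed to come from some external speaker-verification model.

```python
import hmac
from dataclasses import dataclass


@dataclass
class LoginAttempt:
    voice_match_score: float  # 0.0 to 1.0, from a hypothetical speaker-verification model
    device_id: str
    one_time_code: str


def authenticate(attempt: LoginAttempt, trusted_devices: set[str], expected_code: str,
                 voice_threshold: float = 0.8) -> bool:
    """Require a voice match AND at least one independent factor."""
    voice_ok = attempt.voice_match_score >= voice_threshold
    device_ok = attempt.device_id in trusted_devices
    # compare_digest avoids leaking how many characters matched via timing.
    code_ok = hmac.compare_digest(attempt.one_time_code, expected_code)
    return voice_ok and (device_ok or code_ok)


trusted = {"phone-a1"}
genuine = LoginAttempt(voice_match_score=0.91, device_id="phone-a1", one_time_code="")
cloned = LoginAttempt(voice_match_score=0.97, device_id="unknown-77", one_time_code="000000")

print(authenticate(genuine, trusted, expected_code="482913"))
print(authenticate(cloned, trusted, expected_code="482913"))
```

In this sketch a cloned voice can score even higher than the real speaker and still be rejected, because the attacker controls neither a trusted device nor the one-time code.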

Finally, awareness training is a simple but powerful tool. Businesses are beginning to educate staff and customers about red flags in phone calls or voice messages. Combining human vigilance with technical solutions gives the strongest defense.

While no detection system is perfect, these tools represent the first line of protection. Organizations that adopt them early will be better prepared for the next wave of voice cloning threats.

Key Points to Remember About Voice Cloning

- Voice cloning uses AI to create synthetic replicas of human voices.

- The process includes data collection, model training, and synthesis.

- Applications span entertainment, healthcare, customer service, and education.

- Benefits include personalization, lower costs, and accessibility.

- Risks include fraud, privacy loss, and consent violations.

- The future will bring wider adoption, regulation, and better security.

- Natural-sounding voices tend to increase trust, while robotic ones reduce it.

- Media companies, banks, and healthcare startups already use voice cloning to cut costs and expand access.

Final Thoughts

Voice cloning shows how far artificial intelligence has come in replicating human communication. It offers major benefits in personalization, accessibility, and efficiency. It also presents risks that require urgent attention.

The coming years will likely bring more natural-sounding voices, integration with immersive technologies, and new regulations. The key challenge will be to balance innovation with ethics.

For those ready to explore, platforms like the Graphlogic Text to Speech API and the Graphlogic Generative AI and Conversational Platform provide entry points.

The responsibility rests with developers, regulators, and society to ensure that cloned voices improve lives while protecting human rights.

FAQ

What is voice cloning?

Voice cloning is the creation of a digital copy of a human voice using artificial intelligence.

How does voice cloning work?

It collects voice samples, trains algorithms to capture patterns, and generates new speech that resembles the original speaker.

Where is voice cloning used?

It is used in entertainment, accessibility tools, customer service, healthcare, and education.

Is voice cloning risky?

Yes. Risks include fraud, identity theft, and unauthorized use of personal data. According to NIST, these risks are growing quickly.

What should organizations do to use voice cloning responsibly?

They should obtain explicit consent, secure data, and disclose the use of AI voices. They should also consider watermarking to prevent misuse.