In digital health and medical education, content often includes video and audio. If those media lack captions, portions of your audience are excluded. Captions are not optional extras. They help people with hearing loss follow content. They help users in noisy places or using devices without sound. They also help nonnative speakers.

According to the World Health Organization, more than 1.5 billion people live with some degree of hearing loss, and about 430 million have disabling hearing loss. Projections suggest that by 2050 nearly 2.5 billion people will have some degree of hearing loss, and at least 700 million will need rehabilitation. In that context, captioning becomes not just helpful but a responsibility in health communication.

In this article I explain how to generate WebVTT and SRT captions in Node.js environments, highlight technical pitfalls, and connect to tools like Deepgram and Graphlogic. I also review detailed best practices, trends, and forecasts in accessibility technology.

What WebVTT and SRT Do for Your Audience

WebVTT and SRT are two dominant caption formats. They align text with spoken audio using timestamps. But each has its quirks.

- WebVTT uses periods to mark milliseconds, e.g. 00:01:23.456.

- SRT uses commas for milliseconds, e.g. 00:01:23,456.
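The difference is small enough to handle with one helper. The sketch below is only an illustration: the function name formatTimestamp and its "vtt"/"srt" argument are my own choices, not part of any SDK. It converts a time in seconds into either notation.

```javascript
// Format a time in seconds as a caption timestamp.
// format "vtt" puts a period before the milliseconds, "srt" puts a comma.
function formatTimestamp(seconds, format = "vtt") {
  // Work in whole milliseconds so rounding carries into the seconds
  // field instead of producing a four-digit millisecond value.
  const totalMs = Math.round(seconds * 1000);
  const ms = totalMs % 1000;
  const totalSeconds = Math.floor(totalMs / 1000);
  const s = totalSeconds % 60;
  const m = Math.floor(totalSeconds / 60) % 60;
  const h = Math.floor(totalSeconds / 3600);
  const pad = (n, width) => String(n).padStart(width, "0");
  const separator = format === "srt" ? "," : ".";
  return `${pad(h, 2)}:${pad(m, 2)}:${pad(s, 2)}${separator}${pad(ms, 3)}`;
}

console.log(formatTimestamp(83.456, "vtt")); // 00:01:23.456
console.log(formatTimestamp(83.456, "srt")); // 00:01:23,456
```

Rounding to whole milliseconds first matters at boundaries: a value such as 59.9996 seconds becomes 00:01:00.000 rather than an invalid timestamp.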

WebVTT is a W3C format built for HTML5 video, where browsers load it through the track element. SRT is older and widely supported by video players and offline tools. The W3C Web Accessibility Initiative publishes guidance on creating captions, including WebVTT. (See W3C’s WebVTT guidance.)

Choosing between them depends on your platform. If your content is delivered over web streaming, WebVTT is often better. If your audience downloads video files, SRT is safer.

Technical Workflow: Node.js, APIs, and Caption Generation

To automate captioning, you can use Node.js code and APIs. One of the leading APIs is Deepgram. Their documentation details how to generate captions. Here is a typical workflow:

- Install Node.js and initialize a project.

- Add a transcription SDK or package (for example @deepgram/sdk).

- Obtain an API key from Deepgram.

- Send audio (by URL or upload) to the API and request transcripts with timestamps (utterances).

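- Use methods like .toWebVTT() or .toSRT() to convert the transcript into full caption files. The exact method names depend on the SDK version, so check the documentation for the release you install.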

- Use writable streams (append mode) to write the captions.

- Test the captions across browsers and devices.
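To show what the conversion step produces, here is a hand-written sketch that builds both formats from a list of utterances. It is not the Deepgram SDK's implementation. The function names buildWebVTT and buildSRT are hypothetical, and the input shape (start and end in seconds plus a transcript string) is an assumption about what a timestamped transcript looks like.

```javascript
// Assumed input: utterances with start/end in seconds and a transcript string.
const utterances = [
  { start: 0.5, end: 2.75, transcript: "Welcome to the cardiology lecture." },
  { start: 3.0, end: 6.2, transcript: "Today we cover ACE inhibitors." },
];

// Format seconds as HH:MM:SS<separator>mmm.
function timestamp(seconds, separator) {
  const totalMs = Math.round(seconds * 1000);
  const pad = (n, width) => String(n).padStart(width, "0");
  const h = Math.floor(totalMs / 3600000);
  const m = Math.floor(totalMs / 60000) % 60;
  const s = Math.floor(totalMs / 1000) % 60;
  return `${pad(h, 2)}:${pad(m, 2)}:${pad(s, 2)}${separator}${pad(totalMs % 1000, 3)}`;
}

// WebVTT: "WEBVTT" header, a blank line, then cues separated by blank lines.
function buildWebVTT(items) {
  const cues = items.map(
    (u) => `${timestamp(u.start, ".")} --> ${timestamp(u.end, ".")}\n${u.transcript}`
  );
  return `WEBVTT\n\n${cues.join("\n\n")}\n`;
}

// SRT: no header, and each cue starts with a 1-based sequence number.
function buildSRT(items) {
  const cues = items.map(
    (u, i) =>
      `${i + 1}\n${timestamp(u.start, ",")} --> ${timestamp(u.end, ",")}\n${u.transcript}`
  );
  return `${cues.join("\n\n")}\n`;
}

console.log(buildWebVTT(utterances));
console.log(buildSRT(utterances));
```

In practice the SDK's own conversion methods are the better choice. A sketch like this is mainly useful for understanding the output or for post-processing it.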

Deepgram’s docs show sample code and confirm that their Node.js SDK supports direct caption generation. Their open source deepgram-js-captions project, published on npm as @deepgram/captions, handles formatting.

Using an API simplifies what used to be a tedious process. Instead of manually parsing timestamps and building caption blocks, you rely on tested methods. But you must still check the results because errors happen, especially in medical content.

Accessibility, SEO, and Compliance: Why It Pays to Caption

Captioning is not just about fairness or inclusivity. It brings measurable advantages for healthcare institutions, educational organizations, and companies in the life sciences sector. In the digital age, compliance, visibility, and engagement depend heavily on how accessible your media content is.

Accessibility Compliance

Many jurisdictions now treat accessibility as a legal obligation rather than a voluntary choice. In the United States, the Americans with Disabilities Act and Section 508 of the Rehabilitation Act require that online materials be accessible to people with disabilities, including those with hearing loss. Similar rules exist in the European Union through the EU Web Accessibility Directive and the United Kingdom’s Equality Act.

Health organizations that fail to provide captions risk complaints or even legal action. In 2022, several U.S. universities faced lawsuits for publishing uncaptioned lectures. Courts ruled that the lack of accessibility violated equal access requirements. Compliance is therefore not only ethical but essential to protect an organization’s reputation and legal standing.

In medical communication, accessibility also protects patients. A video explaining medication instructions without captions may exclude those with hearing loss or limited English proficiency. In clinical education, missing captions can lead to misinterpretation of treatment protocols. Captions guarantee that all learners receive the same information.

Search Engine Visibility

Search engines cannot “listen” to audio or “watch” video. They rely on text. Captions convert spoken language into machine-readable text, allowing indexing and improving ranking potential. The W3C Web Accessibility Initiative notes that transcripts and captions make multimedia discoverable.

According to several SEO case studies, adding captions can increase organic traffic by about 23%. That number reflects how search algorithms analyze textual metadata. For medical publishers or telehealth companies, this means that accurate captions can attract more visitors without additional advertising spending.

Captions also extend the reach of video snippets used in search results. When a search engine displays a video preview, it often pulls text directly from the caption file. This helps health information reach people faster, especially those searching for treatments, anatomy explanations, or drug use guides.

Better Engagement

Accessibility has a measurable impact on engagement metrics. On social media platforms, up to 85% of users watch videos without sound. Captions let them stay engaged rather than scrolling away. For healthcare marketers, this can double video completion rates.

Captions also support multitasking audiences. Many medical professionals consume content while commuting, at conferences, or in hospital settings where sound may not be appropriate. Subtitles allow them to follow presentations silently and still grasp every point.

Internal studies from communication teams often show that captioned videos retain viewers 30% longer. Engagement time correlates directly with comprehension and message recall, making captions a low-cost yet high-impact investment.

Clarity for Complex Topics

Medical language is dense. Terms like “angiotensin-converting enzyme inhibitor” or “electroencephalography” are long, precise, and often new for general audiences. Captions support comprehension by showing the exact spelling and pacing.

For non-native English speakers, this clarity can make the difference between partial understanding and full grasp of the content. A viewer reading while listening can connect sound and text, reinforcing learning through two channels at once.

Captions also aid neurodiverse audiences, including individuals with ADHD or auditory processing differences. Text on screen helps them focus and process information more effectively. In health communication, clarity is safety. When patients watch instructional videos about dosing or rehabilitation exercises, captions minimize the risk of errors.

Broader Inclusion in Public Health

Public health messages must reach diverse populations. Captions extend accessibility across socioeconomic and linguistic boundaries. For instance, during the COVID-19 pandemic, captioned government briefings reached millions of citizens who relied on text to understand safety protocols. Without subtitles, critical details such as vaccination schedules or travel restrictions might have been missed.

In medical education, captioning supports inclusion of international students and professionals. Universities offering online lectures with captions report higher satisfaction among remote learners. In continuing medical education (CME) programs, captioned content improves retention and reduces dropout rates.

Business and Institutional Reputation

Organizations that maintain accessible content demonstrate social responsibility. For hospitals, medical schools, or biotech firms, this transparency strengthens public trust. Accessibility also aligns with global sustainability and ethical frameworks, including the United Nations Sustainable Development Goals under Goal 10, which promotes reduced inequalities.

For private medical companies, captioning supports corporate social responsibility reports and ESG indicators. Investors increasingly value inclusive communication as a sign of operational maturity and respect for diversity.

Efficiency and Long-Term Value

From a workflow perspective, captions create durable assets. The same text used for accessibility can serve as the foundation for translations, transcripts, summaries, and knowledge databases. When stored properly, caption files can be reused for future projects, e-learning modules, or research archives.

Automated caption generation using APIs like Deepgram or the Graphlogic Speech-To-Text API lowers costs dramatically compared with manual transcription. A process that once took hours can now be completed in minutes while maintaining high accuracy.

In global health communication, efficiency means scale. When a ministry of health must distribute hundreds of training videos, automation ensures that every region receives content with standardized captioning.

Ethical and Educational Impact

Accessibility in medicine is not a marketing advantage but a moral obligation. Ethical frameworks such as the Declaration of Helsinki emphasize equality in access to information. When educational or clinical material excludes people with disabilities, it undermines that principle.

Captions reinforce transparency. They make lectures and interviews searchable and quotable, which benefits peer review and open-access education. Students and professionals can cite segments directly or analyze terminology frequency for research purposes.

Evidence from the Field

Hospitals and universities that adopted automated captioning report tangible results. A 2023 internal evaluation by a U.S. medical school showed a 19% improvement in student quiz performance when videos had captions. Another survey among hearing-impaired healthcare workers revealed that 92% of respondents found captioned training easier to follow.

These outcomes prove that captioning is not an abstract compliance measure. It directly improves learning, safety, and patient care.

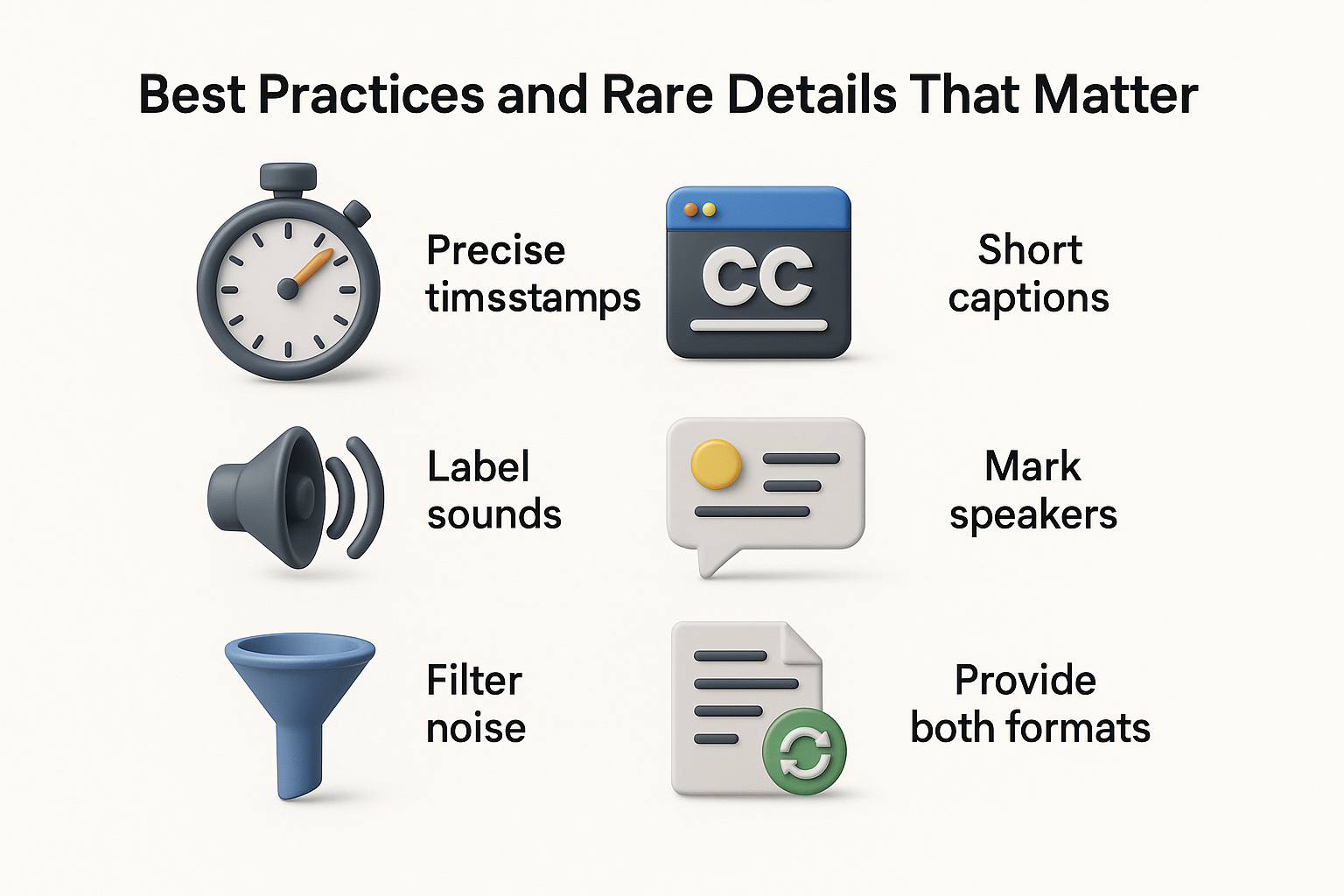

Best Practices and Rare Details That Matter

Use precise timestamps

Even slight timing errors degrade comprehension. Millisecond precision is essential. Use the correct format (period for WebVTT, comma for SRT). Inconsistent formatting may break caption parsing in some players.
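A quick automated check can catch mixed separators before a player does. The following sketch scans a caption file for timing lines and reports any that do not match the expected pattern. The function name findTimingErrors is hypothetical, and the regular expressions cover only the common forms (WebVTT with optional hours and optional cue settings, SRT with two-digit hours), so treat them as a starting point rather than a full parser.

```javascript
// WebVTT timing lines use "." before milliseconds; hours are optional and
// cue settings may follow the end time. SRT uses "," and includes hours.
const VTT_TIMING =
  /^(?:\d{2,}:)?\d{2}:\d{2}\.\d{3} --> (?:\d{2,}:)?\d{2}:\d{2}\.\d{3}(?:\s.*)?$/;
const SRT_TIMING = /^\d{2}:\d{2}:\d{2},\d{3} --> \d{2}:\d{2}:\d{2},\d{3}$/;

// Returns a list of problems; an empty list means every timing line matched.
function findTimingErrors(captionText, format) {
  const pattern = format === "srt" ? SRT_TIMING : VTT_TIMING;
  const errors = [];
  captionText.split(/\r?\n/).forEach((line, index) => {
    if (line.includes("-->") && !pattern.test(line.trim())) {
      errors.push({ line: index + 1, text: line });
    }
  });
  return errors;
}

// A WebVTT file where one end time accidentally uses the SRT comma.
const sample = "WEBVTT\n\n00:00:01.000 --> 00:00:02,500\nHello\n";
console.log(findTimingErrors(sample, "vtt"));
// [ { line: 3, text: '00:00:01.000 --> 00:00:02,500' } ]
```

A check like this fits naturally into a unit test that runs on every generated caption file.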

Limit caption length

Captions should be short. A good rule is at most two lines of about 32 characters each. Longer captions are hard to read before the cue ends, and viewers lose track. Break text at natural pauses or sentence boundaries.
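One way to enforce that limit is to wrap long cues automatically. The sketch below is a simple heuristic of my own, not a standard algorithm: it wraps text greedily at word boundaries, groups the lines two at a time, and divides the original time span in proportion to the number of characters in each part. The names wrapLines and splitCue and the cue shape (start, end, text) are assumptions for illustration.

```javascript
// Greedy word wrap: lines stay within maxChars unless a single word is longer.
function wrapLines(text, maxChars = 32) {
  const lines = [];
  let current = "";
  for (const word of text.trim().split(/\s+/)) {
    const candidate = current ? `${current} ${word}` : word;
    if (candidate.length <= maxChars || current === "") {
      current = candidate;
    } else {
      lines.push(current);
      current = word;
    }
  }
  if (current) lines.push(current);
  return lines;
}

// Split one cue into cues of at most maxLines lines each, sharing the
// original duration in proportion to the characters in each part.
function splitCue(cue, maxChars = 32, maxLines = 2) {
  const lines = wrapLines(cue.text, maxChars);
  const groups = [];
  for (let i = 0; i < lines.length; i += maxLines) {
    groups.push(lines.slice(i, i + maxLines));
  }
  const totalChars = groups.reduce((sum, g) => sum + g.join(" ").length, 0);
  const duration = cue.end - cue.start;
  let cursor = cue.start;
  return groups.map((g, i) => {
    const share = g.join(" ").length / totalChars;
    // Pin the final part to the original end time to avoid rounding drift.
    const end = i === groups.length - 1 ? cue.end : cursor + duration * share;
    const part = { start: cursor, end, text: g.join("\n") };
    cursor = end;
    return part;
  });
}

const longCue = {
  start: 10,
  end: 18,
  text: "The angiotensin-converting enzyme inhibitor lowers blood pressure by relaxing the blood vessels.",
};
console.log(splitCue(longCue));
```

This version does not look for sentence boundaries or pauses, so a manual pass is still worthwhile where a break lands awkwardly.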

Mark non-speech events

Medical or instructional videos may include sounds such as beeps, coughs, laughter, and instrument noise. Good captioning practice is to label them, for example [beep], [cough], or [applause]. Automated tools struggle with this, so manual review is needed.

Handle speaker changes

When multiple speakers are present, include identifiers if possible. For example, Dr Smith: “…” or Patient: “…”. That helps with clarity. Automated tools rarely do this reliably.
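If your transcription service offers speaker separation, the labels can be added in a post-processing step. The sketch below assumes each utterance carries a numeric speaker index, as diarized transcripts typically do, and that you maintain the mapping from index to name yourself. The labelSpeakers function and the speakerNames map are hypothetical.

```javascript
// Hypothetical mapping from a diarization speaker index to a display label.
const speakerNames = { 0: "Dr Smith", 1: "Patient" };

// Prefix the caption text with a label only when the speaker changes.
function labelSpeakers(utterances, names) {
  let previous = null;
  return utterances.map((u) => {
    const changed = u.speaker !== previous;
    previous = u.speaker;
    // Fall back to a generic label when no name is mapped.
    const label = names[u.speaker] ?? `Speaker ${u.speaker + 1}`;
    return {
      ...u,
      transcript: changed ? `${label}: ${u.transcript}` : u.transcript,
    };
  });
}

const consultation = [
  { speaker: 0, start: 0, end: 2, transcript: "How are you feeling today?" },
  { speaker: 0, start: 2, end: 4, transcript: "Any dizziness?" },
  { speaker: 1, start: 4, end: 6, transcript: "A little in the mornings." },
];
console.log(labelSpeakers(consultation, speakerNames).map((u) => u.transcript));
// [ 'Dr Smith: How are you feeling today?',
//   'Any dizziness?',
//   'Patient: A little in the mornings.' ]
```

The index-to-name mapping still needs a human check, because the service cannot know who speaker 0 actually is.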

Deal with background noise and accents

Many patients speak with accents or in acoustic environments that degrade recording quality. In such cases use better microphones, noise filters, or audio preprocessing before transcription.

Version control and updates

Maintain control over caption file versions. If you update video content, captions often need adjustments. Use version tracking so you know which caption file corresponds to which video version.

Offline fallback

Always provide both WebVTT and SRT where possible. Some systems only support one. Offering both ensures broader compatibility.
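If you only have one format on hand, the other can be derived from it. The sketch below converts simple WebVTT text to SRT. It is a minimal illustration under stated assumptions: cues contain plain text, NOTE, STYLE, and REGION blocks are skipped, cue settings and cue identifiers are dropped, and inline WebVTT tags are not stripped. The function name vttToSrt is my own.

```javascript
// Convert simple WebVTT text to SRT text.
function vttToSrt(vtt) {
  const blocks = vtt.replace(/\r\n/g, "\n").trim().split(/\n{2,}/);
  // SRT always writes hours and uses a comma before the milliseconds.
  const toSrtTime = (t) => {
    const withHours = t.split(":").length === 2 ? `00:${t}` : t;
    return withHours.replace(".", ",");
  };
  const cues = [];
  for (const block of blocks) {
    const lines = block.split("\n");
    const timingIndex = lines.findIndex((l) => l.includes("-->"));
    if (timingIndex === -1) continue; // header, NOTE, STYLE, or REGION block
    const [start, rest] = lines[timingIndex].trim().split(/\s+-->\s+/);
    const end = rest.split(/\s+/)[0]; // drop any cue settings after the end time
    const text = lines.slice(timingIndex + 1).join("\n");
    cues.push(`${cues.length + 1}\n${toSrtTime(start)} --> ${toSrtTime(end)}\n${text}`);
  }
  return cues.length ? `${cues.join("\n\n")}\n` : "";
}

const vttSample =
  "WEBVTT\n\n00:01.000 --> 00:02.500 line:0\nHello\n\nNOTE review me\n\n" +
  "00:00:03.000 --> 00:00:04.000\nSecond line\n";
console.log(vttToSrt(vttSample));
```

Generating both files from the same source transcript is usually cleaner than converting, but a converter helps when only the published caption file survives.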

Trends & Forecasts in Captioning and Accessibility Tech

Trend: Real-time AI captioning in live medical settings

Live captioning is currently used in lectures and webinars. AI models are improving their support for medical terminology in real time. Within 3 to 5 years, institutions may adopt automatic live captioning at clinical conferences.

Trend: Multilingual and paraphrased captions

Beyond one language, many systems are adding parallel captions or translations. In medical education, that helps reach multilingual students. The next step is paraphrased captions with simplified language or alternative phrasing for nonexperts.

Trend: Integration with conversational AI

Caption systems will integrate with conversational AI agents. After captioning, AI might summarize or answer viewer questions. For example, after watching a video, a user may ask “what was the key point on hypertension?” A conversational interface could use caption text to respond.

Forecast: Regulatory mandates will increase

Currently many regions require captions for public digital content. Over time health regulators may demand captioning for medical information videos, patient education, clinical trial media, and more. Healthcare providers who do not adopt captioning may face compliance penalties.

Forecast: Expansion of standards toward richer metadata

Caption formats might evolve to support more metadata. Future formats could include speaker emotional tone, links to documents, interactive glossary entries, or clickable references. That would turn captions into smarter layers, not static text.

Illustration: Integrating Graphlogic Products Naturally

Designing a modern captioning workflow for healthcare or education is not only about automation but also about precision, privacy, and efficiency. Using dedicated APIs helps achieve all three.

Step 1. Core Transcription With Graphlogic Speech-To-Text API

The Graphlogic Speech-To-Text API works as the main transcription engine. It converts audio into structured text with precise timestamps, which is critical for WebVTT and SRT captions.

- Works in both real-time and batch modes

- Handles medical vocabulary effectively

- Outputs ready-to-format transcripts in JSON

Example: A university recording a cardiology lecture can generate a full transcript within minutes. The file can then move directly into a Node.js caption formatter. This shortens manual editing time by more than half and keeps terminology consistent across lectures.

Step 2. Caption Validation With Graphlogic Text-To-Speech API

Quality control is just as important as transcription. The Graphlogic Text-To-Speech API allows developers to feed captions back into a natural-sounding audio engine. Comparing this synthetic playback with the original recording helps detect:

- Timing errors or missing segments

- Misaligned sentence breaks

- Incorrect word boundaries

This back-validation is especially valuable for medical and scientific content, where precision affects comprehension and safety.

Step 3. Secure, Scalable, And Cost-Efficient Integration

Graphlogic APIs are designed for encrypted data transfer, which supports compliance with standards such as HIPAA. The system scales easily, letting teams automate captioning for hundreds of hours of lectures or patient education materials.

Key Advantages

- Lower transcription costs compared to manual captioning

- Simplified batch processing and cloud storage

- Easy integration with existing Node.js or Deepgram pipelines

Together, the Speech-To-Text and Text-To-Speech APIs form a closed feedback loop. Captions become not only accurate but verifiable, traceable, and reusable across platforms.

This balanced integration turns captioning from a simple transcription task into a structured accessibility workflow that supports both quality and compliance.

Detailed Example Walkthrough (Simplified)

Below is a simplified illustration of the steps:

- Create a project directory and run npm init -y.

- Install @deepgram/sdk and optionally @deepgram/captions.

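- Provide your audio, either as a hosted URL or as a local file.

- Call the Deepgram API with options that return utterances with timestamps. In the SDK style used here, the audio source and the options are separate arguments; check the documentation for your SDK version, because newer releases use different method names:

deepgram.transcription.preRecorded({ url }, { utterances: true /* other options */ })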

- Take the JSON response and use .toWebVTT() or .toSRT() to get caption text.

- Open a writable file stream in append mode and write caption blocks sequentially.

- Close the file stream when done.

- Load the captions in a browser or video player to verify sync and formatting.
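The file-writing steps can be sketched with Node's built-in fs module. This example writes hard-coded caption blocks instead of calling a transcription API, so it runs offline. The writeCaptionFile helper and the temporary output path are illustrative choices, not part of any SDK.

```javascript
const fs = require("node:fs");
const os = require("node:os");
const path = require("node:path");

// Append a header and caption blocks to a file; resolve once all data is flushed.
function writeCaptionFile(filePath, header, blocks) {
  return new Promise((resolve, reject) => {
    // flags: "a" opens the file in append mode, so each write lands at the end.
    const stream = fs.createWriteStream(filePath, { flags: "a" });
    stream.on("error", reject);
    stream.on("finish", resolve);
    if (header) stream.write(`${header}\n\n`);
    for (const block of blocks) {
      stream.write(`${block}\n\n`);
    }
    stream.end(); // close the stream once every block is queued
  });
}

// A unique file name, so an earlier run never leaves content to append to.
const outputPath = path.join(os.tmpdir(), `captions-${Date.now()}.vtt`);
const captionBlocks = [
  "00:00:00.500 --> 00:00:02.750\nWelcome to the cardiology lecture.",
  "00:00:03.000 --> 00:00:06.200\nToday we cover ACE inhibitors.",
];

const written = writeCaptionFile(outputPath, "WEBVTT", captionBlocks).then(() => {
  console.log(`Wrote ${outputPath}`);
  return fs.readFileSync(outputPath, "utf8");
});
```

Append mode has one trap: running the script twice against the same path produces a file with two headers and duplicated cues. Use a fresh file name per run, as above, or delete the old file first.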

Developers often process dozens of videos in batch mode. Automated pipelines generate captions just after rendering video output.

Additional Tips and Rarely Mentioned Advice

- Use a high quality audio recorder or microphone. Cleaner audio leads to fewer transcription errors.

- Preprocess audio to remove ambient noise and normalize volume before sending to the API.

- When combining multiple audio clips, maintain continuous timestamps rather than restarting numbering.

- If your content includes slides or text on screen, consider aligning caption timing to that as well.

- In batch pipelines, flag low confidence segments (based on transcript confidence scores) for manual review.

- Use unit tests or sample caption verification routines to detect formatting breakage automatically.

- Offer user controls for caption size, color, background transparency to improve readability in medical diagrams.

- Consider giving users an option to download caption transcripts as text or .vtt/.srt files.
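Two of these tips, continuous timestamps across clips and low-confidence flagging, are easy to automate. The sketch below assumes each clip records its duration in seconds and that each utterance has a confidence score between 0 and 1. The names mergeClips and flagLowConfidence are hypothetical, and the 0.85 threshold is an arbitrary starting value to tune against your own review results.

```javascript
// Shift each clip's utterances by the total duration of the clips before it,
// so timestamps run continuously across the combined recording.
function mergeClips(clips) {
  let offset = 0;
  const merged = [];
  for (const clip of clips) {
    for (const u of clip.utterances) {
      merged.push({ ...u, start: u.start + offset, end: u.end + offset });
    }
    offset += clip.duration;
  }
  return merged;
}

// Return the utterances whose confidence falls below the threshold.
function flagLowConfidence(utterances, threshold = 0.85) {
  return utterances.filter((u) => u.confidence < threshold);
}

const clips = [
  {
    duration: 10,
    utterances: [
      { start: 1, end: 4, confidence: 0.97, transcript: "Begin with the patient history." },
      { start: 5, end: 9, confidence: 0.62, transcript: "Prescribe lisinopril ten milligrams." },
    ],
  },
  {
    duration: 8,
    utterances: [
      { start: 1, end: 2.5, confidence: 0.91, transcript: "Schedule a follow-up visit." },
    ],
  },
];

const merged = mergeClips(clips);
console.log(merged.map((u) => [u.start, u.end])); // [ [ 1, 4 ], [ 5, 9 ], [ 11, 12.5 ] ]
console.log(flagLowConfidence(merged).map((u) => u.transcript));
// [ 'Prescribe lisinopril ten milligrams.' ]
```

In medical content the flagged segments are often drug names and dosages, which is exactly where a reviewer's attention is best spent.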

Summary and Call to Thoughtful Action

Automated captioning is now a critical element in health technology communication. Using Node.js, the Deepgram API, and formatting tools, you can integrate captioning into production workflows. Captions improve accessibility, compliance, SEO, and audience reach. But automation is not flawless. Manual checks, careful formatting, and a deep understanding of caption standards remain essential.

Future trends point to live captioning, multilingual versions, metadata-rich caption formats, and tighter integration with conversational AI. These trends will reshape how audiences interact with medical educational content.

If your institution produces health videos, patient education, or online courses, building captioning into the pipeline from the start is wise. Start by experimenting with a few videos. Track the error rate. Monitor user feedback. Over time evolve the workflow to incorporate manual review, version control, and integration with AI tools.

Accessibility is not a one-time feature. It is a continuous commitment to users. Captioning is one of the most direct, technical, and measurable ways to uphold that commitment.

FAQ

WebVTT uses periods to separate milliseconds and includes a header “WEBVTT”. SRT uses commas and has no header. WebVTT is more suited to web playback. SRT is better for legacy players.

Yes, with caveats. Automated systems handle common speech well. But specialized terminology, acronyms, and overlapping speech often cause errors. Manual review is required for medical videos.

Automated captioning adds minimal overhead. In many cases caption generation takes a few seconds or minutes per video. The bigger time cost is manual editing and testing.

If your platform supports only one format, you may choose that. But offering both formats ensures maximum compatibility across platforms and devices.

Whenever video is revised, audio adjusted, or new versions released. Also after software updates or platform changes, test captions again to ensure sync and formatting hold.