AI agents are becoming essential in healthcare. They are not science fiction anymore. These systems automate tasks, analyze data, and improve workflows. Hospitals and clinics use them to reduce pressure on staff and improve patient care.

But what are AI agents? Why are they gaining attention in medicine?

This article explains their core functions, types, and where they work best. We will also look at trends, real-world use, and risks. And we will keep it simple.

What Are AI Agents in Healthcare?

An AI agent is a software program that works on its own. It receives input, analyzes it, takes action, and learns from results. It does not need human help at every step. In healthcare, this means less manual work and better use of time.

An AI agent can answer patient questions, assist with diagnostics, or check paperwork. One system can process voice notes from doctors and turn them into structured records. Another can scan thousands of insurance claims and flag errors in seconds.

According to the Mayo Clinic, AI is already supporting doctors with better diagnostics and faster triage.

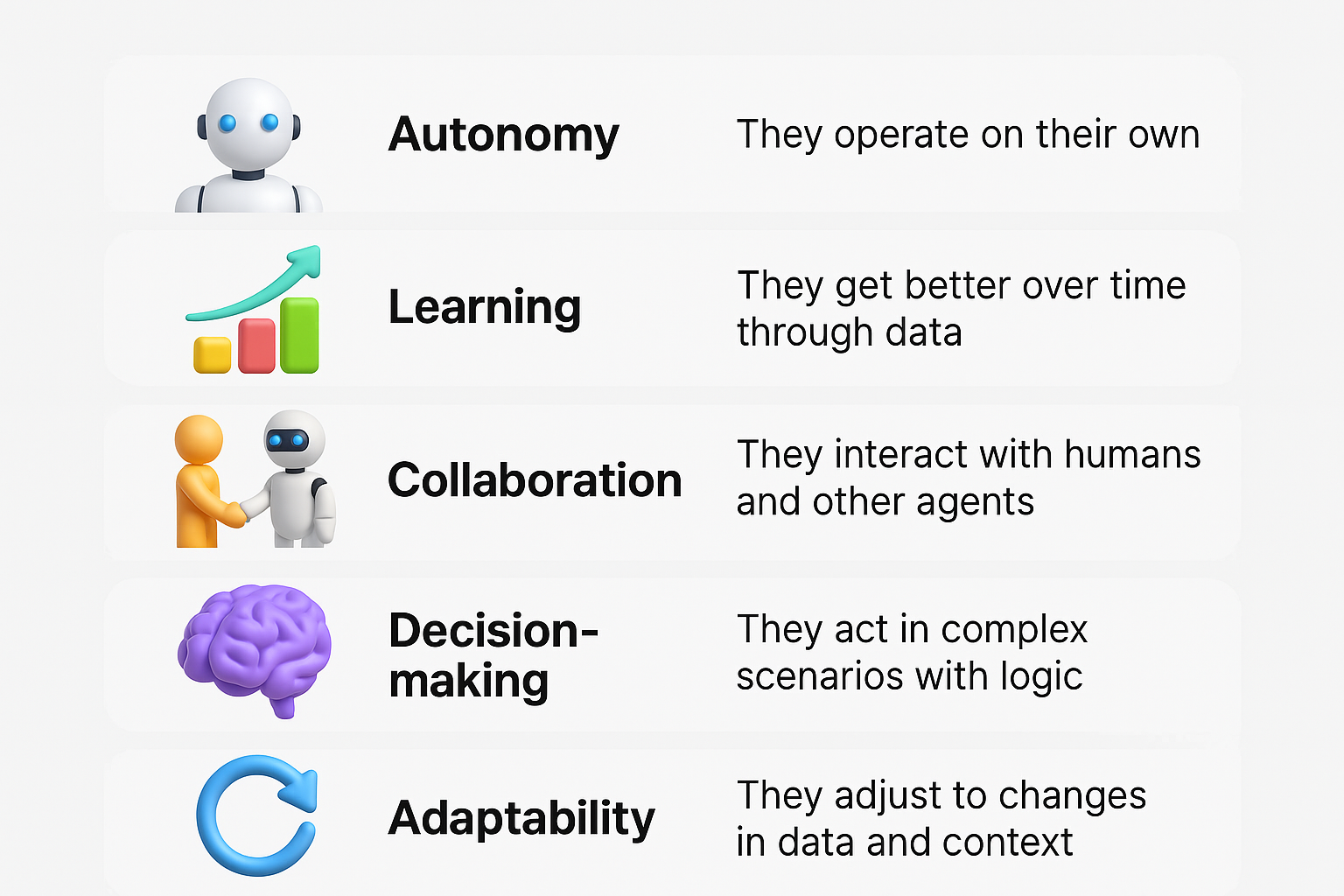

Core Features of AI Agents

AI agents are built to work without ongoing supervision. That makes them different from older software.

One AI agent might help with lab results; another might manage drug inventory in a clinic. This autonomy is what lets AI agents fit many roles in healthcare without adding complexity for staff.

The World Health Organization stresses that human oversight must remain in place: AI should support care, not replace it.

How AI Agents Work: The Four Main Steps

AI agents follow a simple loop. This cycle helps them adjust and improve.

1. Perception

They collect data from their environment. This can be voice, images, or medical records, such as a camera feed for patient monitoring or audio from a consultation. In clinical systems, perception often involves multimodal input, like matching a spoken symptom description with EHR history and recent vitals. Some agents integrate data from wearable biosensors, continuously tracking heart rate, SpO₂, or mobility status. Others use natural language processing to extract structured meaning from free-text clinical notes or referral letters. In diagnostic imaging, agents read not only the image content but also metadata such as scan time and the contrast agent used.
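A minimal Python sketch of the perception step, assuming a hypothetical symptom vocabulary and simple dictionary inputs: it merges a consultation transcript, a wearable reading, and an EHR problem list into one structured observation. The keyword matching is only a stand-in for real clinical NLP.

```python
from dataclasses import dataclass, field

# Hypothetical symptom vocabulary; a real agent would use a clinical NLP model
SYMPTOM_TERMS = {"chest pain", "shortness of breath", "dizziness", "fever"}


@dataclass
class Observation:
    patient_id: str
    symptoms: list[str]
    vitals: dict[str, float]
    history: list[str] = field(default_factory=list)


def extract_symptoms(text: str) -> list[str]:
    """Naive substring matching as a stand-in for NLP on free text."""
    lowered = text.lower()
    return sorted(term for term in SYMPTOM_TERMS if term in lowered)


def perceive(patient_id: str, transcript: str, wearable: dict, ehr_problems: list) -> Observation:
    """Merge a spoken-symptom transcript, wearable vitals, and EHR history."""
    # Drop missing sensor values instead of treating them as zero
    vitals = {name: float(value) for name, value in wearable.items() if value is not None}
    return Observation(patient_id, extract_symptoms(transcript), vitals, list(ehr_problems))


obs = perceive(
    "p-001",
    "Patient reports chest pain and some dizziness since this morning.",
    {"heart_rate": 112, "spo2": 93, "temperature": None},
    ["hypertension"],
)
print(obs.symptoms)  # ['chest pain', 'dizziness']
```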

2. Reasoning

They process the data using rules or machine learning. This step lets them understand context and find patterns, for example by comparing a current X-ray with past cases. Medical reasoning also includes prioritizing signals, such as separating a life-threatening drop in oxygen saturation from a false alarm caused by a detached sensor. Some agents use causal inference models to go beyond correlation and identify possible contributors to deterioration. Others combine structured logic with probabilistic layers, such as a model that adjusts its threshold for sepsis alerts based on patient age or comorbidities. Advanced systems also include explainable reasoning modules that generate brief rationales clinicians can review.
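The two reasoning examples above can be sketched in Python as follows. The 90% saturation cutoff, the signal-quality score, and the age and comorbidity adjustments are hypothetical placeholders chosen to show the shape of the logic, not clinical values.

```python
def classify_spo2_drop(spo2: float, signal_quality: float, quality_floor: float = 0.5) -> str:
    """Separate a real desaturation from a likely sensor artifact.

    signal_quality is a hypothetical 0-1 score derived from the oximeter waveform.
    """
    if spo2 >= 90:
        return "normal"
    if signal_quality < quality_floor:
        return "possible_artifact"  # e.g. detached probe: verify before alarming
    return "urgent"


def sepsis_alert_threshold(age: int, comorbidities: int, base: float = 0.60) -> float:
    """Lower the risk-score threshold for higher-risk patients (placeholder values)."""
    threshold = base
    if age >= 65:
        threshold -= 0.10
    threshold -= 0.05 * min(comorbidities, 3)  # cap the comorbidity adjustment
    return threshold


def should_alert(risk_score: float, age: int, comorbidities: int) -> bool:
    return risk_score >= sepsis_alert_threshold(age, comorbidities)


print(classify_spo2_drop(84, signal_quality=0.2))   # possible_artifact
print(should_alert(0.45, age=72, comorbidities=2))  # True (threshold about 0.40)
print(should_alert(0.45, age=40, comorbidities=0))  # False (threshold 0.60)
```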

3. Action

They make decisions and perform tasks, such as sending a report, alerting a doctor, or ordering a new test. Actions can be passive, like flagging a lab result, or active, such as triggering clinical workflows or writing draft discharge summaries. In hospitals, some agents auto-update medication plans based on lab changes, while others reroute non-urgent radiology requests during peak hours. A key factor is interoperability: actions must conform to existing clinical protocols and integrate with hospital systems through standards such as HL7 FHIR or vendor interfaces such as Epic's APIs. In robotic platforms, action involves physical responses, such as guiding surgical tools or adjusting infusion pump rates.
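As an illustration of a standards-based action, this sketch builds a minimal FHIR R4 `Flag` resource that marks a patient record for clinician attention. The patient ID and message are hypothetical; only the required `status`, `code`, and `subject` elements plus an optional `period` are filled in.

```python
import json
from datetime import datetime, timezone


def build_flag(patient_id: str, message: str) -> dict:
    """Build a minimal FHIR R4 Flag resource for clinician attention."""
    return {
        "resourceType": "Flag",
        "status": "active",                                  # required element
        "code": {"text": message},                           # CodeableConcept, text only
        "subject": {"reference": f"Patient/{patient_id}"},  # required element
        "period": {"start": datetime.now(timezone.utc).isoformat()},
    }


flag = build_flag("example-123", "Critical lab result: review medication plan")
print(json.dumps(flag, indent=2))
```

Under the FHIR RESTful API, a client would typically create this resource with a `POST` to `[base]/Flag`; authentication and vendor-specific requirements are outside this sketch.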

4. Learning

They use feedback to adjust future actions. If a doctor overrules a suggestion, the agent records that for next time. Learning can be supervised, semi-supervised, or reinforcement-based. A triage agent, for instance, may learn from physician overrides that certain symptoms in older adults warrant a different priority level than its initial model predicted. Over long-term use, agents refine their decision policies by monitoring outcomes, such as which flagged cases resulted in readmissions or adverse events. Some agents also benefit from federated learning, where models are updated using decentralized data across multiple clinics without moving patient information.
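Federated learning is often implemented with federated averaging (FedAvg): each clinic trains locally and sends only model parameters, which a coordinator averages in proportion to each site's sample count. The sketch below assumes models are plain lists of floats and shows a single aggregation round; real systems add secure aggregation and many rounds of training.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """FedAvg-style aggregation of client model weights.

    Each entry is (weights, n_samples). Only parameters leave each clinic;
    raw patient records stay local.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total  # weight each clinic by its data size
    return averaged


# Two hypothetical clinics: B has three times as much data as A
print(federated_average([([1.0, 2.0], 100), ([3.0, 4.0], 300)]))  # [2.5, 3.5]
```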

This cycle allows continuous improvement. It helps AI agents evolve instead of repeating errors. According to NIH, systems that learn over time are more useful in complex settings like hospitals.
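The whole four-step cycle can be sketched as one small class. Everything here is hypothetical: the scoring formula, the starting threshold, and the rule that a clinician override nudges the threshold by a fixed step. A real clinical agent would not adjust itself without validation and change controls.

```python
from typing import Optional


class TriageAgent:
    """Toy perceive -> reason -> act -> learn loop (illustrative only)."""

    def __init__(self, threshold: float = 0.5, adjust: float = 0.05):
        self.threshold = threshold  # scores at or above this escalate
        self.adjust = adjust        # how far one override moves the threshold

    def perceive(self, record: dict) -> dict:
        # Perception: pull the two inputs this toy model uses, with resting defaults
        return {"heart_rate": record.get("heart_rate", 80),
                "resp_rate": record.get("resp_rate", 16)}

    def reason(self, obs: dict) -> float:
        # Reasoning: hypothetical score in [0, 1], not a validated clinical model
        hr_part = max(0, obs["heart_rate"] - 90) / 40
        rr_part = max(0, obs["resp_rate"] - 20) / 10
        return min(0.5 * hr_part + 0.5 * rr_part, 1.0)

    def act(self, score: float) -> str:
        # Action: escalate or keep the case routine
        return "escalate" if score >= self.threshold else "routine"

    def learn(self, action: str, clinician_decision: str) -> None:
        # Learning: an overridden escalation raises the bar, a missed one lowers it
        if action == "escalate" and clinician_decision == "routine":
            self.threshold = min(self.threshold + self.adjust, 0.95)
        elif action == "routine" and clinician_decision == "escalate":
            self.threshold = max(self.threshold - self.adjust, 0.05)

    def run(self, record: dict, clinician_decision: Optional[str] = None) -> str:
        action = self.act(self.reason(self.perceive(record)))
        if clinician_decision is not None:
            self.learn(action, clinician_decision)
        return action


agent = TriageAgent()
print(agent.run({"heart_rate": 130, "resp_rate": 30}))  # escalate
print(agent.run({"heart_rate": 110, "resp_rate": 22}, clinician_decision="escalate"))  # routine
print(round(agent.threshold, 2))  # 0.45 after the override
```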

Types of AI Agents and Where They Fit

There are five key types of AI agents. Each fits different use cases in healthcare.

| Type | How It Works | Healthcare Use |
| --- | --- | --- |
| Reflex | Reacts to input with a set rule | Thermostat for ICU rooms |
| Model-based | Keeps internal memory of past states | Robot navigating a hospital wing |
| Goal-based | Plans actions to reach a target | Route planning for medical supply delivery |
| Utility-based | Chooses best outcome from many | Drug dosage optimization |
| Learning | Improves with data and outcomes | Chatbots that adapt to patient behavior |

Some clinics use reflex agents for temperature control. Others use utility agents in treatment planning software. Learning agents are already powering many hospital chat tools.
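The difference between the reflex and utility-based rows of the table can be sketched in a few lines of Python: a reflex agent applies a fixed rule, while a utility-based agent scores every option and picks the best. The setpoint, dose levels, and utility function are illustrative placeholders, not clinical data.

```python
def reflex_thermostat(temp_c: float, setpoint: float = 21.0, band: float = 0.5) -> str:
    """Reflex agent: a fixed condition-action rule with no memory or planning."""
    if temp_c < setpoint - band:
        return "heat"
    if temp_c > setpoint + band:
        return "cool"
    return "hold"


def dose_utility(dose: float) -> float:
    """Hypothetical utility in arbitrary units: benefit minus side-effect risk."""
    benefit = 1 - 1 / (1 + dose)  # diminishing returns as dose rises
    risk = 0.02 * dose ** 2       # grows quickly at high doses
    return benefit - risk


def utility_choice(options, utility):
    """Utility-based agent: score every candidate action and pick the best."""
    return max(options, key=utility)


print(reflex_thermostat(19.0))                          # heat
print(utility_choice([1, 2, 3, 4, 5], dose_utility))    # 2
```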

A study from Nature Medicine showed that learning agents improved diagnostic accuracy when used alongside doctors.

AI Agents Are Already Solving Real Problems

These are not experiments or future plans. AI agents are now deployed in public hospitals, private networks, research centers, and insurance systems. Their role is to handle specific, repetitive, or high-volume tasks faster and more accurately than traditional methods — while keeping clinicians in control.

Improving Admin and Billing Workflows

Back-office operations like claim checks, referral processing, and discharge documentation consume hours of clinical and administrative time every day. Delays and human errors in these workflows often result in payment rejections, compliance penalties, or patient dissatisfaction.

AI agents now manage these tasks end-to-end. They pre-fill forms using patient data, validate insurance in real time, and flag missing codes before submission. In some health systems, AI-assisted billing has cut average reimbursement delays by over 30%.
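A pre-submission check of the kind described above might look like this sketch. The claim fields are a hypothetical schema, and the regular expression only checks the general shape of an ICD-10-CM code (letter, digit, alphanumeric, then an optional dot and up to four characters); it cannot tell whether a code exists or is billable.

```python
import re

# Structural pattern only: it does not validate codes against the ICD-10-CM code set
ICD10_PATTERN = re.compile(r"^[A-Z][0-9][0-9A-Z](\.[0-9A-Z]{1,4})?$")
# Hypothetical claim schema used for illustration
REQUIRED_FIELDS = ("patient_id", "payer_id", "service_date", "diagnosis_codes")


def check_claim(claim: dict) -> list[str]:
    """Return a list of problems to fix before the claim is submitted."""
    problems = [f"missing {name}" for name in REQUIRED_FIELDS if not claim.get(name)]
    for code in claim.get("diagnosis_codes") or []:
        if not ICD10_PATTERN.match(code):
            problems.append(f"malformed diagnosis code {code!r}")
    return problems


claim = {
    "patient_id": "p-001",
    "payer_id": "",
    "service_date": "2024-05-01",
    "diagnosis_codes": ["E11.9", "12345"],
}
print(check_claim(claim))  # ['missing payer_id', "malformed diagnosis code '12345'"]
```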

The Graphlogic Generative AI Platform supports this work by transcribing clinician voice notes and turning them into structured documents. For hospitals, this means fewer transcription bottlenecks and better-quality data in the EHR — without burdening the clinical team.

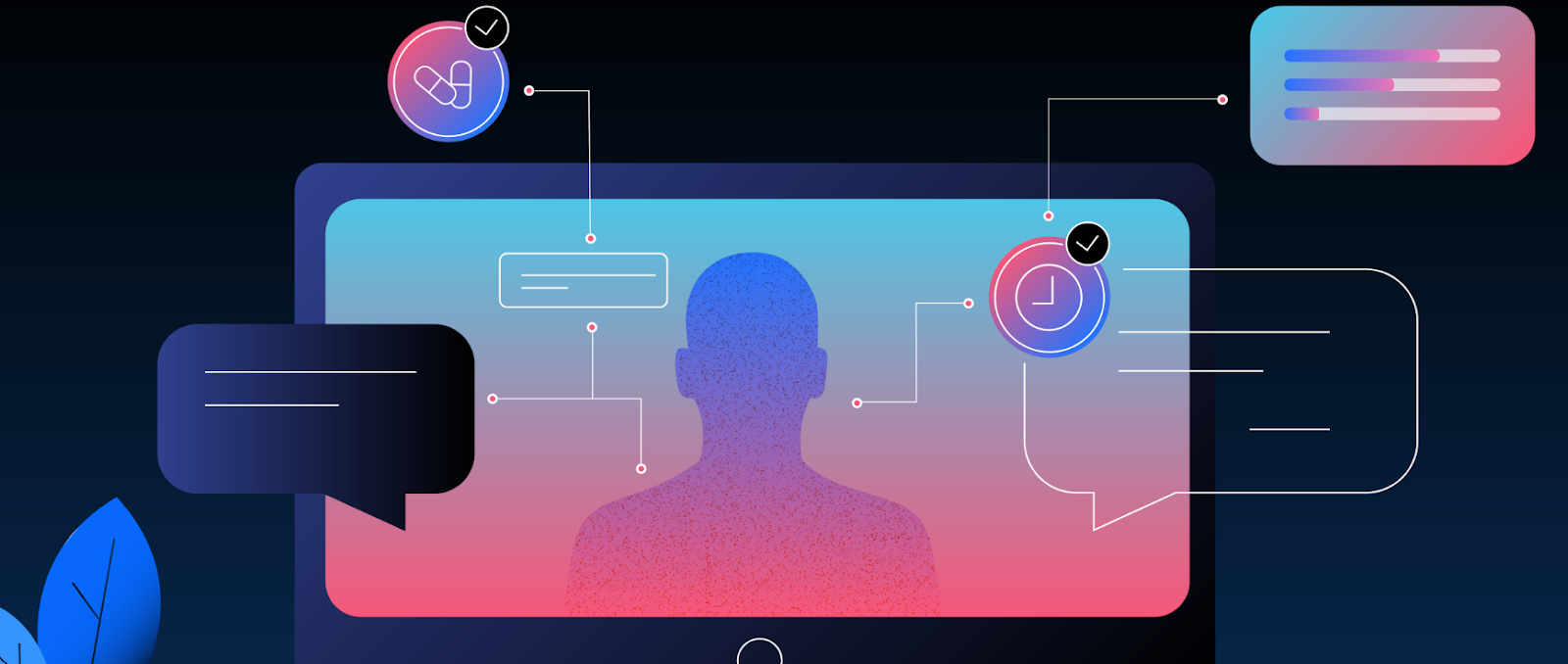

Enhancing Customer Service

Patient-facing systems powered by AI agents can now handle common service requests — such as appointment booking, medication reminders, and results explanations — through chat, voice, or SMS. These agents work 24/7, respond in multiple languages, and remember previous interactions.

They are not just scripted bots. Many use contextual memory and sentiment analysis to adjust tone and guide users through next steps. For example, an agent might notice patient anxiety and slow down the response, offering a contact point for live support.
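A rough sketch of tone adjustment, assuming a tiny hypothetical word list in place of a trained sentiment model: when anxiety cues appear, the agent switches to a calmer, slower reply style and offers a live contact.

```python
import re

# Tiny hypothetical lexicon; production systems would use a trained sentiment model
ANXIETY_CUES = {"worried", "scared", "anxious", "panicking", "afraid", "nervous"}


def anxiety_score(message: str) -> int:
    """Count anxiety cue words in a patient message."""
    words = re.findall(r"[a-z']+", message.lower())
    return sum(1 for word in words if word in ANXIETY_CUES)


def choose_reply_style(message: str, threshold: int = 1) -> dict:
    """Anxious messages get a calmer, slower reply plus a live-support option."""
    if anxiety_score(message) >= threshold:
        return {"tone": "calm", "pace": "slow", "offer_live_support": True}
    return {"tone": "neutral", "pace": "normal", "offer_live_support": False}


print(choose_reply_style("I'm really worried about my test results"))
```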

A case study from the Cleveland Clinic showed measurable improvement in patient satisfaction scores and a significant drop in wait times after implementing a virtual assistant system.

Supporting Diagnosis and Prediction

AI agents assist clinicians by analyzing structured and unstructured data — including imaging, labs, and notes — to support faster and more confident decisions. In radiology, pathology, and even dermatology, they highlight areas of concern, compare with historical data, and suggest likely conditions.

These systems do not replace radiologists or physicians. Instead, they act as secondary readers or prioritization tools that help surface urgent cases. In triage settings, AI agents reduce missed critical findings by flagging subtle anomalies.

At Stanford Medicine, a deep learning system reviewed chest X-rays and helped detect pneumonia with higher speed and comparable accuracy to radiologists. It also improved prioritization by placing severe cases earlier in the review queue — supporting faster clinical action.
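Once a model has produced an urgency score, reordering the queue is straightforward. This sketch uses Python's `heapq` with hypothetical study IDs and scores: it pops the most urgent study first and keeps arrival order for ties. Every study still reaches a radiologist; only the order changes.

```python
import heapq
from itertools import count


class ReadingWorklist:
    """Radiology worklist ordered by model-estimated urgency, then arrival order."""

    def __init__(self):
        self._heap = []
        self._arrival = count()  # tie-breaker preserves first-come order

    def add(self, study_id: str, urgency: float) -> None:
        # heapq is a min-heap, so negate urgency to pop the most urgent study first
        heapq.heappush(self._heap, (-urgency, next(self._arrival), study_id))

    def next_study(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


worklist = ReadingWorklist()
worklist.add("cxr-001", 0.10)
worklist.add("cxr-002", 0.92)
worklist.add("cxr-003", 0.40)
print([worklist.next_study() for _ in range(len(worklist))])  # ['cxr-002', 'cxr-003', 'cxr-001']
```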

Monitoring for Risk and Fraud

In large healthcare systems, detecting safety incidents, medication errors, or fraud requires constant surveillance of transactions, logs, and user actions. AI agents are now doing this in real time.

These agents flag suspicious billing patterns, prescription anomalies, or access violations before they cause financial or legal harm. For clinical risks, they monitor for inconsistent dosing, allergy mismatches, or early signs of sepsis across data streams.
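A minimal sketch of an order check for allergy conflicts and dose limits. The drug names, classes, and limits are placeholders invented for illustration; a real system would draw on a maintained drug knowledge base.

```python
from dataclasses import dataclass


@dataclass
class MedicationOrder:
    drug: str
    daily_dose_mg: float


# Placeholder reference tables: hypothetical drugs and limits, not clinical data
DRUG_CLASS = {"drug_a": "class_x", "drug_b": "class_y"}
MAX_DAILY_DOSE_MG = {"drug_a": 1000.0, "drug_b": 400.0}


def check_order(order: MedicationOrder, allergy_classes: set[str]) -> list[str]:
    """Return alerts for allergy-class conflicts and doses above the daily limit."""
    alerts = []
    drug_class = DRUG_CLASS.get(order.drug)
    if drug_class is None:
        alerts.append(f"unknown drug {order.drug!r}: route to pharmacist")
    elif drug_class in allergy_classes:
        alerts.append(f"allergy conflict: {order.drug} belongs to {drug_class}")
    limit = MAX_DAILY_DOSE_MG.get(order.drug)
    if limit is not None and order.daily_dose_mg > limit:
        alerts.append(f"dose {order.daily_dose_mg} mg/day exceeds limit of {limit} mg/day")
    return alerts


print(check_order(MedicationOrder("drug_a", 1500.0), allergy_classes={"class_x"}))
```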

The National Institutes of Health (NIH) has published work on AI agents trained to detect pharmacovigilance signals. These systems analyze post-market surveillance data to identify adverse drug reactions weeks before traditional reporting pathways surface them.
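The NIH work is not described here in enough detail to reproduce, so this sketch swaps in a classical pharmacovigilance screening method instead: disproportionality analysis with the proportional reporting ratio (PRR), which compares how often an event is reported with one drug against all other drugs. The counts are hypothetical, and the signal rule is simplified: the commonly cited Evans criteria also require a chi-squared statistic of at least 4, which is omitted here.

```python
def proportional_reporting_ratio(a: int, b: int, c: int, d: int) -> float:
    """PRR from a 2x2 table of spontaneous adverse-event reports.

    a: drug of interest with the event of interest
    b: drug of interest with any other event
    c: all other drugs with the event of interest
    d: all other drugs with any other event
    """
    if a + b == 0 or c == 0:
        raise ValueError("PRR is undefined for these counts")
    return (a / (a + b)) / (c / (c + d))


def is_signal(a: int, b: int, c: int, d: int, min_prr: float = 2.0, min_cases: int = 3) -> bool:
    # Simplified screening rule: enough cases and a PRR of at least 2
    return a >= min_cases and proportional_reporting_ratio(a, b, c, d) >= min_prr


# Hypothetical counts: the event is reported far more often with the drug of interest
print(round(proportional_reporting_ratio(20, 980, 100, 98900), 2))  # 19.8
print(is_signal(20, 980, 100, 98900))  # True
```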

In many hospitals, AI risk-monitoring agents are now required components of digital quality and compliance programs.

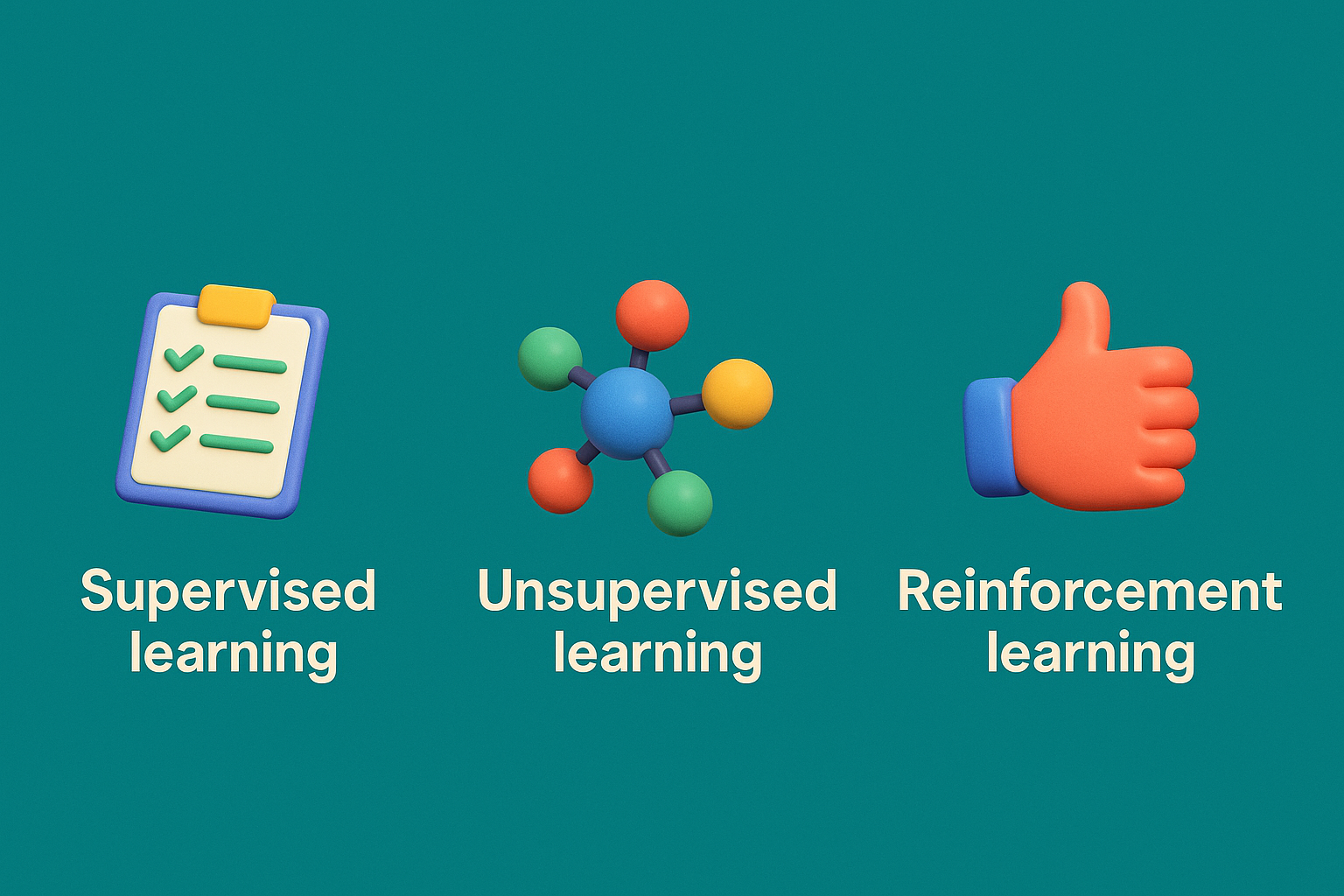

How Agents Learn and Improve

Learning agents evolve based on past performance. They do not need constant reprogramming. Instead, they look at results and adjust their next move.

There are three main learning methods:

- Supervised learning uses labeled data to make predictions

- Unsupervised learning finds patterns without labels

- Reinforcement learning rewards correct choices and adapts

In healthcare, all three are used. Diagnostic support tools often rely on supervised learning, training on cases with known outcomes. Unsupervised methods can group patients with similar records into subgroups. Some chatbots use reinforcement learning to improve their responses over time.
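Reinforcement learning in its simplest form can be sketched as an epsilon-greedy bandit: the agent usually picks the reply style with the best average reward so far and occasionally tries another. The reply styles and the 0/1 reward (for example, a patient marking an answer as helpful) are hypothetical.

```python
import random


class EpsilonGreedyResponder:
    """Epsilon-greedy bandit over candidate reply styles."""

    def __init__(self, arms, epsilon: float = 0.1, seed=None):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.counts = {arm: 0 for arm in self.arms}
        self.values = {arm: 0.0 for arm in self.arms}  # running mean reward per arm
        self.rng = random.Random(seed)

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)       # explore a random style
        return max(self.arms, key=self.values.get)  # exploit the best estimate

    def update(self, arm, reward: float) -> None:
        self.counts[arm] += 1
        # Incremental mean: new = old + (reward - old) / n
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]


bot = EpsilonGreedyResponder(["brief", "detailed"], epsilon=0.1, seed=42)
bot.update("detailed", 1.0)  # patient rated the detailed answer helpful
bot.update("brief", 0.0)     # patient rated the brief answer unhelpful
print(bot.values)
```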

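The FDA allows AI systems that update through learning, but it expects tight controls: manufacturers are asked to describe planned changes, and how they will be validated, in advance through a predetermined change control plan.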

Risks and Ethics: What Needs Fixing

AI in medicine also brings serious risks. These systems must be used carefully.

Technical Challenges

Poor data leads to poor results. Bias in training data can create unfair decisions. Many AI agents are also hard to explain. If a system says no to a treatment, we need to know why.

Privacy and Safety

AI agents handle sensitive data. They must meet privacy rules such as HIPAA in the United States. Misuse or leaks can cause real harm to patients.

Employment and Oversight

When AI automates work, jobs may shift or disappear. That needs planning. Also, humans must still oversee key decisions.

Clear rules and human review are essential. AI should assist, not replace, the health workforce.

Trends and Predictions: Where Things Are Going

The future of AI agents in healthcare is not about more features. It is about better focus and smarter integration.

- Local AI processing. AI will run directly on hospital devices. This will allow real-time response in ambulances or bedside monitors.

- Emotion-aware systems. AI agents may detect emotion in voice or text. This can help in mental health, where mood shifts are key.

- Multi-agent systems. Hospitals may run several agents that work together. One could monitor vital signs, while another handles scheduling.

- IoT integration. Medical sensors will feed data to AI agents, which will suggest changes or alert teams.

- AI-supported diagnostics. More hospitals will use agents to assist radiologists, not just automate tasks.
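A minimal sketch of the multi-agent pattern from the list above: a vitals agent publishes events to a shared in-memory bus, and a scheduling agent reacts to them. The class names, heart-rate limit, and booking policy are hypothetical; a real deployment would use a durable message broker.

```python
from collections import deque


class MessageBus:
    """In-memory stand-in for the messaging layer agents would share."""

    def __init__(self):
        self.queue = deque()

    def publish(self, topic: str, payload: dict) -> None:
        self.queue.append((topic, payload))

    def drain(self):
        while self.queue:
            yield self.queue.popleft()


class VitalsAgent:
    def __init__(self, bus: MessageBus, hr_limit: int = 120):
        self.bus = bus
        self.hr_limit = hr_limit  # placeholder limit, not a clinical cutoff

    def observe(self, patient_id: str, heart_rate: int) -> None:
        if heart_rate > self.hr_limit:
            self.bus.publish("deterioration", {"patient_id": patient_id, "heart_rate": heart_rate})


class SchedulingAgent:
    def __init__(self):
        self.bookings = []  # (patient_id, slot) pairs in priority order

    def handle(self, topic: str, payload: dict) -> None:
        if topic == "deterioration":
            # Hypothetical policy: put an urgent review ahead of routine slots
            self.bookings.insert(0, (payload["patient_id"], "urgent-review"))


bus = MessageBus()
vitals, scheduler = VitalsAgent(bus), SchedulingAgent()
scheduler.bookings.append(("p2", "routine-follow-up"))
vitals.observe("p1", 135)
vitals.observe("p3", 88)
for topic, payload in bus.drain():
    scheduler.handle(topic, payload)
print(scheduler.bookings)  # [('p1', 'urgent-review'), ('p2', 'routine-follow-up')]
```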

These trends point toward safer, more personalized care. But they also increase the need for human oversight and ethical design.

Final Thoughts

AI agents are tools. When used well, they help reduce overload, prevent mistakes, and make care more accessible. They are already solving real problems in hospitals around the world.

But they are not magic. They must be transparent, fair, and used with caution. Human oversight is not optional. Ethics must be built into the systems from the start.

The goal is not full automation. It is smarter collaboration between people and technology.

FAQ

Do AI agents replace doctors?

No. They support doctors but do not make final decisions in patient care.

Can AI agents make mistakes?

Yes. Errors happen, especially if the data is biased or incomplete. That is why oversight matters.

Are AI agents already used in hospitals?

Yes. Many hospitals already use AI for triage, billing, and diagnostics.

Do staff need special training to use AI agents?

Basic training in digital tools is enough for many users. Technical teams handle setup.

Which tools help with voice documentation?

The Graphlogic Speech-to-Text API helps transcribe voice input during clinical work. It integrates into common systems.