In 2025, artificial intelligence is no longer a buzzword in medicine. It is a clinical reality. From reading X-rays to predicting sepsis hours in advance, AI tools are used daily by doctors and nurses across the world. But this is not a utopia. The progress is real, yet uneven. AI has improved diagnosis, reduced paperwork, and even helped design new drugs, but it has also introduced new problems that clinicians must now manage.

This article looks at what works, what still fails, and what is around the corner. You will discover tested use cases, growing trends, and ethical concerns, all grounded in the day-to-day reality of modern healthcare. We also include links to working tools like Graphlogic’s Speech-to-Text API and Generative AI platform that help solve real-world challenges right now.

What AI Is Doing in Medicine Today

Artificial Intelligence helps healthcare professionals handle large-scale data more quickly and accurately. It powers tools that review medical images, assist with diagnosis, automate paperwork, and even simulate how a patient might respond to a specific drug.

AI is used across hospitals, research labs, and private clinics. It is integrated into radiology platforms, electronic health records, and wearable devices. AI also supports drug development by modeling biological interactions and predicting compound behavior. According to the WHO, AI-driven decision-making tools have already improved diagnostic accuracy in imaging by up to 30%.

However, the real power of AI lies in its ability to operate quietly in the background. Tools like Graphlogic’s Speech-to-Text API reduce time spent on note-taking. Others help hospitals match patients with open clinical trials based on real-time data, avoiding missed treatment opportunities.

Despite this progress, many tools still depend on high-quality data to function. Without consistent input, even the best models can misfire or remain underused.

AI in Diagnostics: More Speed, More Accuracy, Still Needs Supervision

AI systems now interpret CT scans, MRIs, and mammograms faster than most radiologists. Some tools highlight lung nodules or brain hemorrhages that might otherwise go unnoticed in a busy hospital. A 2024 meta-analysis in JAMA showed that properly trained AI models matched expert radiologists in diagnostic accuracy for multiple conditions.

AI also aids in pathology by reading biopsy slides and flagging abnormal cells. This reduces human error and speeds up diagnosis, especially in underserved areas with fewer specialists.

But even in the most advanced hospitals, AI does not act alone. Human review remains essential. Many tools are not certified for autonomous use, so a clinician must review their output before it informs patient decisions.

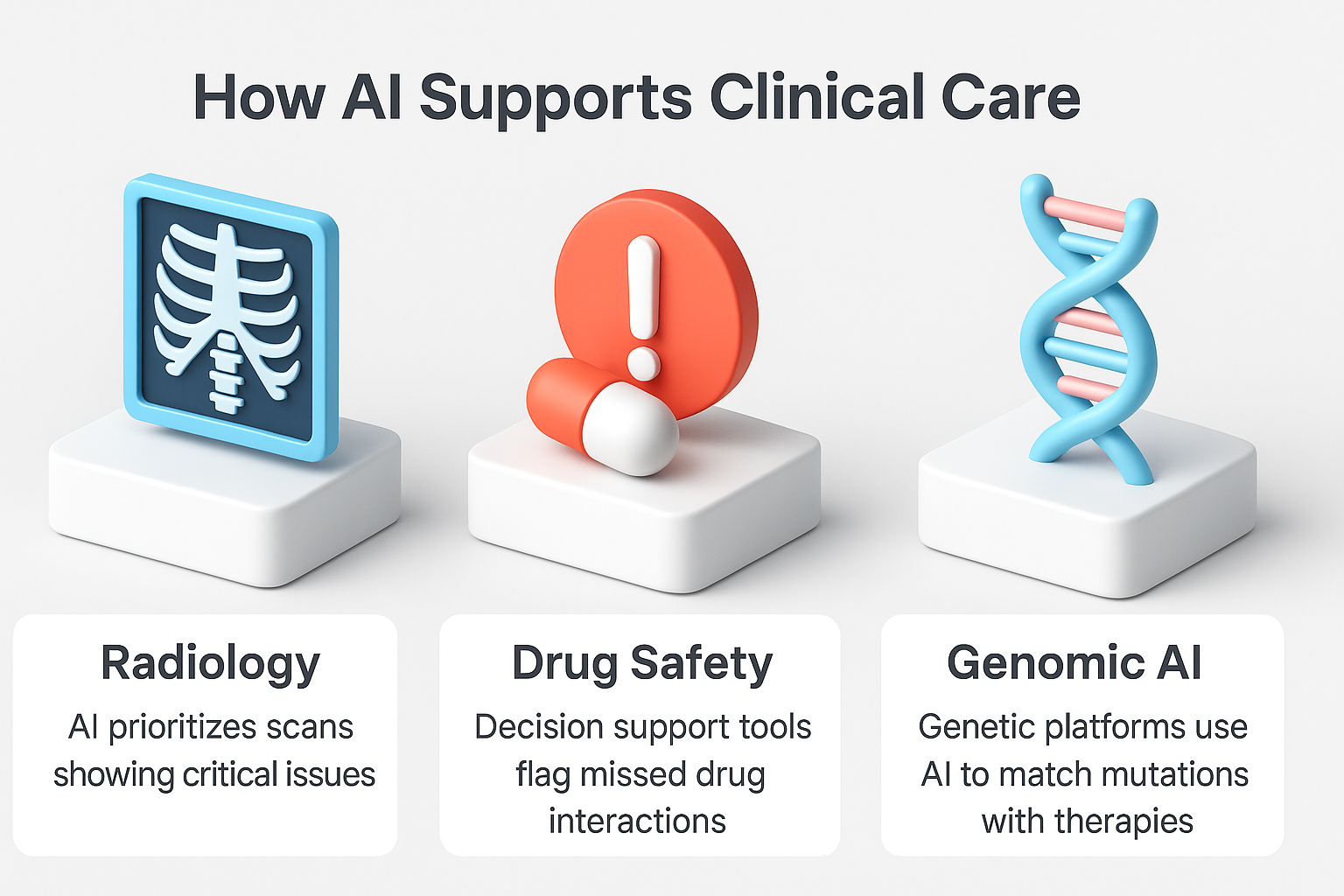

Some common diagnostic use cases:

- AI in radiology prioritizes scans showing critical issues

- Decision support tools flag missed drug interactions

- Genetic platforms use AI to match mutations with therapies

Hospitals using AI in imaging report 25% shorter turnaround times for radiology reports. However, clinicians also note an increase in false positives, which require re-checks and sometimes produce confusing results.
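Why do false positives pile up even when a tool is accurate? A short calculation helps. The sketch below applies Bayes' rule to work out what share of flagged scans are true findings. The sensitivity, specificity, and prevalence values are illustrative assumptions, not figures from any study or product.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Share of positive flags that are true positives (Bayes' rule)."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Illustrative numbers only: a sensitive tool screening for a rare finding.
ppv = positive_predictive_value(sensitivity=0.95, specificity=0.90, prevalence=0.02)
print(f"{ppv:.1%} of flagged scans are true positives")
```

With these assumed numbers, only about 16% of flags point to a real finding. When a condition is rare, most alerts come from healthy patients, which is why re-checks remain part of the workflow.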

Personalized Care: AI That Matches the Treatment to the Patient

AI allows clinicians to offer therapies designed for the individual, not just the population average. By processing lab results, genomic data, lifestyle patterns, and even social factors, AI models recommend targeted treatments that improve outcomes and reduce side effects.

In cancer care, AI helps match patients to immunotherapy based on biomarkers. In diabetes, machine learning forecasts glucose spikes to personalize insulin doses. A patient’s sleep, diet, and stress levels can also feed into AI tools that predict flare-ups in autoimmune conditions.

Pharma companies now use AI to model how drug molecules will behave in the human body. This can shorten the early stages of drug development by years. DSP-1181, developed by Exscientia and Sumitomo Dainippon Pharma, is one example: its AI-assisted discovery phase took under 12 months.

AI tools also assist in clinical trial design. They simulate patient responses to different protocols and help researchers avoid ineffective or unsafe combinations. The NIH has stated that AI will be essential to precision medicine development through 2030 and beyond.

But personalized medicine only works with strong data infrastructure. Inconsistent electronic health record formats and data silos often limit the reach of these tools. This remains one of the largest barriers to scaling personalized AI.

Smarter Monitoring and More Proactive Virtual Care

AI enables remote monitoring systems that track patients at home in real time. These systems use smart sensors to follow heart rate, oxygen saturation, blood pressure, and glucose levels. Algorithms detect dangerous trends before they become emergencies.

For example, AI can detect subtle changes in a heart failure patient’s breathing pattern days before symptoms appear. The doctor gets alerted early and adjusts the treatment plan. Studies show these systems reduce hospitalizations by 20% for chronic disease patients.
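To make the idea of "detecting a trend" concrete, here is a minimal sketch that compares a patient's most recent readings against their own earlier baseline. It is a simple statistical stand-in, not how any specific monitoring product works. The function name, window sizes, and threshold are all assumptions chosen for illustration, and the readings are invented.

```python
from statistics import mean, stdev


def flag_trend(readings, baseline_days=7, recent_days=3, z_threshold=2.0):
    """Return True when the recent average drifts well above the patient's own baseline."""
    if len(readings) < baseline_days + recent_days:
        return False  # not enough history to judge
    baseline = readings[-(baseline_days + recent_days):-recent_days]
    recent = readings[-recent_days:]
    spread = stdev(baseline)
    if spread == 0:
        # A perfectly flat baseline: any rise counts as a drift.
        return mean(recent) > mean(baseline)
    z = (mean(recent) - mean(baseline)) / spread
    return z > z_threshold


# Hypothetical nightly respiratory rates (breaths per minute).
stable = [16, 15, 16, 17, 16, 15, 16, 16, 15, 16]
rising = [16, 15, 16, 17, 16, 15, 16, 18, 19, 20]
print(flag_trend(stable), flag_trend(rising))  # False True
```

Measuring each patient against their own history, rather than a population norm, is what lets a system notice a small but sustained change. Real systems combine many signals and are clinically validated before use.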

At the same time, AI chatbots handle routine communication. They answer medication questions, confirm appointments, and remind patients to refill prescriptions. These bots reduce call volume and support patients after discharge when follow-up is most critical.

Telemedicine platforms often embed these systems. AI assists in triaging cases so that urgent issues reach doctors faster. It also provides basic self-care guidance for mild conditions, keeping ERs less crowded.

However, accuracy and language support remain challenges. Some bots misunderstand patient input, especially in multilingual settings. Strong oversight and well-designed fallback options are crucial.
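A fallback option can be as simple as a rule that hands the conversation to a person whenever the bot is unsure. The sketch below shows one possible routing rule. The `IntentGuess` type, the intent names, and the 0.8 threshold are hypothetical, and the confidence score is assumed to come from whatever classifier the chatbot uses.

```python
from dataclasses import dataclass


@dataclass
class IntentGuess:
    intent: str
    confidence: float  # 0.0 to 1.0, as reported by a hypothetical classifier


def route_message(guess: IntentGuess, supported_language: bool, min_confidence: float = 0.8) -> str:
    """Decide whether the bot answers or hands off to staff."""
    if not supported_language:
        return "handoff:interpreter"  # never guess in a language the bot was not built for
    if guess.intent == "urgent_symptom":
        return "handoff:nurse"  # urgent topics always go to a person
    if guess.confidence < min_confidence:
        return "handoff:staff"  # unsure: do not answer automatically
    return f"bot:{guess.intent}"


print(route_message(IntentGuess("refill_reminder", 0.93), supported_language=True))
print(route_message(IntentGuess("refill_reminder", 0.55), supported_language=True))
```

The order of the checks matters: language and urgency are tested before confidence, so a confident but risky answer never goes out unreviewed.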

Reducing Clinician Overload by Automating What Matters

Administrative overload is one of the top reasons doctors leave the profession. In 2024, more than 60% of clinicians reported burnout, citing documentation, billing, and inbox load as key stressors. AI offers tools to relieve this pressure.

Natural language processing tools convert doctor-patient conversations into structured clinical notes. Graphlogic’s Speech-to-Text API is one such tool. It captures medical information accurately and can reduce manual typing by up to 50%.

Other AI tools optimize appointment scheduling based on staffing and predicted patient volume. Some predict which patients are at high risk of no-shows and adjust accordingly. These tools reduce wasted slots and improve clinic flow.

Clinical decision support systems also help by providing reminders for screenings, dosage warnings, and infection control alerts. According to a recent Health Affairs review, hospitals using AI alerts in their ICUs have reduced preventable adverse events by 30%.

The result is more time for patient interaction and fewer errors. Still, doctors must validate all AI-generated suggestions. Over-reliance on automation can introduce blind spots.

Ethical Concerns, Operational Barriers, and Systemic Challenges

Despite clear benefits, the rollout of AI in healthcare is full of obstacles. These range from ethical dilemmas to legal uncertainty and operational inertia.

Bias is one of the biggest risks. When AI models are trained on datasets that underrepresent women, racial minorities, or rural populations, they may make inaccurate or harmful recommendations. This is not theoretical. Studies have found racial disparities in AI tools used for pain management and ICU triage.
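One basic way to check for this kind of bias is to measure a model's performance separately for each patient group instead of relying on a single overall score. The sketch below computes sensitivity (the share of real cases the model catches) per group. The group labels and records are synthetic and invented purely for illustration.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, actual_positive, predicted_positive) tuples."""
    hits = defaultdict(int)
    positives = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if predicted:
                hits[group] += 1
    # Groups with no actual positives are left out, since sensitivity is undefined for them.
    return {g: hits[g] / positives[g] for g in positives}


# Synthetic records, not real patient data.
records = (
    [("A", True, True)] * 9 + [("A", True, False)] * 1
    + [("B", True, True)] * 6 + [("B", True, False)] * 4
    + [("A", False, False)] * 5 + [("B", False, True)] * 2
)
rates = sensitivity_by_group(records)
print(rates)  # {'A': 0.9, 'B': 0.6}
```

In this invented example the model catches 90% of cases in group A but only 60% in group B, a gap that an overall accuracy figure would hide.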

Privacy is another major issue. Healthcare systems handle vast amounts of sensitive information. While tools claim to be secure, breaches remain common. Strong encryption, access logging, and compliance audits are needed to protect patient rights.

Regulation remains unclear in many regions. While the FDA has cleared or approved some AI systems, most are still considered experimental. This slows adoption and puts hospitals in legal grey zones.

Integration is often the most practical problem. Many hospitals operate with legacy systems that cannot support advanced AI tools. Staff may also resist change if training is lacking or tools interrupt workflows.

Accountability is crucial. AI cannot replace judgment. Hospitals must define clear lines of responsibility. Doctors should always know when a recommendation came from a machine, and patients deserve transparency.

Where Medical AI Is Heading Next

The next phase of AI in healthcare will be shaped by collaboration, not automation. AI tools will become smarter, but their value will depend on how well they fit into clinical workflows. By 2030, we will likely see AI assistants in every major care setting.

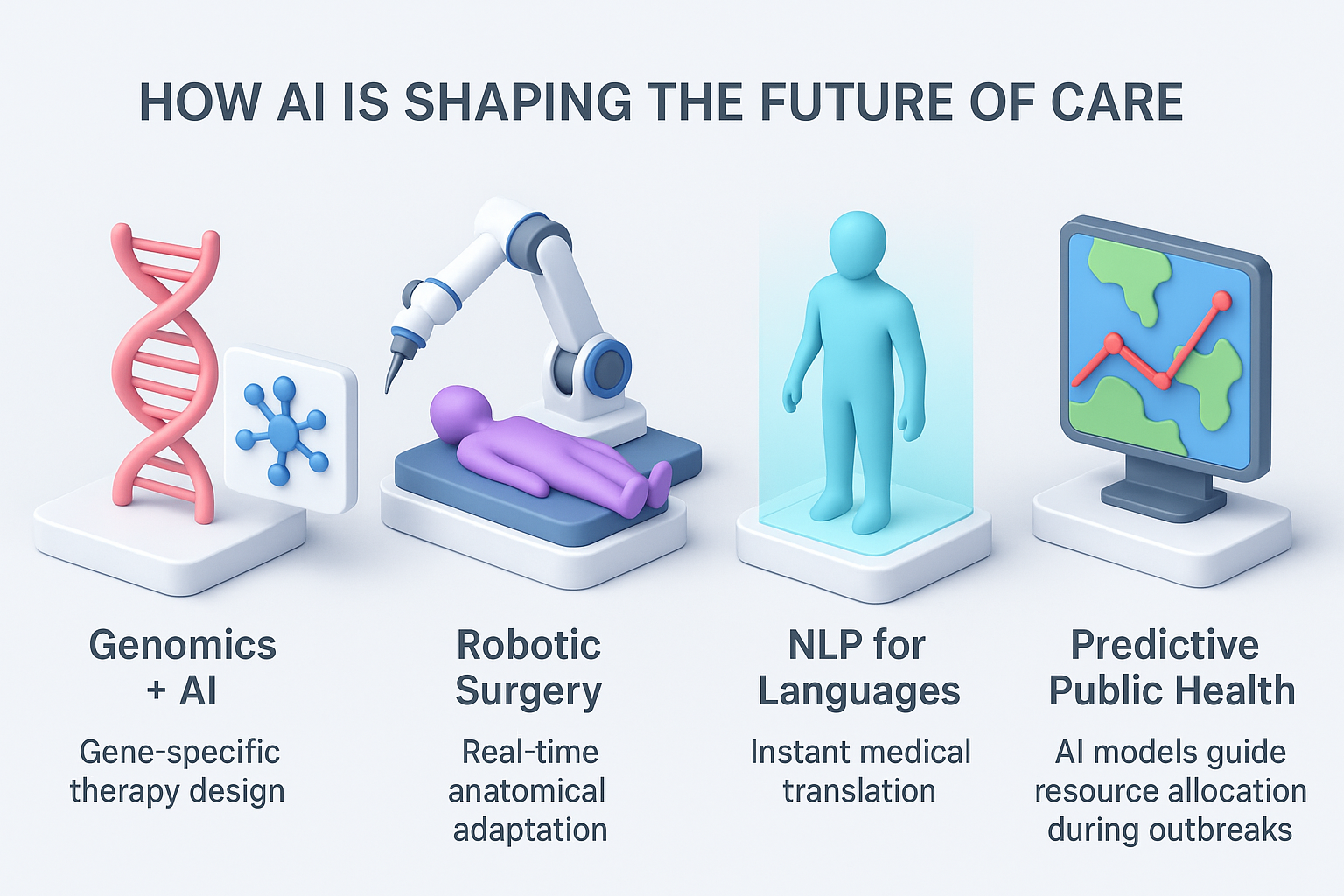

Emerging trends include:

- AI and genomics working together to design gene-specific treatments

- AI-driven robotic surgery that adapts in real time to anatomical variation

- Real-time translation of multilingual medical interactions through natural language processing

- Digital twins of patients, allowing simulation of treatment outcomes before administering drugs

- Predictive public health models that guide resource planning for pandemics or seasonal outbreaks

As Harvard Medical School suggests, the path forward is not to build smarter machines, but more human-centered systems. AI should reduce burden, improve fairness, and support better decision-making across every level of care.

FAQ

Will AI replace doctors?

No. AI tools are designed to assist, not replace. They support clinical decisions but do not take responsibility for outcomes.

Is AI more accurate than human clinicians?

Not always. While some AI tools match or exceed human accuracy in imaging, they still require human oversight and regular recalibration.

Who is responsible when an AI tool makes a mistake?

Current legal standards hold healthcare providers accountable, not the AI system. Responsibility lies with the clinicians who use these tools.

How can I find out whether AI is used in my care?

You can ask your doctor directly. Many hospitals also disclose this in consent forms. Patients have the right to know if AI is part of their care.

Can AI help detect diseases early?

Yes. Predictive models can flag early warning signs for conditions like stroke, cancer, or kidney failure. They allow for earlier intervention and better outcomes.