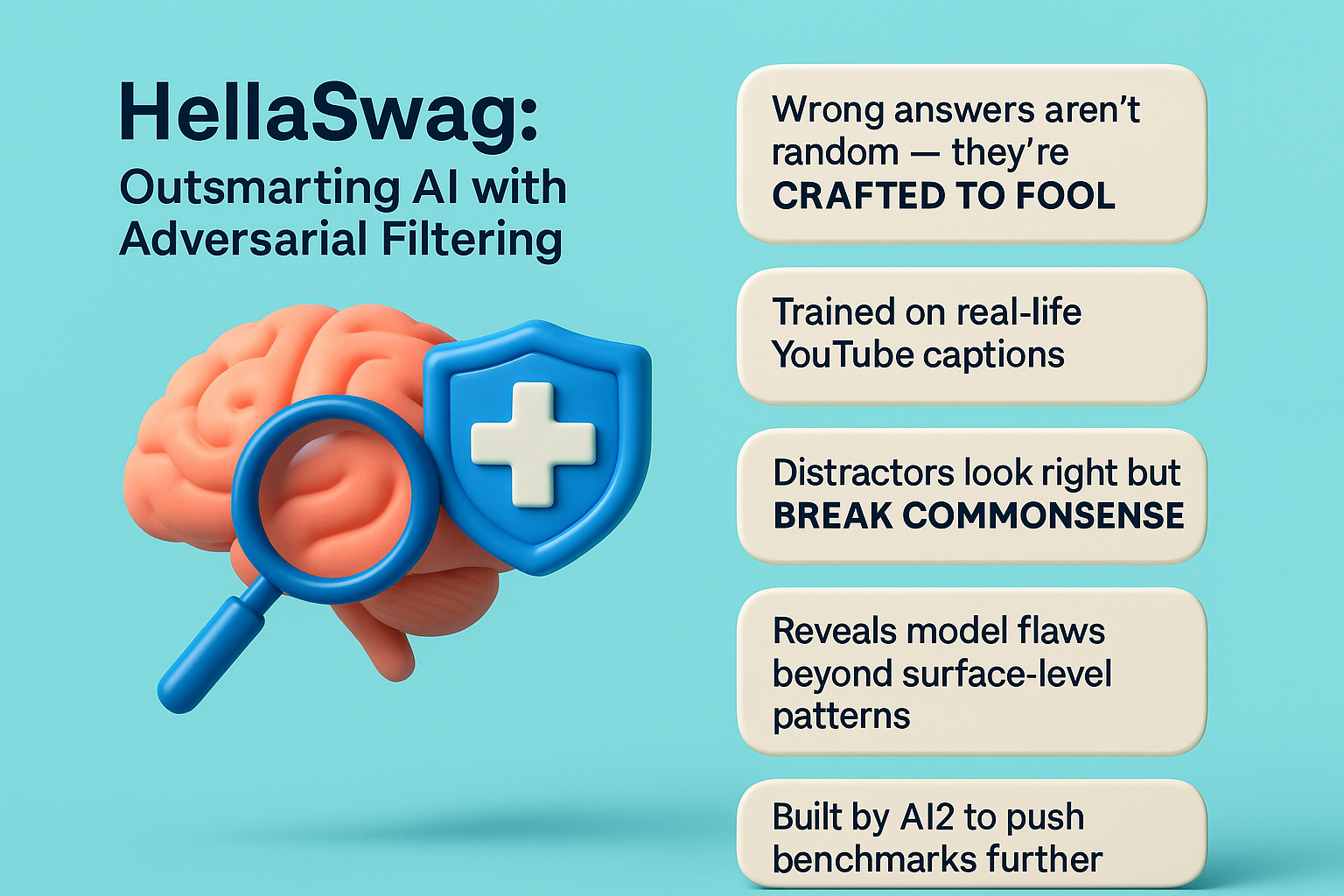

Commonsense is still one of the hardest skills for artificial intelligence to learn. As of mid-2025, even the most advanced large language models can fail basic logic tests that a ten-year-old would ace. This is where HellaSwag comes in. It is not just another benchmark. It is one of the few tools that exposes how far language models still are from true understanding.

In a world filled with multimodal systems and conversational agents used in healthcare, customer support, and creative tasks, this gap is critical. If AI systems cannot reason through basic real-world events, can they really be trusted with complex tasks? This article explains what makes HellaSwag different, how it evolved, and why it remains a gold standard for AI commonsense evaluation.

What HellaSwag Really Tests: The Missing Layer of Logic

HellaSwag focuses on something often missing from AI evaluation. It does not just test whether a model can complete a sentence. It tests whether that completion makes sense in the real world. The dataset contains thousands of examples based on video captions. Each snippet sets a scene. The model must choose the most likely outcome from four possible continuations.

The task is not about language fluency. It is about logic and context. Imagine a scene where someone walks into a kitchen holding a grocery bag. One option says the person begins unpacking food. Another says they take a nap. Both may be grammatically correct, but only one fits real-world behavior. HellaSwag is designed to detect whether AI can make this judgment correctly.

The format makes it harder than most benchmarks. All options are written to sound plausible. The distractors use similar vocabulary and phrasing as the correct answer. That eliminates shortcuts like relying on keyword overlap or sentence length. Instead, models have to reason about physical events and plausible outcomes. That is why humans still outperform machines in this task by a wide margin.

How Adversarial Filtering Made HellaSwag a Turning Point

One of the most important innovations in HellaSwag is the use of adversarial filtering. Unlike older datasets that relied on humans to write incorrect answers, HellaSwag uses a machine-in-the-loop system to generate distractors. These incorrect choices are automatically selected and refined until they are as convincing as possible. They may use accurate grammar and context-specific terms, but they subtly break commonsense.

This method exposes AI weaknesses in ways that traditional multiple-choice tests cannot. For example, a model trained only on statistical correlations might choose an answer simply because it matches certain word patterns. With adversarial filtering, that trick does not work. The wrong options are designed to confuse models trained this way.

This technique was introduced in 2019 by researchers at the Allen Institute for AI. The dataset was created using thousands of YouTube video caption segments. These captions cover everyday situations such as cooking, exercising, or assembling furniture. The adversarial endings are crafted using a technique called AF filtering. It stands for Adversarial Filtering. This process removes any distractors that are too easy and replaces them with stronger ones that challenge even modern models.

What a HellaSwag Question Looks Like and Why It Works

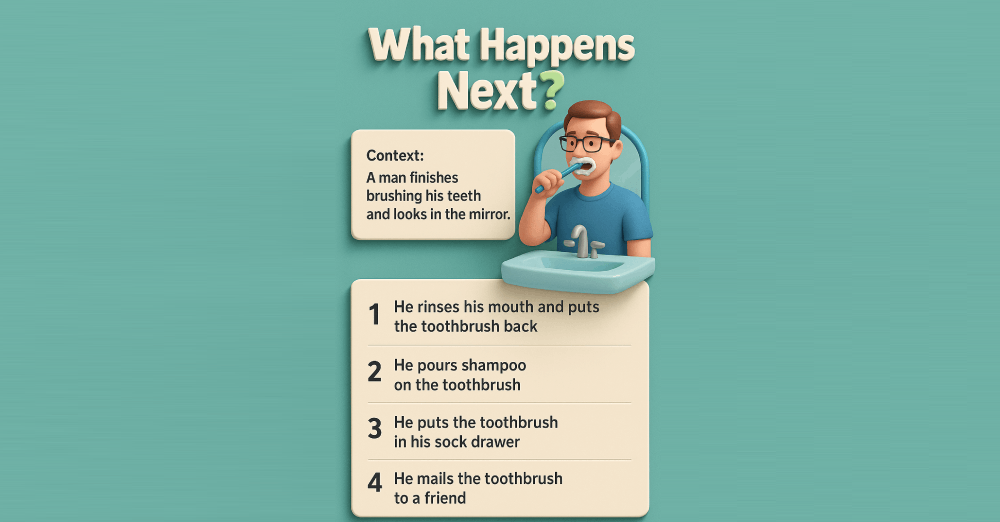

Every question in HellaSwag follows a structured format. It starts with a short snippet from a video description. This might describe someone doing a task, interacting with an object, or beginning an action. The next step is choosing the most logical continuation from four options. Three are intentionally misleading but carefully written to resemble a reasonable response.

Here is how a typical example might look:

Context: “A man finishes brushing his teeth and looks in the mirror.”

Options:

- He rinses his mouth and puts the toothbrush back

- He pours shampoo on the toothbrush

- He puts the toothbrush in his sock drawer

- He mails the toothbrush to a friend

To a human, the correct answer is obvious. But for AI systems that rely on surface-level pattern recognition, the plausible phrasing of all four options creates confusion.

Each distractor shares topic relevance and wording with the correct answer. They often use verbs that commonly appear with the main nouns. For example, “place,” “unwrap,” and “hand” might all appear in kitchen-related text. This design forces the model to consider the physical logic of the situation, not just the words.

How Language Models Perform and Where They Fail

In early versions of HellaSwag, models like BERT scored under 50%. These models could understand word relationships but had no grounding in physical reality. They struggled with tasks that required even basic causal or temporal logic.

By 2023, models like Falcon-40B achieved about 85.3% accuracy on HellaSwag. GPT-4, with few-shot reasoning and in-context learning, reached 95.3%. These results show big improvements. However, even top-performing models make errors that no human would. For instance, some models still pick answers where someone washes a pizza before cooking it, or where a person sings instead of jumping into a pool.

In 2025, we still see major open models failing at least 5% of the time on average. That means one in twenty responses can be nonsensical in real-world applications. This has serious implications. In healthcare settings, legal document summarization, or even customer support, such mistakes are not acceptable.

To improve real-world deployment, many companies now train models using adversarial benchmarks like HellaSwag. This includes tools such as the Graphlogic Generative AI platform, which integrates reasoning benchmarks during model evaluation and fine-tuning.

Where HellaSwag Fits Among Other Benchmarks

Not all benchmarks measure the same thing. HellaSwag focuses on real-world commonsense, specifically in physical situations. Other benchmarks, like ARC or CommonsenseQA, test scientific knowledge or general trivia. These tasks often use crowd-written or rule-based distractors. HellaSwag uses a machine-assisted method that adapts based on model performance, raising the difficulty curve over time.

Here is a comparison to understand the difference:

| Benchmark | Focus | Type of Challenge | Year |

| HellaSwag | Physical commonsense inference | Adversarial video caption continuations | 2019 |

| ARC | Science and logic | Rule-based multiple-choice | 2018 |

| CommonsenseQA | General commonsense reasoning | Crowd-written plausible distractors | 2019 |

One advantage of HellaSwag is that it continuously reveals weaknesses that models might mask in simpler benchmarks. In 2025, it is still one of the few tests where human accuracy is consistently higher than the best open-source models.

Why HellaSwag Matters in Real Applications

HellaSwag is not just useful in labs. It plays a growing role in industry. For instance, speech-to-text systems used in healthcare must recognize when a phrase deviates from normal behavior. AI that transcribes a doctor’s notes cannot confuse “takes a pill” with “throws the pill.”

Similarly, generative tools that produce content or hold conversations benefit from commonsense grounding. This is especially true for systems like Graphlogic Speech-to-Text API, which aim to support high-stakes environments with low tolerance for errors.

Training models with HellaSwag examples can reduce hallucinations, improve answer relevance, and boost trustworthiness. AI that understands context performs better when responding to follow-up questions or interpreting unclear input. This adds resilience, particularly when data is noisy or incomplete.

Industry studies suggest that fine-tuning on adversarial tasks improves robustness by over 15%, especially in multilingual and low-resource contexts. That makes it a critical component in modern AI pipelines.

What to Expect in the Future of Benchmarks

As AI systems grow more capable, benchmarks must evolve too. Static datasets quickly become outdated. The researchers behind HellaSwag have suggested adding multimodal elements, such as combining video, text, and audio. This would bring benchmarks closer to real-world reasoning needs.

Future updates might include:

- Tasks requiring long-form reasoning across multiple steps

- Video-based prompts with visual scenes and actions

- Audio cues that influence text decisions

- Mixed-domain reasoning across scientific, emotional, and physical logic

More importantly, future datasets must include more diverse cultural scenarios. Current benchmarks mostly reflect Western patterns of behavior. Expanding this would help create AI systems that work globally, not just in English-speaking markets.

Some initiatives, like VCR (Visual Commonsense Reasoning), are already experimenting with this direction. Multimodal datasets are expected to grow 35% year-over-year, according to AI research group Epoch, as demand for generalist AI models rises.

Final Takeaway

Commonsense is not an optional feature. It is a foundation for any reliable AI system. HellaSwag continues to be a key tool for testing this skill. By pushing models to go beyond grammar and into logic, it reveals how much work remains. If you are building or deploying AI in 2025, you cannot afford to skip commonsense benchmarks like HellaSwag.

To build models that think like people, we have to train and test them on what people really know. And HellaSwag is still one of the most effective ways to do that.

FAQ

HellaSwag is a dataset that tests how well AI can understand physical events using text. It presents a real-world scene and asks the model to pick the most likely next step. It uses adversarial filtering to make the wrong answers tricky, which makes it more challenging than traditional tests.

Language models are good at recognizing patterns in text but not always good at understanding real-life logic. Commonsense tasks like HellaSwag show where models fail when predictions must make sense in the real world.

They use them to test and improve AI models before deployment. This is especially important in high-risk fields like medicine, law, or finance. Using adversarial benchmarks can reduce the chance of AI making illogical or unsafe suggestions.

Yes. The dataset is open source and available on AllenAI’s HellaSwag page. It includes evaluation scripts and documentation.

As of 2025, closed-source models like GPT-4.5 and Claude 3 Opus score near human-level. Open models such as Falcon-180B and Mistral-mixtral series also perform well with fine-tuning.