Speech recognition is no longer a futuristic gimmick. In 2025, it is powering hospitals, customer support teams, and AI agents — often without users even realizing it. Over 90% of global businesses are now investing in voice AI, according to Statista. But with so many speech-to-text APIs on the market, choosing the right one is harder than it looks.

This guide explains what matters in 2025, how the top tools compare, and what to watch out for before committing to any provider. If voice tech is on your roadmap, these are the details that will save time, money, and future headaches.

What a Speech-to-Text API Does and Why It Matters

A speech-to-text API is a programming interface that converts spoken language into written text using advanced algorithms. At its core, it uses Automatic Speech Recognition (ASR), a technology built on deep learning models trained on vast amounts of audio data. These models learn to recognize patterns in spoken words, account for differences in accent, handle background noise, and understand complex sentence structures.

Most APIs are cloud-based and receive audio input in formats such as WAV, MP3, or FLAC. They process the file, detect speech segments, and return text in real time or batch mode. Real-time transcription is essential for applications like live captioning or voicebots. Batch transcription is used for post-processing recorded audio, such as in legal or healthcare documentation.

Some APIs also offer hybrid deployment options. For instance, Graphlogic’s platform supports both cloud and private on-premise integration, which is valuable for companies with strict data governance rules. You should also know that some tools use end-to-end neural networks, while others use modular pipelines with separate acoustic and language models. The choice affects customization, speed, and accuracy.

The best systems are not just transcription engines. They also embed natural language understanding features that allow applications to extract meaning, sentiment, and speaker roles. These additional features unlock the potential of voice data far beyond simple note-taking.

Key Features You Need to Compare in 2025

Evaluating speech APIs requires looking at much more than just price or brand. Here is a detailed look at the most important features to consider in 2025, based on real developer feedback and verified technical documentation.

Accuracy in Real Environments

Speech accuracy varies across vendors and depends heavily on factors like accent variation, background noise, speaker overlap, and domain-specific vocabulary. Industry leaders like Deepgram have published accuracy rates as high as 92% for American English in noisy conditions. However, these numbers can drop significantly for less supported languages or dialects.

To assess accuracy properly, test the API using your own recordings. This includes real calls, medical notes, or interviews from your field. Make sure to evaluate word error rates in both ideal and difficult conditions. Also verify support for disfluencies like filler words, hesitations, and mid-sentence corrections. These are common in real speech and not all models handle them well.

Real-Time and Streaming Support

Live transcription is essential in customer service, accessibility tools, and AI assistants. You should look for APIs that support streaming input with sub-second latency. This means the audio is processed as it is received, not after the full recording ends. Graphlogic’s Speech-to-Text API offers continuous streaming with dynamic speaker detection and low latency buffering.

Some providers also offer word-level timestamps, which allow developers to align audio and text precisely. This is essential for video subtitling and live captioning. Check the documentation to ensure the timestamps are included in the API’s response schema.

Multilingual and Dialect-Specific Support

Speech technology is expanding to support more languages, but quality still varies. Google’s Speech-to-Text supports over 120 languages, while Deepgram supports around 30. OpenAI’s Whisper supports 57, according to its model card, but its performance is inconsistent across low-resource languages.

If your product serves global audiences, prioritize APIs that offer dialect-specific models or allow custom pronunciation dictionaries. Also verify how the system handles code-switching, which is when speakers shift between languages in one sentence. This is common in international contexts and often mishandled by generic models.

Advanced Functional Features

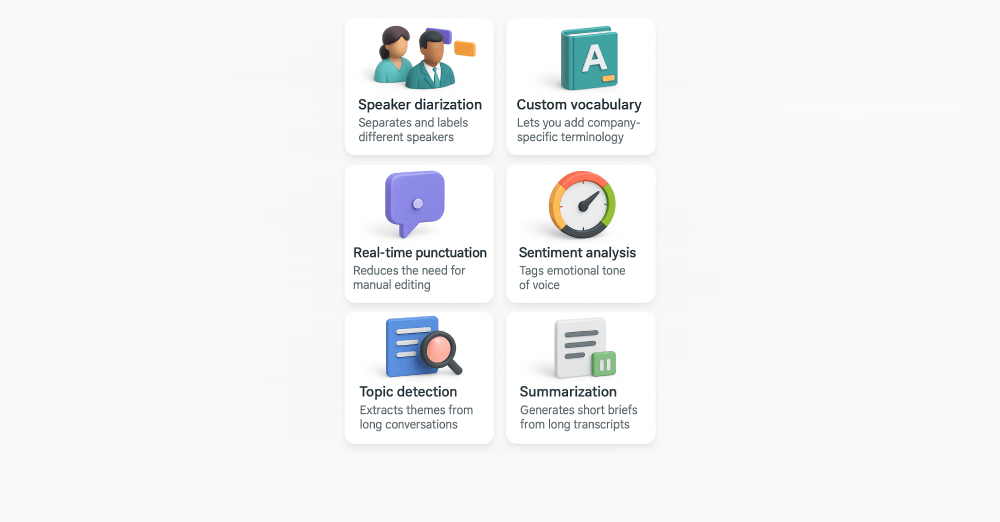

In 2025, the most competitive APIs go beyond transcription. Look for these additional features:

- Speaker diarization: Separates and labels different speakers.

- Custom vocabulary: Lets you add company-specific terminology.

- Real-time punctuation: Reduces the need for manual editing.

- Sentiment analysis: Tags emotional tone of voice.

- Topic detection: Extracts themes from long conversations.

- Summarization: Generates short briefs from long transcripts.

These features are increasingly expected in areas like legal, education, and healthcare. For example, Graphlogic’s generative AI platform includes summarization and text-to-speech options, enabling bidirectional interaction.

Practical Use Cases Across Industries

Speech APIs are being used in surprising ways across various sectors. Here are the most relevant examples for 2025 and how these tools are being applied beyond basic transcription.

Healthcare and Medical Dictation

Doctors often dictate patient notes, which are later transcribed manually. This is time-consuming and error-prone. With medical-specific APIs, doctors can now transcribe clinical conversations in real time, even with specialized vocabulary. Tools like Deepgram’s medical model and Graphlogic’s customizable engine support integration into EHR systems and digital scribes.

Transcriptions also help improve billing accuracy by capturing all spoken procedures and diagnoses. Some hospitals report 30% faster documentation and 40% reduction in administrative errors after API integration.

Contact Centers and Customer Experience

Contact centers record thousands of hours of calls. APIs convert them into text for analysis. Features like speaker labels, keyword detection, and emotion tracking help assess agent performance and customer satisfaction.

Live transcription also powers real-time coaching tools, where supervisors receive alerts based on trigger phrases. For example, a finance company used speaker diarization to separate agent and customer speech, achieving a 25% improvement in compliance monitoring.

Voice-Driven Interfaces

Smart assistants, kiosks, and virtual agents rely on fast speech recognition. Here, latency and multilingual support are critical. These applications are usually powered by APIs embedded in mobile apps or devices. In 2025, the demand for embedded AI is rising with edge computing, where processing happens locally on-device.

Graphlogic’s Voice Box API is one such product that allows developers to build interactive voice agents with flexible architecture and event-based triggers.

Comparing Top Providers by Key Metrics

Here is a simplified comparison of major players in the space. All pricing reflects 2025 publicly available data where possible.

| Provider | Strengths | Weaknesses | Price per Hour |

| Deepgram | Fast, customizable, on-prem supported | Fewer languages than competitors | $0.46 |

| OpenAI Whisper | Multilingual, free and open source | No real-time, no customization | Free (infra costs) |

| Google STT | Broad language set, mature API | Premium cost, complex pricing | High ($1.20+) |

| Azure STT | Good Microsoft ecosystem integration | High latency, expensive | $1.10 |

| AssemblyAI | Fast, includes summarization and analytics | Moderate accuracy, fewer languages | $0.65 |

These prices can vary based on enterprise plans and volume usage. Make sure to read each vendor’s documentation and SLA for current rates and support policies.

Integration Tips and Scalability Advice

When planning to embed speech APIs in production systems, ease of integration is essential. Look for SDKs in popular languages such as Python, JavaScript, and C#. Clear API docs and open developer forums also help reduce onboarding time.

Scalability is another concern. Verify how the service handles peak demand and simultaneous streams. Choose providers with proven reliability in large-scale deployments. Deepgram and Microsoft Azure both offer enterprise-grade SLAs with over 99.9% uptime guarantees.

Also consider infrastructure flexibility. Some use cases may require hybrid setups or on-premise deployments for compliance. Graphlogic supports both, which is critical in sectors like healthcare and legal.

The Future of Voice Recognition in 2025 and Beyond

Several important developments are shaping the future of speech recognition technology.

- Transformer-based models: These neural architectures are improving context awareness and long-form transcription.

- Multimodal transcription: Tools are beginning to combine audio, video, and text for more accurate interpretation.

- Edge deployment: Local processing reduces latency and improves privacy.

- Low-resource language expansion: APIs are starting to support African, Indigenous, and minority languages.

- Real-time summarization: Summaries generated as people speak are now possible in enterprise use.

As APIs grow smarter, they are becoming essential to automation and accessibility in business. AI assistants, smart workflows, and cross-language collaboration all benefit from these advancements.

Final Thoughts

Speech-to-text APIs have gone from experimental tools to production-grade infrastructure. Choosing the right provider depends on understanding your workflow, testing real-world data, and comparing support, price, and accuracy.

Whether you are improving healthcare documentation, building a voicebot, or analyzing customer calls, speech APIs are now a foundational part of modern applications. In 2025, investing in the right solution is not just a tech upgrade. It is a competitive necessity.

FAQ

Look for APIs with domain-specific models and high tolerance for background noise. Deepgram and Graphlogic both support medical terms and offer speaker diarization, which is useful for group consultations.

Whisper is powerful for research and supports many languages. However, it is not real-time, lacks support, and must be self-hosted. Consider infrastructure costs before committing.

Prices vary. Deepgram offers $0.46 per hour, while Google and Azure exceed $1.00. You should also account for data transfer and API call limits.

Only some providers offer offline or on-device options. Graphlogic supports private deployment, which is suitable for regulated environments.

Use your own audio samples and compare word error rate, punctuation accuracy, and speaker separation. Avoid relying solely on benchmarks from vendors.