The ARC Benchmark remains one of the most reliable methods for measuring reasoning in large language models. In 2025 it is even more relevant because the new ARC-AGI-2 tasks have exposed reasoning gaps in models that otherwise perform strongly. Humans score above 60 % on these tasks, while leading AI systems often stay in the single digits.

This clear gap suggests that many models still rely on pattern matching instead of deep comprehension. Such weaknesses can have serious consequences in sectors like healthcare, law, and education, where reasoning mistakes are costly. Even on the original ARC science-question benchmark, the best open-source performer cited here, Falcon-40B, reaches only 61.9 %, which indicates that there is still a long way to go.

Introduction to the ARC Benchmark for LLMs

ARC (the AI2 Reasoning Challenge) was created by AI2 researchers in 2018 to measure reasoning beyond fact recall. The benchmark contains 7 787 multiple-choice science questions. About 5 200 belong to the Easy Set, which focuses on straightforward retrieval. About 2 600 form the Challenge Set, which requires multi-step reasoning.

The ARC Corpus adds 14 million science-related sentences for context. This forces models to connect facts rather than rely on exact matches. This combination of questions and corpus makes ARC a realistic measure of reasoning in areas like clinical decision support or technical troubleshooting.

Structure and Dataset

The ARC dataset is deliberately divided into components that test different dimensions of model ability. The Easy Set contains approximately 5 200 multiple-choice science questions. These are designed to check whether a model can handle straightforward factual queries, such as recalling a scientific term, identifying a basic property of matter, or recognizing the correct definition of a process. A model that struggles here is unlikely to succeed on more advanced tasks. The Easy Set serves as a baseline that quickly reveals any major gaps in general knowledge.

The Challenge Set is significantly more demanding, with about 2 600 questions that require multi-step reasoning. These are the questions that both a retrieval-based algorithm and a word co-occurrence algorithm answered incorrectly when the dataset was built. Many of them combine concepts from multiple disciplines. For example, a single question might involve understanding a physical principle, applying it to a biological context, and then interpreting an experimental result. The Challenge Set is where most models struggle, since it requires logical synthesis rather than direct retrieval. These questions often mirror the complexity of real-world decision-making, such as diagnosing a patient with overlapping symptoms or solving an engineering problem with incomplete data.

Supporting both sets is the ARC Corpus, which contains 14 million science-related sentences drawn from textbooks, research papers, and educational websites. The corpus is not just background material. It is a vital resource for models that rely on retrieval-augmented generation or contextual learning. Access to this evidence enables a system to find relevant facts, cross-reference them, and form a coherent answer. Developers who fine-tune models on the ARC Corpus often see improvements in the Challenge Set because the model learns to connect information spread across multiple contexts.
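To make the retrieval idea concrete, here is a minimal sketch of ranking corpus sentences by word overlap with a question. The three sentences are invented stand-ins rather than real ARC Corpus entries, and `tokenize` and `retrieve` are hypothetical helper names. A production system would more likely use a proper search index or embeddings, and this toy version does not filter stopwords.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k sentences that share the most tokens with the question."""
    question_tokens = Counter(tokenize(question))

    def overlap(sentence: str) -> int:
        # Multiset intersection counts tokens present in both texts.
        return sum((question_tokens & Counter(tokenize(sentence))).values())

    return sorted(corpus, key=overlap, reverse=True)[:k]


# Invented sentences standing in for the ARC Corpus (assumption).
corpus = [
    "Plants use sunlight to make food through photosynthesis.",
    "Friction converts kinetic energy into heat.",
    "Chlorophyll absorbs sunlight in plant leaves.",
]
top = retrieve("Which process do plants use to make food from sunlight?", corpus, k=2)
for sentence in top:
    print(sentence)
```

The retrieved sentences would then be passed to the model as supporting evidence alongside the question.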

| ARC Subset | Size | Focus |
| --- | --- | --- |
| Easy Set | ~5 200 questions | Fact retrieval |
| Challenge Set | ~2 600 questions | Advanced reasoning |
| Corpus | 14 million sentences | Training and evidence |

By using both the Easy and Challenge Sets together, developers can measure not only raw knowledge but also the reasoning skills required to apply it effectively. This dual-structure approach helps identify whether a model’s strengths are balanced or skewed toward either simple recall or complex inference. In practice, consistent performance across both sets is rare, and tracking the gap between Easy and Challenge scores can reveal how well a model might perform in unpredictable, high-stakes environments such as medicine, law, or scientific research.
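As a rough illustration of tracking that gap, the sketch below computes accuracy on each set and the difference between them. The function name `easy_challenge_gap` and the counts passed to it are hypothetical and do not come from any real model run.

```python
def easy_challenge_gap(
    easy_correct: int, easy_total: int, challenge_correct: int, challenge_total: int
) -> dict[str, float]:
    """Return accuracy on each set and the Easy-minus-Challenge gap."""
    easy_acc = easy_correct / easy_total
    challenge_acc = challenge_correct / challenge_total
    return {
        "easy": easy_acc,
        "challenge": challenge_acc,
        "gap": easy_acc - challenge_acc,
    }


# Made-up counts for illustration only (assumption).
report = easy_challenge_gap(
    easy_correct=90, easy_total=100, challenge_correct=60, challenge_total=100
)
print(f"Easy: {report['easy']:.1%}, Challenge: {report['challenge']:.1%}, "
      f"gap: {report['gap']:.1%}")
```

A large positive gap points to a model that recalls facts well but struggles to combine them.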

ARC in the Model Evaluation Landscape

ARC is unique among benchmarks because it focuses on a model’s ability to integrate information and apply it to complex problems. While many evaluations measure factual recall or broad multitask capabilities, ARC is designed to assess reasoning in a scientific context where correct answers often depend on connecting multiple ideas. This makes it particularly relevant for industries where precision and logical consistency are critical.

For example, a model may score highly on a factual benchmark like MMLU by memorizing information but still fail to solve an ARC Challenge Set problem that requires synthesizing evidence from several domains. The difference between these capabilities is not academic. It directly impacts how well a model performs in real-world situations, such as interpreting technical documentation or troubleshooting a system failure.

The current leader in the open-source category is Falcon-40B, which holds a 61.9 % score on ARC. This is impressive for an open model but still far from human-level performance. In the closed-source category, models like Google’s ST-MoE-32B exceed 86 %, highlighting the advantages that proprietary architectures can bring in reasoning tasks. These differences matter when selecting AI for use in healthcare diagnostics, financial risk modeling, or legal analysis, where reasoning quality can outweigh raw factual knowledge.

ARC and the Hugging Face Leaderboard

The Hugging Face Open LLM Leaderboard provides a comprehensive view of model performance by combining ARC with other benchmarks, each targeting a different capability. This multi-benchmark structure allows evaluators to see how well a model performs across reasoning, commonsense, multitasking, and factual accuracy.

| Benchmark | Primary Focus | Description |
| --- | --- | --- |
| ARC | Scientific reasoning | Complex science questions |
| HellaSwag | Commonsense reasoning | Daily-life scenario logic |
| MMLU | Multitask capability | Diverse academic questions |
| TruthfulQA | Fact-checking | Models’ truthfulness |

ARC’s role in this mix is to identify models that can apply structured reasoning to domain-specific problems. HellaSwag complements this by checking a model’s understanding of everyday logic and plausibility. MMLU tests adaptability across many academic and professional fields, while TruthfulQA verifies whether a model produces factually correct information.

This combination of tests helps guard against overfitting to a single benchmark and offers a clearer picture of a model’s overall strengths and weaknesses. For organizations choosing between competing AI systems, such transparency is essential. It ensures that a high ARC score is evaluated alongside other necessary skills, resulting in more balanced and informed deployment decisions.
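One simple way to read several benchmark scores together is to look at their average and at the weakest individual result. The sketch below assumes an unweighted mean, which is an illustrative choice rather than a statement of how any particular leaderboard aggregates. The scores and the `benchmark_profile` helper are hypothetical.

```python
from statistics import mean


def benchmark_profile(scores: dict[str, float]) -> tuple[float, str]:
    """Return the unweighted average score and the name of the weakest benchmark."""
    average = mean(scores.values())
    weakest = min(scores, key=scores.get)
    return average, weakest


# Hypothetical scores in percent, not taken from a real model (assumption).
scores = {"ARC": 60.0, "HellaSwag": 80.0, "MMLU": 55.0, "TruthfulQA": 45.0}
average, weakest = benchmark_profile(scores)
print(f"Average: {average:.1f} %, weakest area: {weakest}")
```

Reporting the weakest benchmark next to the average keeps one strong score from hiding a gap elsewhere.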

ARC in Real-World Domains

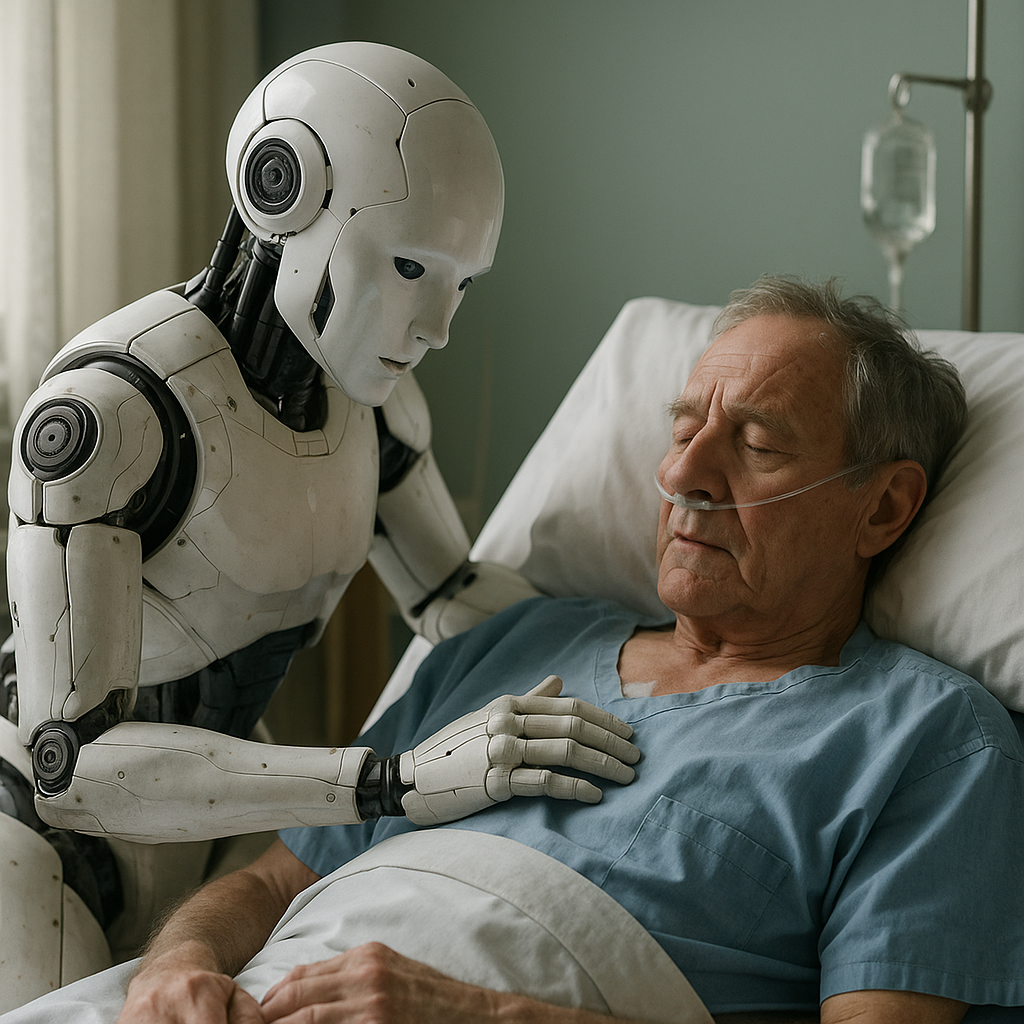

ARC’s design makes it useful far beyond academic evaluation. In healthcare, ARC-like reasoning challenges are valuable for testing AI systems intended for diagnosis or treatment planning. Such systems must integrate patient symptoms, laboratory data, imaging results, and medical history into coherent conclusions. High ARC performance suggests that a model is better equipped to manage complex or ambiguous cases, such as those involving overlapping conditions or rare diseases. This can reduce misdiagnoses and improve patient safety.

In education, ARC principles can be applied to adaptive learning systems that tailor instruction to each student. These systems must not only detect errors in a learner’s responses but also understand the reasoning behind those errors. A high-performing model on ARC-like benchmarks is more likely to give clear explanations, offer accurate examples, and adjust its teaching style to address specific misunderstandings. This supports more effective and personalized learning experiences.

In scientific research, ARC-style evaluation can help determine whether AI is capable of synthesizing findings from multiple studies. This ability is crucial in fields like pharmacology, where understanding drug interactions requires integrating data from chemistry, biology, and clinical trials. Models with strong ARC performance are better at identifying hidden connections, generating relevant hypotheses, and proposing viable next research steps. This kind of reasoning can accelerate discovery in domains such as materials science, climate modeling, and environmental health, where progress depends on interpreting diverse and often fragmented data sets.

Scoring and Evaluation

The ARC scoring system is intentionally simple but captures important details about a model’s reasoning. Each correct answer is worth one point. If the model selects multiple choices and one of them is correct, it receives partial credit. The credit is calculated as one divided by the total number of options selected. This prevents models from gaining full points by guessing all possible answers and rewards more focused reasoning.
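The rule described above can be sketched in a few lines. The function names `arc_item_score` and `arc_score` are hypothetical, and the predictions are invented for illustration. Each prediction is modelled as the set of option labels the model selected.

```python
def arc_item_score(selected: set[str], answer_key: str) -> float:
    """Score one question: 1/k if the key is among the k selected options, else 0."""
    if answer_key not in selected:
        return 0.0
    return 1.0 / len(selected)


def arc_score(predictions: list[set[str]], answer_keys: list[str]) -> float:
    """Average the per-question credit over the whole evaluation set."""
    if len(predictions) != len(answer_keys):
        raise ValueError("predictions and answer_keys must have the same length")
    credits = [arc_item_score(p, k) for p, k in zip(predictions, answer_keys)]
    return sum(credits) / len(credits)


# Invented predictions for four questions whose correct label is "B" (assumption).
predictions = [{"B"}, {"A", "B"}, {"A", "C"}, {"A", "B", "C", "D"}]
answer_keys = ["B", "B", "B", "B"]
total = arc_score(predictions, answer_keys)
print(f"Average credit per question: {total:.4f}")
```

Selecting all four options earns only 0.25 for that question, the same as the expected value of a random guess, so hedging across every choice gains nothing.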

This scoring method provides more than a raw accuracy percentage. It allows researchers to see how often a model identifies the correct answer as part of a smaller, targeted set of possibilities. This is particularly important when evaluating reasoning under uncertainty. A model that consistently includes the right answer among a few choices is showing partial comprehension, even if it does not always commit to the single correct option.

For example, in healthcare applications, partial correctness can still be clinically useful. If an AI diagnostic assistant includes the correct disease in its top two suggestions, a physician can investigate further and confirm or rule out each possibility. This is safer than a system that provides a single but incorrect diagnosis. In technical troubleshooting, partial credit can indicate that the AI is narrowing down the likely cause of a fault, even if it has not fully resolved the issue.

By tracking both full and partial scores, ARC makes it possible to monitor incremental improvements in reasoning ability. Developers can see whether training changes increase the likelihood of the correct answer being in the top set of choices. Over time, this helps refine models for high-stakes environments where near-miss answers still guide productive action.

Methods to Improve ARC Performance

Improving ARC scores requires targeted strategies that strengthen both factual knowledge and reasoning ability. One effective method is fine-tuning models with diverse datasets that include reasoning-rich problems. Another approach is integrating retrieval-augmented generation using the Graphlogic Generative AI Platform. This gives models real-time access to relevant information when solving ARC-style tasks, improving accuracy without memorizing fixed answers.

Training with multi-step reasoning prompts can improve a model’s ability to break down complex tasks. Regular testing on both Easy and Challenge Sets ensures balanced improvement. Using the ARC Corpus for targeted practice can further improve a model’s ability to link related facts spread across multiple contexts.
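A multi-step reasoning prompt for a multiple-choice question might look like the sketch below. The wording of the steps, the `Answer: <label>` convention, and the helper names `build_reasoning_prompt` and `extract_answer` are all assumptions made for illustration, not a prescribed ARC format.

```python
import re
from typing import Optional


def build_reasoning_prompt(question: str, choices: dict[str, str]) -> str:
    """Format a question so the model is asked to reason before answering."""
    lines = [f"Question: {question}", "Choices:"]
    lines += [f"  {label}. {text}" for label, text in choices.items()]
    lines += [
        "Work through the problem step by step:",
        "1. List the facts the question depends on.",
        "2. Eliminate choices that contradict those facts.",
        "3. State the single best answer as 'Answer: <label>'.",
    ]
    return "\n".join(lines)


def extract_answer(model_output: str) -> Optional[str]:
    """Pull the label out of an 'Answer: X' statement, or return None if absent."""
    match = re.search(r"Answer:\s*([A-Za-z0-9])\b", model_output)
    return match.group(1) if match else None


# Invented example question (assumption).
prompt = build_reasoning_prompt(
    "Which material is the best conductor of electricity?",
    {"A": "Rubber", "B": "Glass", "C": "Copper", "D": "Wood"},
)
print(prompt)
```

Keeping the final answer on a fixed, parseable line makes it easier to score reasoning-style outputs automatically.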

Finally, combining ARC with other reasoning-focused benchmarks such as HellaSwag and TruthfulQA creates a comprehensive evaluation loop. This ensures that progress on ARC does not come at the expense of commonsense understanding or factual accuracy.

Recent Developments

In 2025 ARC-AGI-2 introduced abstract visual puzzles to test general reasoning. Humans solve them quickly, but AI models fail on more than 90 % of them [2].

The public set contains 1 000 training puzzles. The evaluation set contains 120 unseen puzzles. Scoring uses pass@2, allowing two attempts per puzzle [5].
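The pass@2 rule can be sketched as follows: a puzzle counts as solved if either of the first two submitted grids matches the expected output exactly. The puzzle identifiers, the tiny grids, and the `pass_at_2` helper are invented for illustration and are not taken from the actual evaluation harness.

```python
Grid = list[list[int]]


def pass_at_2(attempts: dict[str, list[Grid]], solutions: dict[str, Grid]) -> float:
    """Fraction of puzzles solved within the first two attempts."""
    if not solutions:
        raise ValueError("solutions must not be empty")
    solved = 0
    for puzzle_id, solution in solutions.items():
        # Only the first two attempts count; later ones are ignored.
        first_two = attempts.get(puzzle_id, [])[:2]
        if solution in first_two:
            solved += 1
    return solved / len(solutions)


# Invented puzzles and attempts (assumption).
solutions = {"p1": [[1, 0], [0, 1]], "p2": [[2, 2]], "p3": [[3]]}
attempts = {
    "p1": [[[1, 0], [0, 1]]],      # solved on the first attempt
    "p2": [[[0, 2]], [[2, 2]]],    # solved on the second attempt
    "p3": [[[0]], [[1]], [[3]]],   # correct only on a third, uncounted attempt
}
score = pass_at_2(attempts, solutions)
print(f"pass@2: {score:.2%}")
```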

| Model Name | Model Type | ARC Score (%) | Notable Traits |
| --- | --- | --- | --- |
| Falcon-40B | Open LLM | 61.9 | Strong open-source leader |
| ST-MoE-32B | Closed LLM | 86.5 | Top proprietary model |
| GPT-4 | Closed LLM | ~80 | Multi-task proficiency |
| LLaMA 2 | Open LLM | ~65 | Efficient and versatile |

ARC and Regulation

As AI moves into sensitive sectors, regulators are considering reasoning benchmarks in approval processes. ARC offers a structured way to assess whether a system applies knowledge logically and consistently.

In healthcare, ARC-like tests could be part of certification for diagnostic AI tools. In finance, they could check reasoning quality in risk analysis or fraud detection. In education, they could help accredit AI tutoring systems.

Governments in the US, EU, and Asia-Pacific are already discussing reasoning benchmarks in AI oversight. If adopted, ARC could become a formal standard for trust-sensitive AI deployments.

Why ARC Matters for Future AI

ARC places reasoning at the center of AI evaluation, which is essential for building systems that can be trusted in sensitive environments. Many benchmarks measure factual recall, but ARC is designed to test whether a model can connect different ideas, interpret evidence, and reach logical conclusions. This skill is often more important than memorized knowledge, especially in situations where wrong answers can have serious consequences.

In healthcare, a reasoning-focused benchmark like ARC is relevant for assessing AI used in diagnostics, treatment planning, and clinical decision support. A model that performs well on ARC’s Challenge Set is more likely to integrate patient data with medical guidelines in a coherent way. In finance, strong ARC performance can indicate the ability to evaluate market scenarios or detect fraud patterns by combining multiple data points.

No model has fully solved the Challenge Set as of 2025, which underscores the difficulty of these reasoning problems. This ongoing gap drives research into better architectures, more effective training data, and advanced prompting techniques. Because no system has reached full human-level performance, ARC is likely to remain a benchmark for innovation. For AI teams, closing the Challenge Set gap is not only a technical goal but also a way to demonstrate trustworthiness and real-world readiness.

How Developers Use ARC

Researchers use ARC to evaluate new model architectures and training strategies beyond standard accuracy benchmarks. By comparing results on the Easy and Challenge Sets, they can see whether an improvement is due to better fact recall or a genuine gain in reasoning ability. This distinction is critical for designing systems that work reliably in unpredictable conditions.

Developers often analyze ARC results to pinpoint weaknesses in their models. If a system struggles with certain types of multi-step questions, they can target training with similar problems to improve performance. This approach prevents wasted resources on broad retraining when focused adjustments will suffice. Many teams incorporate ARC into automated testing pipelines using the Graphlogic Generative AI Platform. This enables continuous tracking of reasoning performance alongside other metrics.
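A lightweight version of this kind of weakness analysis is sketched below. It groups graded answers by question type and flags the types that fall under a threshold. The category labels are hypothetical tags a team would assign itself, the results are invented, and the helper names and the 0.5 threshold are arbitrary illustrative choices.

```python
from collections import defaultdict


def accuracy_by_category(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Compute accuracy per category from (category, is_correct) pairs."""
    totals: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for category, is_correct in results:
        totals[category] += 1
        correct[category] += int(is_correct)
    return {category: correct[category] / totals[category] for category in totals}


def weak_categories(accuracy: dict[str, float], threshold: float = 0.5) -> list[str]:
    """List categories whose accuracy falls strictly below the threshold."""
    return sorted(c for c, acc in accuracy.items() if acc < threshold)


# Invented graded results with team-assigned category tags (assumption).
results = [
    ("physics", True), ("physics", False),
    ("biology", True), ("biology", True),
    ("multi-step", False), ("multi-step", False),
]
accuracy = accuracy_by_category(results)
flagged = weak_categories(accuracy, threshold=0.5)
print("Categories needing targeted training:", flagged)
```

Running a check like this on every training iteration gives an early signal when a change helps one question type at the expense of another.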

For products that present results to non-technical users, ARC outcomes can be delivered through voice interfaces. The Graphlogic Text-to-Speech API can convert evaluation feedback into clear spoken explanations, making results more accessible. In educational tools, this can help instructors understand how well an AI system reasons about subject matter. In healthcare software, it can provide clinicians with concise, spoken summaries of reasoning quality, improving trust and transparency.

FAQ

ARC uses text-based science questions, while ARC-AGI-2 focuses on abstract visual puzzles.

Pass@2 scoring gives models two attempts per puzzle, capturing reasoning that improves on the second try.

ARC is relevant to real-world applications, especially in fields requiring complex reasoning like diagnostics, research, and technical problem-solving.

Falcon-40B holds 61.9 % on ARC as of early 2025.

ARC should not be used on its own. It should be combined with other benchmarks to get a complete performance profile.