Artificial intelligence in medicine depends on one crucial stage called pre-training. This is when a large language model learns to understand human language before it ever sees a medical record or scientific paper. The quality of this phase determines whether an AI system will later provide useful clinical insights or produce unreliable answers.

In this article we will explore how data shapes the intelligence of medical AI. You will learn what happens during pre-training, how models transform raw text into structured knowledge, and why the quality of that data matters more than its size. We will also look at modern preprocessing techniques, key trends in ethical data use, and practical steps for building reliable language models in healthcare. Finally, the article will outline future directions — from privacy-preserving datasets to explainable medical AI that doctors can truly trust.

Why Pre-Training Matters

Every medical AI assistant, search engine, or decision-support tool begins its life in pre-training. During this process, the model reads through billions of sentences from books, articles, and public web pages. It studies how words connect and how context changes meaning. The model does not yet perform medical tasks. It simply builds the linguistic foundation that later allows it to interpret lab results, summarize research, or translate patient notes into plain language.

How Models Learn During Pre-Training

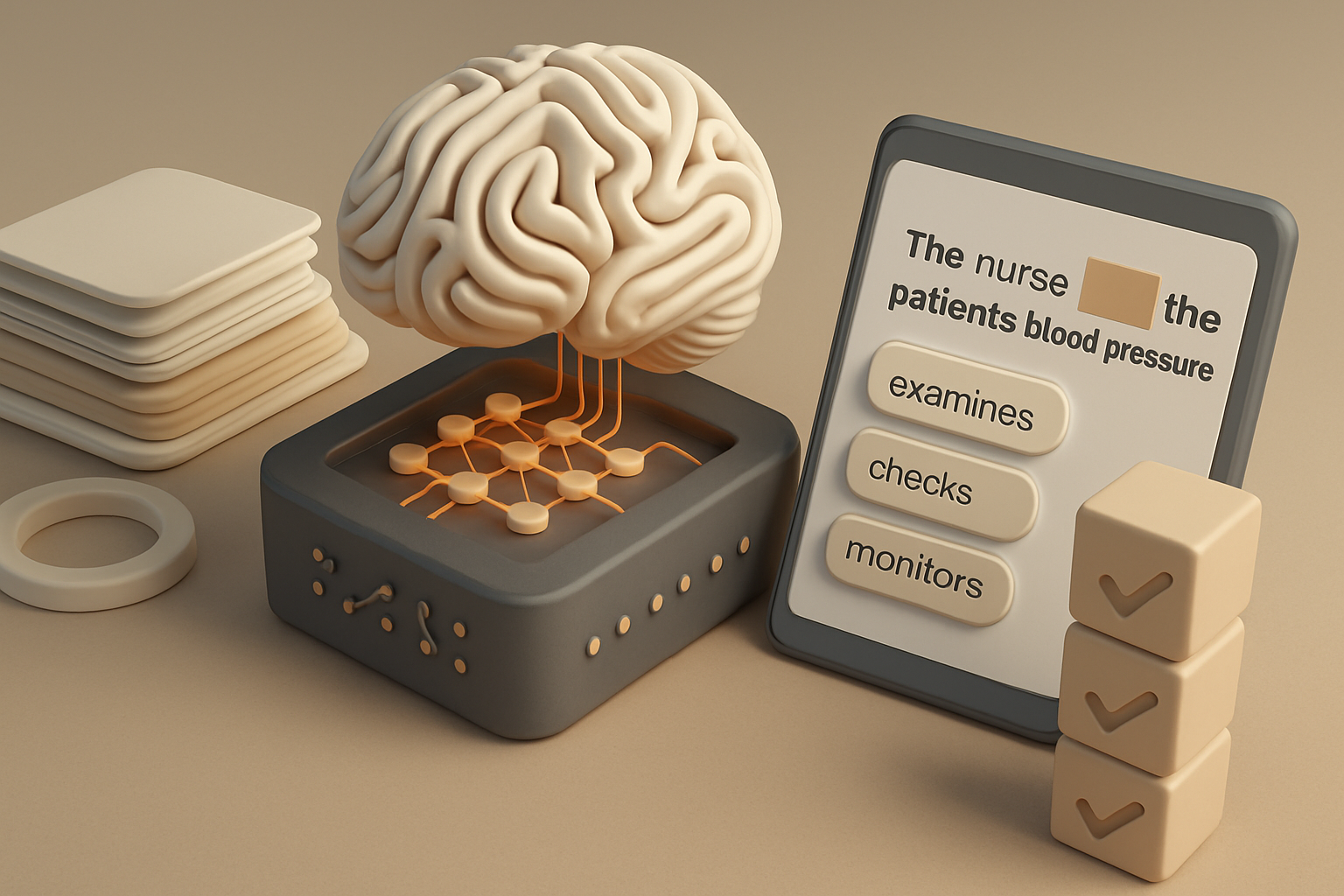

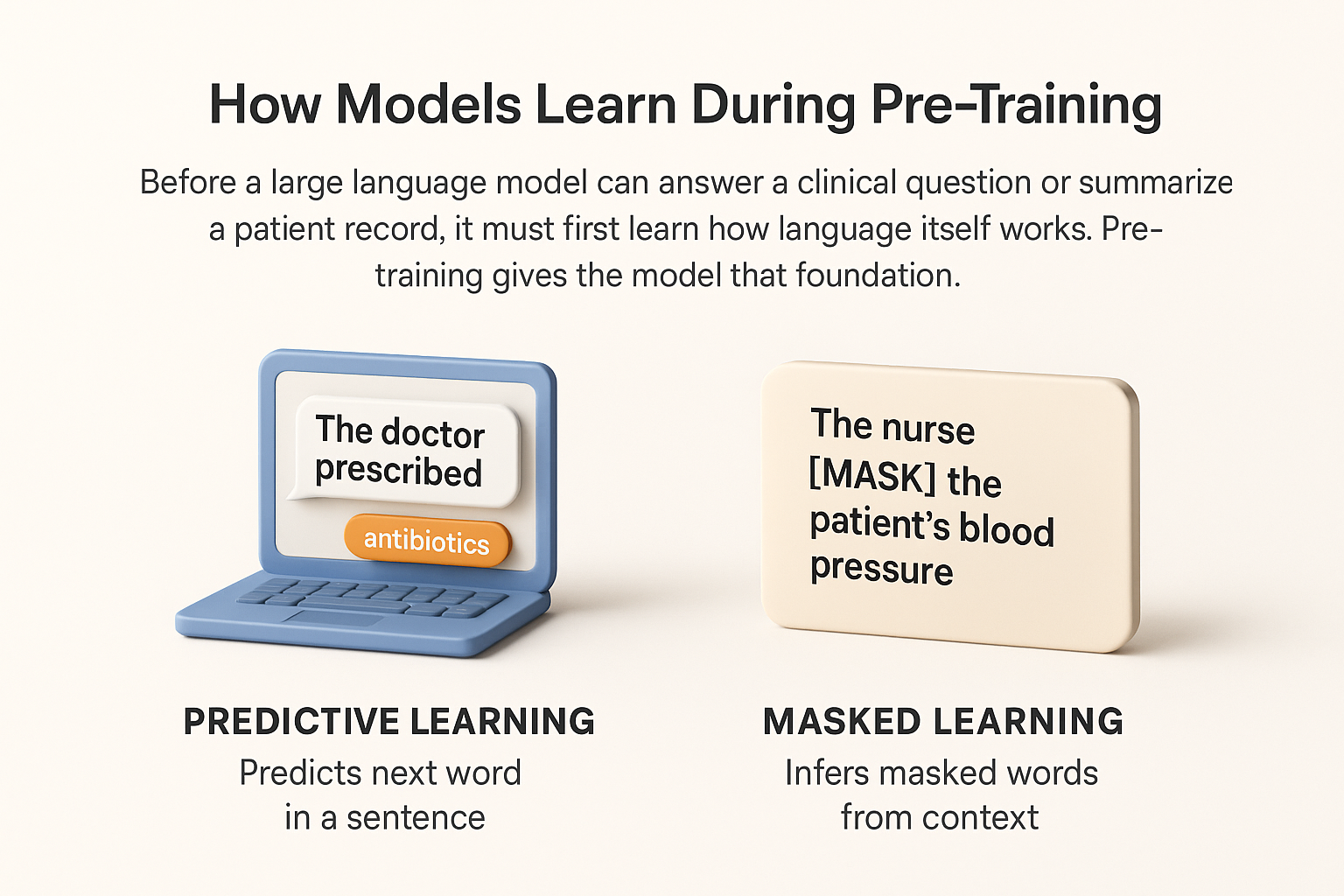

Before a large language model can answer a clinical question or summarize a patient record, it must first learn how language itself works. Pre-training gives the model that foundation. During this stage, the system processes billions of sentences to detect patterns, grammar, and relationships between words. It does not yet know medicine, but it learns how to read, predict, and reason through text.

A model learns language in two main ways. The first method is predictive or causal learning. It teaches the system to anticipate what word or phrase should come next. For example, when reading “The doctor prescribed,” the model predicts likely continuations such as “antibiotics” or “treatment.” By repeating this across trillions of tokens, the model develops a sense of fluency and coherence.

The second method is masked learning. Here, certain words in a sentence are hidden, and the model must infer what they are based on the surrounding context. For example, in “The nurse [MASK] the patient’s blood pressure,” the model learns to recover the missing verb. This approach builds strong comprehension because the model examines both the left and right context rather than reading only forward.

Modern systems often combine these two strategies. Predictive learning helps with generating natural sentences, while masked learning strengthens understanding of meaning. Together, they allow the model to handle the complexity of medical language, where small differences in context — such as “positive” in a test result — can completely change interpretation.

Data as the Real Source of Intelligence

No model can think better than the data it was trained on. In the medical field, this rule is absolute. If the dataset contains low-quality or biased information, the model will reproduce those errors in its predictions. A single incorrect pattern in data can lead to the model offering unsafe advice or misinterpreting a clinical summary.

High-quality data gives the model not only knowledge but also reliability. Engineers therefore spend months curating text that represents diverse medical specialties, languages, and writing styles. Good data includes scientific literature, anonymized patient records, clinical trial reports, and healthcare guidelines. Poor data, such as unverified web content or duplicated passages, can corrupt learning outcomes.

A reliable dataset should reflect how real professionals communicate. That includes abbreviations, shorthand notes, and mixed terminology found in everyday hospital records. It must also include plain-language examples so the model can later talk naturally with patients. Diversity and accuracy together form the real intelligence inside an AI system.

How Data is Prepared Before Training

Transforming raw text into a medical-grade dataset involves several precise steps that determine how well the model will later perform in clinical tasks. Each stage requires both automation and human supervision to ensure the highest data quality.

- Collection. Engineers start by gathering text from trusted sources such as scientific journals, regulatory documents, medical textbooks, and verified open datasets. The goal is to provide the model with both broad linguistic coverage and domain-specific terminology. This step also includes building balanced samples from different specialties like oncology, cardiology, and pharmacology to reflect the diversity of real clinical communication.

- Filtering. Once collected, data undergoes automated filtering to remove irrelevant, noisy, or low-quality content. Spam pages, broken files, and non-medical discussions are excluded. In sensitive domains like healthcare, human experts often review random samples to confirm contextual relevance and readability. This combination of automation and human oversight prevents unwanted data patterns from slipping into the corpus.

- De-duplication. Teams then identify and remove duplicate passages that can distort model learning. Repetition may cause a model to memorize text rather than extract meaning, which reduces its ability to generalize across new cases. Advanced hashing and similarity detection algorithms help recognize and eliminate near-identical entries across millions of documents.

- Normalization. Engineers standardize punctuation, spaces, letter cases, and special symbols to unify the text format. Although this seems minor, normalization ensures that data from multiple hospitals, regions, or publication systems can be processed consistently. Without it, small formatting inconsistencies could lead to tokenization errors and slower training.

- Tokenization. Text is broken down into smaller elements known as tokens. Instead of treating each word as a separate unit, models often use subwords, which allow rare or complex terms like chemical compounds, gene identifiers, or drug names to be represented more efficiently. Tokenization also reduces vocabulary size, saving computing power while preserving meaning.

- Chunking. The final step divides the tokenized text into fixed-length sequences that the model can process during training. These chunks must be long enough to preserve context but short enough to fit within the model’s memory window. Proper chunking ensures that language flow and medical context are not broken between sequences, leading to more coherent understanding and prediction.

Each of these six stages directly influences the accuracy, stability, and cost of model training. Well-designed preprocessing can reduce computation time by up to 25% and improve comprehension at the same time. For medical applications, that means faster training, safer predictions, and models capable of supporting real-world clinical decision-making with greater reliability.

Balancing General and Medical Data

Creating a language model for healthcare requires a careful mix of general and domain-specific data. General text helps the system understand everyday language, idioms, and grammar. Medical data, in contrast, teaches precision, terminology, and professional tone.

If training includes only clinical text, the model may become rigid and struggle with natural conversation. It might understand the term “hypertension” but fail to connect it with “high blood pressure” in patient-friendly dialogue. Conversely, if trained only on public text, it may misunderstand medical abbreviations or dosage terms.

The most effective balance combines both worlds. Many projects use roughly 70% general data and 30% medical data during pre-training. This ratio allows the model to remain fluent yet accurate. Later fine-tuning can further specialize it for clinical reasoning or documentation tasks.

Maintaining balance also helps reduce bias. When the dataset reflects multiple communication styles — from academic research to nursing notes — the model becomes more adaptable to real-world healthcare settings. It can respond naturally to a patient, summarize a complex study for a doctor, and keep tone and meaning consistent.

Avoiding Memorization and Bias

One of the most serious challenges during language model training is unwanted memorization. Instead of learning patterns and meaning, a model may simply store and reproduce exact fragments from its dataset. In everyday applications this is already undesirable, but in medicine it can be dangerous. A model that recalls confidential phrases from patient records can unintentionally expose private information.

To prevent this, developers continuously monitor the model’s outputs during pre-training and fine-tuning. Specialized tools detect repetitions and overrepresented patterns. When the same phrasing appears too frequently in generations, teams adjust or rebalance the dataset. In addition, deduplication at the data-processing stage helps remove identical text segments before they ever reach the training phase.

Another risk is bias. Medical language reflects the history of healthcare systems, which means it can contain demographic or cultural imbalances. If these remain uncorrected, a model might prioritize certain groups, overlook others, or reproduce outdated medical assumptions. Bias may also appear when data from one region dominates over others, leading to lower performance on diverse populations.

Reducing bias requires a mix of technical and ethical practices. Developers include geographically and linguistically diverse data, balance gender and age representation, and involve clinicians from different backgrounds in dataset review. Fairness auditing tools are used to measure whether predictions vary between demographic groups. Regular human evaluation remains essential because many subtle stereotypes or linguistic asymmetries cannot yet be detected automatically.

Ethical responsibility in model design now forms part of medical AI governance. Institutions expect transparent documentation about what data was used, how it was cleaned, and what steps were taken to protect privacy. These principles make AI safer and align it with the trust standards required in clinical environments.

Trends Defining the Future of Pre-Training

The pre-training stage of medical AI continues to evolve rapidly. New trends show where the field is heading and what priorities define responsible innovation.

1. Privacy Protection

Privacy has become a central requirement in healthcare AI. Regulations such as GDPR in Europe and HIPAA in the United States require strict anonymization of patient data. Modern frameworks address this by generating synthetic medical text that maintains linguistic realism without referencing any real individuals. Synthetic data now plays a growing role in expanding training sets while preserving confidentiality.

2. Energy and Cost Efficiency

Training a large model demands vast computational resources. Developers are therefore shifting focus from sheer scale to smarter data use. Strategies such as adaptive sampling, smaller vocabularies, and selective retraining maintain accuracy with less power and memory. This move not only cuts costs but also reduces the environmental footprint of large-scale AI development, which is increasingly scrutinized by both regulators and the scientific community.

3. Transparency and Explainability

Clinicians no longer accept opaque “black-box” algorithms. They need to know how an AI reached its conclusion. Future pre-training pipelines are expected to include metadata that connects model predictions to specific types of data or reasoning steps. Such traceability helps doctors validate outputs, build trust, and integrate AI safely into patient care.

4. Collaborative and Open Datasets

Hospitals, research centers, and universities are beginning to form collaborative networks that share de-identified medical data. These joint repositories allow smaller organizations to train competitive models without breaching patient privacy. This democratization of access strengthens innovation and helps standardize data quality across the field.

5. Domain Adaptation and Continuous Learning

New methods allow models to keep learning from fresh medical data without full retraining. This continuous adaptation is crucial for fields like infectious disease tracking, where knowledge changes rapidly. Updating models in smaller, frequent cycles ensures that language and clinical knowledge remain current.

Collectively, these trends point toward an ecosystem that values responsibility and sustainability as much as raw capability. The next wave of pre-training will focus less on size and more on precision, transparency, and collaboration.

Real-World Examples

Practical use of pre-trained models in medicine is already widespread. Hospitals now apply specialized language models to automate report writing and assist doctors in summarizing electronic health records. These systems can reduce administrative workload and ensure that key information is not lost in long notes.

Pharmaceutical companies employ large models to analyze clinical trial reports, detect potential safety issues, and identify promising research directions. This speeds up the process of evaluating new treatments while maintaining compliance with regulatory standards.

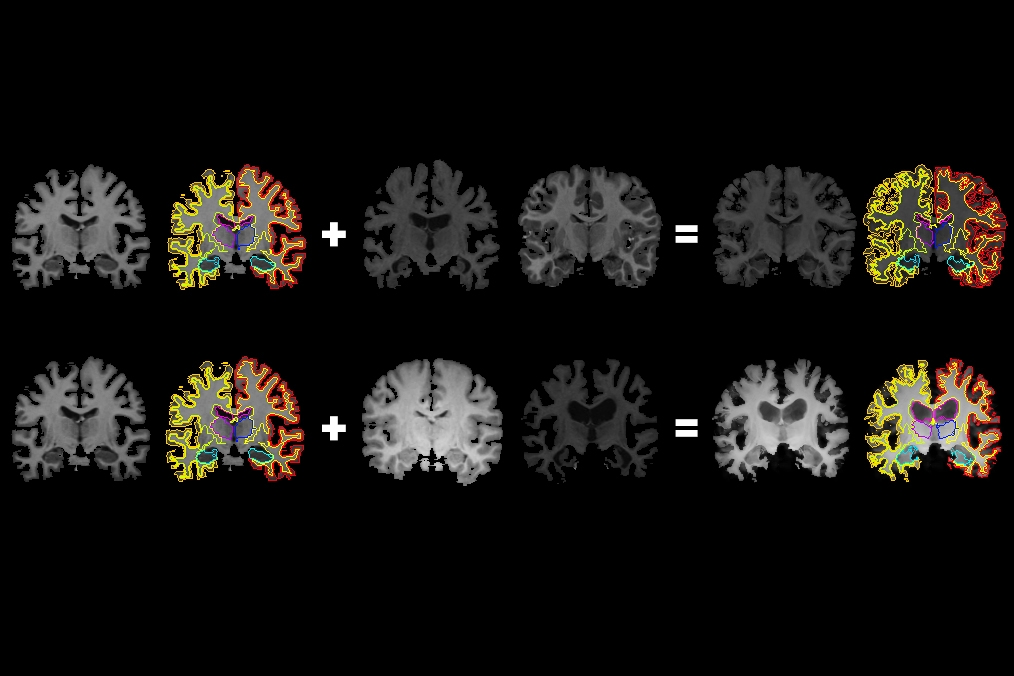

Public health agencies explore multilingual models capable of translating medical guidelines and patient information across languages, making healthcare more inclusive. Research groups also train smaller domain-specific models for radiology, pathology, or genomics to improve diagnostic accuracy.

All these applications rely on the same foundation: carefully prepared, high-quality pre-training data. Without rigorous preprocessing and ethical review, even the most powerful architecture cannot produce safe or reliable outcomes. When done correctly, pre-training becomes not only a technical process but also a statement of trust, transparency, and responsibility in modern medicine.

Tips for Building Medical LLMs

Creating a reliable language model for medicine requires much more than computing power. The foundation lies in data integrity, domain knowledge, and continuous quality control. The following recommendations summarize key practices used by leading research teams and healthcare AI developers.

- Collect data from verified scientific and clinical sources.

Only use text that originates from trusted medical publications, validated databases, and clinical documentation reviewed by professionals. Examples include peer-reviewed journals, regulatory guidelines, and de-identified hospital records. Avoid relying on open web content or social media, which can introduce misinformation and unverified claims. Verified data ensures that the model learns evidence-based medicine rather than popular myths. - Apply strict anonymization before any model sees the text.

Before including clinical data, all personally identifiable information must be removed or masked. This includes names, addresses, record numbers, and even contextual details that could reveal identity indirectly. Advanced anonymization uses both manual review and algorithmic pattern detection. It is safer to lose some data than to risk privacy violations. In healthcare AI, compliance with data protection standards is as important as technical accuracy. - Regularly monitor output to detect factual errors.

Even a well-trained model can make confident but incorrect statements. Teams should review generated summaries, diagnoses, or research analyses at multiple training stages. Continuous human-in-the-loop evaluation helps catch inaccuracies before deployment. When errors are found, they often trace back to specific data sources or preprocessing issues, allowing developers to refine the pipeline efficiently. - Include diverse linguistic styles, from academic reports to patient dialogues.

Medical language varies widely. A cardiologist’s report, a public health brochure, and a patient’s email all use different tone and structure. Training the model on these varied styles helps it adapt to real-world communication. This diversity also supports multilingual healthcare delivery and patient-centered design, allowing AI to interact naturally with professionals and the public alike. - Retrain tokenizers to handle chemical names, gene codes, and dosage patterns.

Standard tokenization often splits long terms like “acetylsalicylic acid” or “BRCA1 gene” into meaningless fragments. Custom tokenizers trained on biomedical text prevent this and help the model represent complex terms correctly. This small step significantly improves accuracy in pharmacology, genomics, and clinical text analysis. - Keep a separate validation dataset that never overlaps with training material.

Validation is the true measure of performance. The dataset used for testing must remain isolated from training to avoid information leakage. In medical AI, overlapping data can give a false impression of accuracy and hide generalization problems. Always validate on new, unseen documents from similar but distinct sources. - Document every decision in the data pipeline.

Record where data came from, what filters were applied, and what was excluded. Transparent documentation supports reproducibility and accountability. Hospitals and regulators increasingly request such audit trails as part of AI governance. - Plan for continuous updates and retraining.

Medical knowledge evolves. Drug recommendations change, new diseases appear, and terminology shifts. A reliable model must have a mechanism for regular updates, whether through incremental fine-tuning or scheduled re-training on fresh, verified data.

Following these practices builds systems that are not only accurate but also compliant, explainable, and adaptable to the fast-changing medical landscape.

The Human Role in AI Training

Despite major advances in automation, the most important factor in medical AI remains human expertise. Language models may learn statistical patterns, but they cannot interpret medical meaning without guidance. Clinicians, linguists, and data scientists play distinct yet complementary roles in the training process.

Clinicians ensure medical correctness. They review datasets, flag ambiguous terminology, and help define what constitutes an acceptable response. Their feedback during evaluation prevents subtle clinical errors from being embedded into the model.

Linguists and domain editors analyze how information is expressed. They verify that the model’s output is both grammatically sound and contextually accurate. In patient-facing systems, they make sure the tone is empathetic and clear.

Data scientists and engineers maintain the pipeline’s integrity. They manage preprocessing, tokenization, and monitoring systems. Their technical insight ensures that the model’s training follows reproducible and ethical standards.

Human review continues even after deployment. In clinical applications, experts monitor live interactions and audit model outputs to ensure safety. This process, sometimes called “human-in-the-loop oversight,” balances automation with professional judgment.

Ultimately, AI can process millions of records faster than any human team, but it lacks intuition, ethical reasoning, and real-world accountability. Human experts define what accuracy, fairness, and trust mean in a healthcare setting. Their participation transforms a machine-learning model into a responsible medical tool that aligns with the principles of care and patient safety.

FAQ

It is the first stage where AI learns language patterns from large text collections before handling specific medical tasks.

Even small errors can distort learning. Careful cleaning ensures accurate, consistent, and safe data.

Yes. Hospitals can train smaller local models on anonymized data using open frameworks.

The model may repeat private text, spread misinformation, or misinterpret clinical terms.

It will rely on mixed data sources, stronger privacy controls, and explainable medical outputs.