Education in 2025 is experiencing a fundamental shift. According to an IDC survey, 86% of education organizations now use generative AI, more than in any other industry. Another IDC study shows that 60% of universities already run generative AI applications in production, while 32% plan deployment within a year.

In the United Kingdom a survey by Jisc reported that 92% of students now use AI tools such as chatbots for study compared with 66% in 2024. In the United States researchers at an elite college found that 80% of students had adopted AI academically within two years of the launch of ChatGPT. These numbers reveal that chatbots are no longer experiments. They are becoming part of everyday education.

How Chatbots Support Students and Staff in Practice

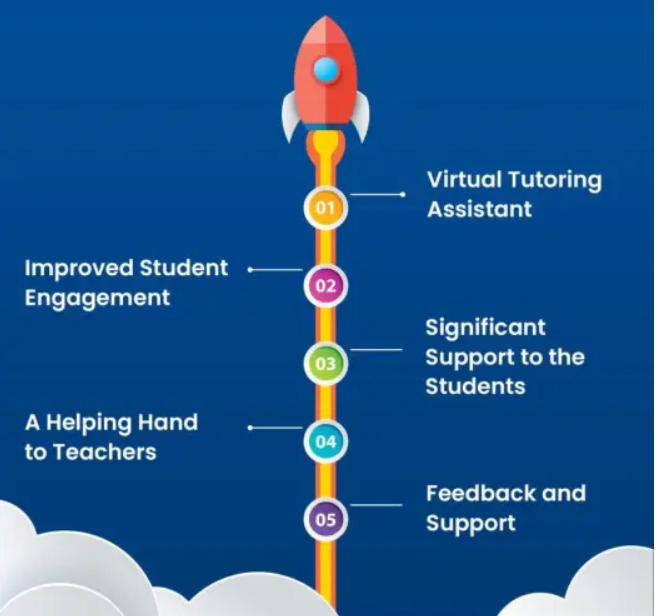

Chatbots in schools and universities work as helpers. They answer questions about admissions, timetables, deadlines and homework. They provide responses at any time of the day. This matters in global institutions where students live in different time zones.

In South Australia the EdChat chatbot can assess an English language assignment in 52 seconds instead of 30 minutes. The program saves about 15 000 teacher hours every year. Teachers spend more time guiding students instead of grading routine tasks.

In Toronto the All Day TA chatbot answered 12 000 queries in one semester at the Rotman School of Management. Professors reported that students asked deeper questions in office hours since the simple issues were already resolved. The system now operates in over 100 institutions across North America.

These cases demonstrate how chatbots reduce repetitive work and give staff more time for meaningful interaction.

Benefits That Institutions Report and Their Real Limits

The benefits of chatbots are visible in statistics. One U.S. university saw a 40% drop in unresolved student tickets after deploying a chatbot. Response times shortened and student satisfaction improved.

Key benefits include faster engagement, reduced workload, scalability without new hires, and multilingual inclusivity. Yet challenges remain. Chatbots struggle with nuanced or emotional requests. They can mislead users if their content is not updated regularly. They often integrate poorly with legacy systems. Privacy compliance is crucial under GDPR in Europe and FERPA in the U.S. Training is necessary so teachers know how to rely on AI without fear.

Technology alone does not guarantee success. Careful human oversight and policy frameworks determine effectiveness.

Personal Learning Gets Smarter with Adaptive Chatbots

Adaptive chatbots use performance data to tailor content. They deliver quizzes, evaluate responses, and adjust lessons. Students can learn at their own pace. Teachers see progress and identify gaps.
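The quiz, evaluate, adjust loop described above can be sketched in a few lines of Python. This is a minimal illustration and not how any particular product works: the `AdaptiveQuiz` class, the five difficulty levels, the five-answer window, and the 80% and 40% thresholds are all assumptions chosen for the example.

```python
from collections import deque


class AdaptiveQuiz:
    """Tracks recent answers and moves the difficulty level up or down."""

    def __init__(self, levels=5, window=5, raise_at=0.8, lower_at=0.4):
        # All defaults are hypothetical values chosen for illustration.
        self.levels = levels
        self.level = 1                        # start at the easiest level
        self.recent = deque(maxlen=window)    # rolling record of right/wrong
        self.raise_at = raise_at
        self.lower_at = lower_at

    def record(self, correct: bool) -> int:
        """Store one answer and return the level for the next question."""
        self.recent.append(correct)
        # Only adjust once a full window of answers has been collected.
        if len(self.recent) == self.recent.maxlen:
            accuracy = sum(self.recent) / len(self.recent)
            if accuracy >= self.raise_at and self.level < self.levels:
                self.level += 1
                self.recent.clear()           # re-measure at the new level
            elif accuracy <= self.lower_at and self.level > 1:
                self.level -= 1
                self.recent.clear()
        return self.level
```

A student who answers a full window correctly moves up one level, and a student who misses most of a window moves down one. The same rolling record is what a teacher dashboard could read to spot gaps.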

Georgia Tech uses adaptive AI in computer science education to modify lessons in real time, according to a 2025 report. In language learning, Duolingo chatbots already personalize practice.

The Graphlogic Generative AI & Conversational Platform supports adaptive learning by generating tailored responses. The Graphlogic Text-to-Speech API converts lessons into speech, improving access for auditory learners and visually impaired students. Such features extend inclusivity without heavy cost.

Trends Shaping Chatbots in Education

Immersive Learning with AR and VR

A 2025 EDUCAUSE survey shows 97% of students prefer courses enhanced with AR or VR, while 90% of educators confirm higher engagement. Chatbots are expected to guide students inside virtual labs or simulations.

Institutional AI Training

The American Federation of Teachers is building an AI training hub funded with $23 million from Microsoft, OpenAI and Anthropic. Teachers will learn to design quizzes, plan lessons and communicate with parents using AI. The focus is on control by educators rather than dependence on vendors.

Rapid Student Adoption Without Policy

In the UK 92% of students report AI use but only 33% receive training. This gap raises risks for academic integrity.

Local AI for Equity

The nonprofit Education Above All launched Ferby, serving 5 million children in India through localized chatbots. It works across languages and runs on low cost devices.

Academic Integration Rising

At a selective U.S. college 80% of students already use AI in their studies. Humanities students rely on it for writing. Engineering students use it for coding.

The Role of Parents in Educational Chatbots

Parents are becoming active users. In the United States 40% of schools now provide parental access to AI assistants. Parents check attendance, homework rules and upcoming deadlines.

A survey shows 68% of parents believe chatbots improve communication. Yet only 25% fully trust their accuracy. Schools must earn trust with reliable sources and transparent policies.

The Digital Divide and AI Access

Not every student benefits equally. UNESCO estimates that 260 million children remain out of school worldwide. Many lack internet access. Traditional AI chatbots cannot reach them.

Low bandwidth or SMS-based solutions are emerging in Africa and South Asia. Local language models improve access. A chatbot that works offline or in regional languages can reduce divides. Policymakers must view chatbots as equity tools, not just efficiency projects.
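One practical detail of SMS delivery is that a chatbot reply has to be cut into short, numbered messages. The sketch below shows one way to do that. The function name `split_for_sms` is hypothetical. The 160-character default assumes the common single-message limit for GSM-7 text, and messages in many regional scripts fit fewer characters, so the limit is a parameter.

```python
def split_for_sms(text: str, limit: int = 160) -> list[str]:
    """Split a reply into numbered segments of at most `limit` characters."""
    words = text.split()
    single = " ".join(words)
    if len(single) <= limit:
        return [single] if single else []

    # Reserve space for a counter such as "(12/15) ". This assumes
    # no more than 99 segments per reply.
    room = limit - len("(99/99) ")
    if room < 1:
        raise ValueError("limit is too small to hold a part counter")

    chunks, current = [], ""
    for word in words:
        # Hard-split any word that cannot fit in one segment on its own.
        while len(word) > room:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(word[:room])
            word = word[room:]
        candidate = f"{current} {word}".strip()
        if len(candidate) <= room:
            current = candidate
        else:
            chunks.append(current)
            current = word
    if current:
        chunks.append(current)

    total = len(chunks)
    return [f"({i}/{total}) {chunk}" for i, chunk in enumerate(chunks, 1)]
```

Breaking on word boundaries keeps each message readable on its own, which matters when segments arrive out of order on weak networks.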

Legal and Ethical Considerations

Compliance Requirements

Chatbots handle sensitive student data such as grades, identifiers, and communication records. In the United States institutions must follow FERPA. In Europe GDPR applies. In California CCPA gives families rights to know and delete collected data. Managing compliance is complex when multiple vendors are involved.

Policy Gaps

A 2024 EDUCAUSE survey showed that 47% of universities lacked a clear AI data policy. By 2025 the number is improving, but many institutions remain exposed. Without clear rules chatbots may store unnecessary or insecure data.

Cybersecurity Risks

Education is a frequent target of cyberattacks. A 2025 report recorded a 32% rise in attacks compared with 2024. Chatbots linked to student portals can become entry points if not secured with strong authentication and encryption.

Ethical Concerns

Sensitive data from mental health queries or counseling requests raises ethical questions. Many experts argue that emotional or health data should never be stored without explicit consent. Universities must provide transparency, opt out rights, and publish regular reports.
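A consent rule like this can be enforced in code before anything is written to a log. The following is a rough sketch under stated assumptions. The `StudentPrefs` fields, the `storage_decision` function, and the keyword list are all hypothetical, and simple keyword matching is far too crude for real use. It only shows where the check sits.

```python
from dataclasses import dataclass

# Hypothetical keyword list for illustration only; a real deployment
# would need a much more careful way to detect sensitive messages.
SENSITIVE_TERMS = {"anxiety", "depression", "counseling", "self-harm", "medication"}


@dataclass
class StudentPrefs:
    consented_to_sensitive_storage: bool = False
    opted_out_of_logging: bool = False


def is_sensitive(message: str) -> bool:
    """Return True if the message mentions any term on the keyword list."""
    lowered = message.lower()
    return any(term in lowered for term in SENSITIVE_TERMS)


def storage_decision(message: str, prefs: StudentPrefs) -> str:
    """Return 'store' or 'discard' for one chatbot message."""
    # An opt-out applies to every message, sensitive or not.
    if prefs.opted_out_of_logging:
        return "discard"
    # Sensitive content is kept only with explicit consent.
    if is_sensitive(message) and not prefs.consented_to_sensitive_storage:
        return "discard"
    return "store"
```

The default for both preference flags is the privacy-protective one, so a student who has never been asked is treated as not having consented.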

The Bottom Line

The legal and ethical challenges are not barriers but reminders. With clear contracts, audits, and responsible governance, chatbots can support education while protecting student privacy and trust.

Challenges That Persist

Many universities still hesitate to adopt AI at scale. A study of U.S. research universities found that 63% encourage the use of generative AI, 56% share sample syllabi with AI guidance, while 37% provide no guidance at all. The inconsistency creates confusion for both students and faculty.

The main challenges include:

- AI literacy gaps

Teachers and students must learn how to use AI responsibly, monitor outputs, and recognize bias. Without training, overreliance and misuse grow quickly. In 2024 47% of universities had no clear AI data policy.

- Faculty resistance

Many educators fear that chatbots promote plagiarism or weaken student critical thinking. Surveys show 50% of students worry about privacy, and teachers share these concerns. Trust depends on transparency and strong governance.

- Technical barriers

Legacy systems do not always integrate smoothly with AI chatbots. Implementation and maintenance costs are high, especially for smaller schools. Static knowledge bases become outdated quickly if not updated regularly.

- Legal and ethical risks

Universities must comply with FERPA in the U.S., GDPR in Europe, and CCPA in California. Failure to comply risks fines and reputational damage. Many schools lack the expertise to manage these obligations.

- Equity concerns

Wealthier institutions adopt advanced AI tools faster, while underfunded schools lag behind. UNESCO estimates that 260 million children worldwide remain out of school, many without internet access. Without inclusive design, chatbots risk widening inequalities.

The barriers are not only technical but also cultural. Institutions must invest in infrastructure, training, governance, and equity if they want AI to succeed. Otherwise resistance and ineffective deployment will continue.

Conclusion: Balancing Promise and Caution

In 2025 chatbots are mainstream. They personalize learning, cut costs and scale support. Yet they bring privacy concerns, equity challenges and risks to academic integrity.

The institutions that succeed will integrate AI responsibly. They will train staff, build strong policies and protect student trust. Chatbots should complement teachers, not replace them. With careful use, AI can make education more inclusive and effective for millions worldwide.

FAQ

No. They scale support but cannot provide empathy or complex guidance.

Yes. EdChat saved 15 000 teacher hours annually. All Day TA handled 12 000 queries at a cost of about $2 per student.

They are reliable for structured questions. They fail with nuanced or emotional issues.

Yes. Many value instant support, but 50% worry about privacy.

Risks are high. With 92% of students in the UK using AI, assessments must adapt.

Parents at 40% of U.S. schools use chatbots. 68% see improved communication, but only 25% trust accuracy.

One U.S. college reported a 15% rise in counseling referrals after introducing a chatbot. Students appreciated anonymity.

Yes. Ferby serves 5 million children in India through local language access.