In 2025, AI voice agents are more than a tech trend. According to Juniper Research, over 8.4 billion voice-enabled devices are now active globally. That is more than the population of the planet. The global market is projected to exceed $38 billion by 2026. Companies are integrating voice interfaces into hospitals, banks, retail apps, and education platforms.

These systems now book appointments, give financial updates, and help customers shop hands-free. But do they actually improve service or just replace it with automation? The answers are more nuanced than you might expect.

Below is a practical guide to how voice agents work, where they are useful, what problems they face, and how to get started.

What Is a Conversational AI Voice Agent and Why It Matters

A conversational AI voice agent is a program that listens, understands, and responds like a person. It uses speech recognition to hear what you say, natural language processing to understand the meaning, and machine learning to improve over time.

These agents are changing industries where round-the-clock support and speed are essential. In healthcare, they help patients with appointment scheduling and medication reminders. In customer service, they handle routine questions quickly and consistently. Their ability to work without breaks makes them attractive to organizations facing labor shortages.

In the US, the healthcare sector is expected to face a shortage of over 3.2 million workers by 2026, according to the Association of American Medical Colleges. AI agents can help fill these gaps by handling non-urgent interactions.

You can experiment with this kind of technology using the Graphlogic Text‑to‑Speech API, which delivers natural-sounding speech suitable for healthcare, finance, or retail use cases.

How Voice AI Works: Core Technologies Behind the System

Understanding what is under the hood of voice AI systems is key to using them effectively. Each turn of a conversation passes through several layers of processing, and a well-tuned system completes all of them in a fraction of a second.

- First is speech-to-text, which converts the user’s voice into written input. Tools like the Graphlogic Speech‑to‑Text API use neural models to improve accuracy, even with accents or background noise.

- Next is natural language processing, which identifies grammar, meaning, and intent. This allows the system to figure out whether the user wants to schedule an appointment or file a complaint.

- Then comes dialogue management, which keeps track of context from earlier in the conversation. Without this, the agent forgets what was already said, and conversations feel robotic or repetitive.

- The final step is text-to-speech, which delivers the AI’s response aloud. Modern platforms use expressive voice models to vary tone, speed, and emotion.

Systems that combine all of these layers reliably are often built using platforms like the Graphlogic Voice Box API, which lets developers test and refine AI behavior for their audience.
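
The four layers described above can be sketched as a chain of small functions. This is a minimal illustration, not any vendor's API: every function name here is hypothetical, speech recognition and synthesis are replaced by simple text encoding stubs, and intent detection uses keyword matching where a real system would use a trained model.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DialogueState:
    """Context carried across turns (the dialogue management layer)."""
    history: List[str] = field(default_factory=list)
    last_intent: Optional[str] = None


def speech_to_text(audio: bytes) -> str:
    # Stub: a real system would run a speech recognition model here.
    return audio.decode("utf-8")


def detect_intent(text: str) -> str:
    # Stub NLP layer: keyword matching stands in for a trained intent model.
    lowered = text.lower()
    if "appointment" in lowered or "schedule" in lowered:
        return "schedule_appointment"
    if "complaint" in lowered:
        return "file_complaint"
    return "unknown"


def manage_dialogue(text: str, intent: str, state: DialogueState) -> str:
    # An unrecognized utterance is treated as a follow-up to the last intent.
    if intent == "unknown" and state.last_intent is not None:
        intent = state.last_intent
        reply = "Thanks, I have noted that."
    elif intent == "schedule_appointment":
        reply = "Sure. What day works for you?"
    elif intent == "file_complaint":
        reply = "I am sorry to hear that. What went wrong?"
    else:
        reply = "Sorry, could you rephrase that?"
    state.history.append(text)
    if intent != "unknown":
        state.last_intent = intent
    return reply


def text_to_speech(reply: str) -> bytes:
    # Stub: a real system would synthesize audio here.
    return reply.encode("utf-8")


def handle_turn(audio: bytes, state: DialogueState) -> bytes:
    """Run one user turn through all four layers in order."""
    text = speech_to_text(audio)
    intent = detect_intent(text)
    reply = manage_dialogue(text, intent, state)
    return text_to_speech(reply)


state = DialogueState()
first = handle_turn(b"I want to schedule an appointment", state)
second = handle_turn(b"Tuesday at ten", state)
```

The second turn contains no scheduling keyword, yet the stored state lets the agent treat it as part of the earlier request. That is the role dialogue management plays in the list above.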

Which Type of Voice Agent Fits Your Use Case

Not every voice system works the same way. Choosing the right type depends on what kind of problem you want to solve.

- Chatbots handle simple tasks, usually by text but sometimes with voice input. They are best for FAQ-style conversations.

- Voice assistants like Siri or Google Assistant are good for everyday tasks such as setting reminders or controlling smart devices.

- AI agents are more complex and are typically deployed by businesses. They connect to databases, understand more nuanced queries, and hold longer conversations.

- AI copilots are deeply integrated into work tools. They help staff manage workflows, predict needs, and assist with multitasking.

If your goal is customer-facing automation with some intelligence, start with an AI agent. If your need is internal productivity, consider deploying a copilot-style integration.

What You Actually Gain from Voice AI

Many companies turn to voice AI because it cuts costs. But its real value goes beyond that. In successful deployments, the technology improves workflow, reduces user frustration, and adds speed to basic interactions.

- Operational efficiency increases because staff spend less time on repetitive tasks. In customer service, teams report up to 30% faster case resolution when AI handles first-line queries.

- Customer satisfaction improves when users get answers instantly. A Zendesk study shows a 20% rise in satisfaction scores when AI voice systems are used.

- Cost reduction is significant at scale. Deloitte reports companies save between $0.70 and $1.10 per automated call compared to live agents.

- Scalability ensures systems can respond during high-demand periods. Whether during flu season or sales events, AI does not get overwhelmed.

- 24/7 availability makes support accessible without hiring night shift teams or outsourcing overseas.

These results are only possible when the system is trained well and designed around the actual needs of the users.
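
To see what the per-call savings figure quoted above could mean for a budget, the arithmetic can be sketched in a few lines. The call volume below is a hypothetical input, and the sketch ignores platform and integration costs, so treat the result as a rough upper bound rather than a forecast.

```python
# Per-call savings range quoted in the article, stored in cents to avoid
# floating-point rounding surprises.
LOW_SAVING_CENTS = 70
HIGH_SAVING_CENTS = 110


def monthly_savings_range(automated_calls: int) -> tuple:
    """Return (low, high) estimated monthly savings in dollars."""
    if automated_calls < 0:
        raise ValueError("automated_calls must be non-negative")
    low = automated_calls * LOW_SAVING_CENTS / 100
    high = automated_calls * HIGH_SAVING_CENTS / 100
    return low, high


# Hypothetical volume: 10,000 automated calls per month.
low, high = monthly_savings_range(10_000)
print(f"Estimated savings: ${low:,.0f} to ${high:,.0f} per month")
```

At that assumed volume the range works out to $7,000 to $11,000 per month before any platform fees are subtracted.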

Where Voice Agents Actually Work in 2025

Voice AI has moved beyond tech demos. It is now used in major sectors with real-world outcomes.

In healthcare, systems manage patient check-ins, follow-up reminders, and pre-visit screening. The Mayo Clinic’s voice-based assistant is a widely cited example, offering basic health advice and helping reduce hotline load.

In banking, voice agents help with account balances, transaction history, and fraud alerts. Bank of America’s AI assistant “Erica” processed over 1.5 billion interactions by early 2025, with adoption still growing.

In e-commerce, smart assistants guide users through products, manage orders, and even recommend items based on past behavior. Retailers using voice interfaces see cart values increase by as much as 18%, according to internal case studies from Salesforce.

In education, tools like Duolingo have added spoken comprehension testing and pronunciation scoring, improving retention and engagement.

These sectors succeed with voice AI because they focus on repeatable, time-sensitive tasks that do not require emotional nuance.

Why Voice AI Still Struggles in Some Areas

No technology is perfect, and voice AI comes with limits. These are especially important in sectors like healthcare and finance where precision matters.

Systems often struggle with:

- Understanding regional accents or dialects, especially in noisy settings

- Recognizing sarcasm, emotion, or ambiguity, which reduces response quality

- Protecting sensitive information, which raises compliance concerns under laws like GDPR or HIPAA

- Integrating with old systems, which increases setup time and cost

To make sure your implementation succeeds, test systems with real users early. Also confirm that your platform provider encrypts voice data and can explain how it meets the privacy laws that apply to you, such as GDPR or HIPAA.

Even the most advanced platforms will misfire without good training data. That is why tools like the Graphlogic Generative AI Platform are useful. They support retrieval-augmented generation and domain-specific fine-tuning for more accurate results.
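
The retrieval step behind retrieval-augmented generation can be illustrated with a toy example. This sketch is an assumption-heavy simplification: it ranks documents by shared words where production systems typically use embedding similarity, it stops before calling any language model, and the function names and sample documents are invented for illustration.

```python
import re
from typing import List


def tokenize(text: str) -> set:
    """Lowercase a string and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Return the top_k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, documents: List[str], top_k: int = 1) -> str:
    """Combine retrieved context and the question into one model prompt."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base for a support line.
docs = [
    "Clinic hours are 9am to 5pm on weekdays.",
    "Refunds are processed within 10 business days.",
    "Parking is available behind the main building.",
]
prompt = build_prompt("When are refunds processed?", docs)
```

Because the prompt carries the organization's own text, the model answers from domain facts instead of relying only on what it learned in training.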

What the Next Generation of Voice AI Will Look Like

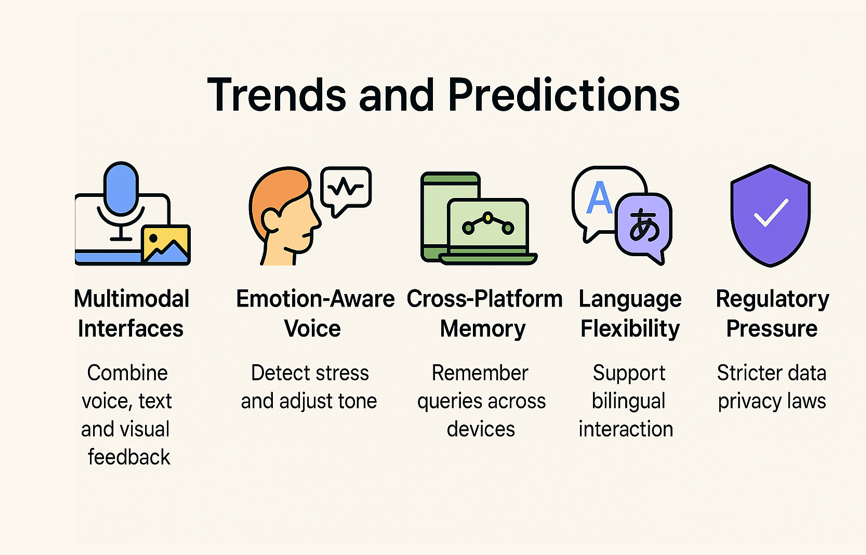

By late 2025, voice AI is evolving beyond basic assistants into a more adaptive and multimodal experience. This shift is driven by user demand for smoother, more human-like interaction, as well as by improvements in model training and deployment tools.

Here are some of the main trends shaping the future of voice AI:

- Multimodal interfaces are becoming standard. AI systems now combine voice input with visual and text feedback. This is especially useful in travel, healthcare, and e-commerce, where spoken instructions are paired with visual confirmations.

- Emotion-aware voice models are improving. New systems can detect vocal stress or frustration and respond with a calmer tone or escalate to a human. This has big implications for mental health triage and customer retention.

- Cross-platform memory is emerging. Users want continuity between devices. Platforms are beginning to remember previous queries or preferences across mobile, web, and smart speaker environments.

- Language flexibility is expanding. Many tools now support bilingual interaction and code-switching, especially in regions where people mix languages naturally in conversation.

- Regulatory pressure is increasing. Data privacy laws in Europe, North America, and parts of Asia now require clear consent for voice recordings and stricter retention policies. Compliance will shape how AI voice products are built and sold.
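
The emotion-aware behavior mentioned in the list above, responding more calmly or handing off to a human, comes down to a routing rule. The sketch below assumes a hypothetical upstream model that outputs a frustration score between 0 and 1. The thresholds and action names are illustrative and would need tuning against real conversations.

```python
from dataclasses import dataclass


@dataclass
class TurnSignal:
    frustration: float    # 0.0 (calm) to 1.0 (very frustrated), from a hypothetical model
    failed_attempts: int  # how many times the agent has failed to resolve the request


def choose_action(
    signal: TurnSignal,
    calm_threshold: float = 0.5,
    escalate_threshold: float = 0.8,
    max_failed_attempts: int = 2,
) -> str:
    """Pick the next step for the agent based on the caller's state."""
    if (
        signal.frustration >= escalate_threshold
        or signal.failed_attempts > max_failed_attempts
    ):
        return "escalate_to_human"
    if signal.frustration >= calm_threshold:
        return "respond_calmly"
    return "continue"


action = choose_action(TurnSignal(frustration=0.85, failed_attempts=0))
```

Checking the escalation condition first means a highly stressed caller reaches a person even if the agent has not yet failed at the task.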

Industry Forecasts for 2026 and Beyond

Looking ahead, several forecasts outline where this market is going:

- According to Statista, the number of voice commerce users worldwide will exceed 650 million by 2026, doubling from 2023.

- Gartner predicts that 30% of enterprise customer service interactions will be handled by voice-based AI by 2027.

- In healthcare, Accenture reports that automated voice systems will reduce administrative load by up to 45%, particularly in triage and patient outreach.

The takeaway is simple. Voice AI is not just growing. It is being embedded into critical systems that need accuracy, speed, and reliability. Platforms like the Graphlogic Generative AI Platform are already incorporating retrieval-augmented generation and real-time adaptation to meet these rising expectations.

Businesses that ignore voice AI risk falling behind, especially as users come to expect seamless, voice-enabled support as the default, not the exception.

FAQ

Voice agents can work in bilingual or multilingual contexts, but only if trained properly. Some platforms allow custom language models to improve accuracy in these settings.

Reputable platforms encrypt both live and stored voice data. Always ask vendors how they handle storage and deletion under privacy laws.

Voice agents can pay off even at a small scale. For simple use cases like order tracking or appointment booking, even a small pilot project can cut costs and improve service.

Voice agents can simulate tone, but they do not truly understand emotion. In sensitive settings, it is better to use voice AI only for routing or support, not for crisis care.

To get started, use lightweight tools like the Graphlogic Voice Box API to test single features. Start with one task and expand gradually.