Automatic Speech Recognition is no longer just a feature in digital assistants. In 2025 it supports healthcare, law, education, media, customer service, and even agriculture. The global market is projected to reach $40 billion this year with growth above 17% annually.

This article compares three leading open source ASR models: Whisper, Facebook wav2vec2, and Kaldi. We will examine their accuracy, training data, hardware demands, and best use cases to help you choose the right system for research, business, or education.

Why speech recognition models matter in 2025

The growth is not only about money. It is also about access and necessity. In healthcare, more than 70% of providers in the United States now use speech recognition in electronic health record systems. This reduces the administrative burden on doctors, cuts down transcription costs, and improves accuracy of patient data. In education, lecture transcription allows millions of students to revisit content, supporting both remote learning and accessibility for students with hearing impairments. Streaming companies like Netflix or YouTube rely on ASR to scale captioning across languages. Governments use it for parliamentary records.

The pandemic years accelerated the adoption curve. Suddenly every meeting, class, and consultation could be remote. Accurate speech recognition was no longer optional. Open source models made this acceleration possible. Before 2019, proprietary systems dominated the field, often costing millions of dollars in licensing. By 2025, developers can download free, high-performing models from GitHub or Hugging Face and deploy them within hours.

Three names dominate discussions about open source ASR. Whisper from OpenAI, Facebook wav2vec2 from Meta AI, and Kaldi, the veteran toolkit developed in 2011. Each has different strengths, and each faces different weaknesses. Some are easy to use but resource heavy. Others are highly customizable but technically complex. Understanding these differences is not only an academic exercise. Choosing the wrong tool can increase costs, slow projects, and reduce accuracy in critical applications like healthcare or law enforcement.

What is an ASR model

An ASR model is software that translates spoken words into written text. At its core it is a pattern recognition system. It takes raw sound waves, segments them into frames, extracts features, and then maps those features to phonemes and words. In practice modern ASR systems are much more complex. They use deep neural networks that process the entire waveform and learn patterns from massive datasets.

Older ASR systems from the early 2000s often used Hidden Markov Models combined with Gaussian Mixture Models. They required handcrafted feature extraction, often using Mel Frequency Cepstral Coefficients (MFCCs). These systems were brittle and struggled with accents or noise. By the mid 2010s deep learning revolutionized ASR. Neural networks such as recurrent neural networks (RNNs) and later transformers could learn representations directly from raw audio.

Open source ASR democratized this progress. Instead of relying on expensive commercial APIs, any developer could now train, test, and deploy their own models. This opened the field to startups, universities, NGOs, and hospitals. For example, rural clinics in India now use open source ASR for local dialect transcription. Universities in Africa use it to preserve endangered languages.

ASR models are not just about converting speech into text. They are about enabling accessibility, preserving cultural diversity, and reducing inequality in access to digital services. This broader role makes benchmarking even more important. If accuracy is low, marginalized groups are left behind. If deployment is too costly, smaller organizations cannot benefit.

Overview of Whisper, Facebook wav2vec2, and Kaldi

Whisper

Whisper was released by OpenAI in 2022. It is based on a transformer architecture and was trained on 680,000 hours of audio scraped from the web. The dataset was diverse in both quality and content, which is unusual in ASR. This makes Whisper robust in noisy conditions and capable of handling heavy accents. Unlike older models that focused mainly on English, Whisper supports over 90 languages out of the box. It can also perform speech translation, converting spoken words in one language into text in another.

Whisper is widely used for podcast transcription, video subtitling, and accessibility projects. In 2023–2025 it became popular in journalism, where it helps transcribe interviews in multiple languages quickly. It is also used in healthcare for multilingual patient records. The main drawback is computational cost. Large models require GPUs with high memory and can be slow in real time.

Facebook wav2vec2

wav2vec2 was introduced by Meta AI in 2020. It represents a major shift in how speech recognition models are trained. Instead of requiring large labeled datasets, wav2vec2 uses self supervised pretraining. It was trained on 53,000 hours of unlabeled speech from the LibriLight dataset. After pretraining, the model can be fine tuned on smaller labeled datasets for specific tasks.

This approach drastically reduced the need for manual transcription. It also improved accuracy. wav2vec2 regularly achieves Word Error Rates below 5% on English datasets like LibriSpeech. It can be fine tuned for medical transcription, call center data, or legal speech. This flexibility has made it a favorite in both academia and industry. However, its multilingual coverage is weaker compared to Whisper.

Kaldi

Kaldi is the oldest of the three and one of the most cited tools in speech recognition research. Released in 2011, it is not a single model but a complete toolkit. Kaldi provides recipes, libraries, and scripts to build ASR systems from scratch. It supports Gaussian Mixture Models, Hidden Markov Models, and neural networks. Its modular architecture makes it extremely flexible.

Kaldi remains widely used in universities for teaching and experimentation. It has powered dozens of research papers and many production systems. Its main advantage is customizability. A skilled engineer can control every aspect of the pipeline, from feature extraction to decoding. The drawback is complexity. Kaldi has a steep learning curve and is less user friendly than Whisper or wav2vec2.

Key features of each model

When comparing Whisper, wav2vec2, and Kaldi it is important to look at what sets them apart in daily use.

Whisper offers multilingual coverage across more than 90 languages. It is robust against heavy accents and background noise because it was trained on 680,000 hours of real and often imperfect audio. Whisper also integrates translation. It can take spoken Spanish and output English text directly. That dual ability to transcribe and translate makes it appealing for global newsrooms and multinational organizations. However, its large versions require GPUs with 16 GB of VRAM, which is out of reach for many small teams.

Facebook wav2vec2 is built around self supervised pretraining on 53,000 hours of speech data. The key feature is fine tuning. With as little as 10 hours of labeled audio from a hospital or call center, wav2vec2 can specialize in that domain. This makes it extremely efficient in terms of labeled data needs. It regularly reaches Word Error Rates under 5% on clean English test sets. However, it is less prepared for multilingual work out of the box.

Kaldi stands apart because it is not a prebuilt model but a toolkit. It lets researchers mix Gaussian Mixture Models with Deep Neural Networks. Its recipes have been used in hundreds of published papers. Kaldi’s modular design means it can integrate with older pipelines or cutting edge research. Many universities still teach ASR using Kaldi because it forces students to understand every stage. The drawback is that even a basic setup can take weeks. Unlike Whisper or wav2vec2, Kaldi does not come with ready to use models.

Performance metrics for ASR

Evaluating ASR requires a consistent framework. The three main metrics are Word Error Rate, Real time Factor, and resource efficiency.

Word Error Rate (WER) is the most common. A WER of 5% means that in a sample of 100 words, 5 are wrong. Whisper averages around 6–7% on multilingual benchmarks, wav2vec2 often drops below 5% for English, and Kaldi can reach similar levels if tuned properly.

Real time Factor (RTF) shows how long it takes to process audio. A value of 1.0 means that one minute of audio requires one minute to process. Whisper small models run near 0.6 on consumer GPUs, wav2vec2 base models near 0.3, and Kaldi recipes vary from 0.4 to 1.0.

Resource efficiency is becoming more important as energy costs rise. Training wav2vec2 on 1,000 hours of labeled data can cost $10,000 in cloud GPUs. Whisper large requires GPUs with 16 GB of VRAM for smooth use. Kaldi can run on CPUs, but training takes much longer.

Benchmarking results in 2025

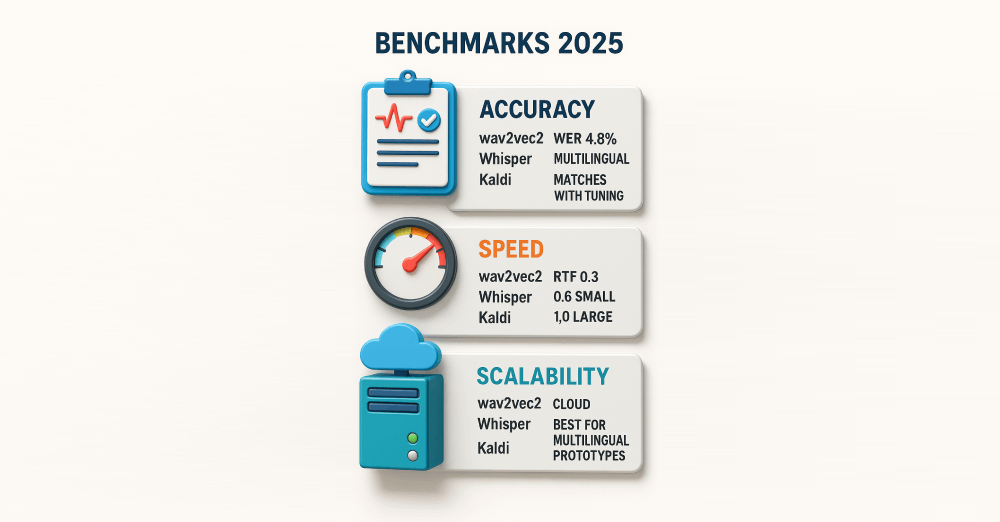

Benchmarks in 2025 confirm the strengths and weaknesses of the three systems.

Accuracy: wav2vec2 is consistently top for English. On the LibriSpeech test set it reaches WER as low as 4.8%. Whisper holds its own with WER around 6–7% but has the advantage of multilingual robustness. Kaldi can match these numbers if tuned with domain data but usually requires far more engineering hours.

Speed: wav2vec2 base runs at RTF around 0.3 on standard GPUs, making it efficient for real time transcription. Whisper small models run around 0.6, while larger ones can slow to 1.0. Kaldi speed is inconsistent and heavily dependent on the chosen pipeline.

Scalability: wav2vec2 integrates into modern pipelines with minimal friction. It is popular in cloud services and APIs. Whisper is widely used for prototyping multilingual systems. Kaldi remains strong in research labs but is less common in enterprise production.

Training data and fine tuning

The training data behind each model explains many of their differences.

Whisper used 680,000 hours of audio scraped from the web. This included not only clean recordings but also noisy, accented, and multilingual data. As a result Whisper is robust in real environments such as hospitals or crowded classrooms. Fine tuning Whisper is possible but less common, since the base models already generalize well.

wav2vec2 was pretrained on 53,000 hours of LibriLight, which is mostly clean audiobook speech. This explains its strong performance on datasets like LibriSpeech. Fine tuning is critical for wav2vec2 when applied to noisier or domain specific tasks. A hospital might fine tune with 10 hours of labeled radiology dictation and achieve usable performance.

Kaldi does not ship with pretrained models. Users must collect their own datasets. This is why it is popular in research where teams experiment with custom corpora. Kaldi recipes have been applied to everything from 1,000 hours of English speech to small endangered language datasets of only 50 hours.

Fine tuning costs are nontrivial. Training wav2vec2 on a dataset of 1,000 hours may cost $10,000–15,000 in cloud GPU resources. Whisper fine tuning is more resource intensive. Kaldi can be cheaper in raw compute but far more expensive in engineering time.

Hardware requirements

Hardware determines whether a model is practical for a team.

Whisper large requires GPUs with at least 16 GB of VRAM for smooth inference. Even Whisper small can struggle on consumer laptops. This limits its real time use for small clinics or schools.

wav2vec2 base can run on GPUs with 8 GB of VRAM. This makes it easier to deploy widely. It can also be quantized to run on CPUs, though at slower speeds.

Kaldi is flexible. It can be optimized for CPU clusters, which was common before large GPU clusters became affordable. However, training deep neural networks with Kaldi on CPUs is extremely slow.

Enterprises often choose hosted APIs to avoid managing this hardware. The Graphlogic Speech to Text API is one example. It builds on open source models but removes the need to own and maintain expensive GPUs.

Use cases for each model

Choosing the right ASR model depends heavily on the setting and requirements.

Whisper is often the first choice for multilingual applications. Newsrooms use it to transcribe interviews in 90 languages. Subtitling platforms rely on it to scale across regions. In healthcare, hospitals in Europe deploy Whisper for patient intake since patients often speak multiple languages in one session. Journalists use it to quickly produce transcripts from field recordings with background noise.

Facebook wav2vec2 is powerful in domains with consistent audio quality. Hospitals fine tune it with 10 hours of medical recordings to build transcription systems that recognize specialized terms. Financial companies use it to process customer calls, where accuracy in English is critical. Customer service platforms prefer wav2vec2 for its balance of speed and accuracy.

Kaldi is favored in academic research and experimental deployments. It is used to test new decoding algorithms, study low resource languages, and build experimental hybrid systems that combine traditional and neural approaches. Universities often train students on Kaldi so they can understand ASR at the level of features, phonemes, and decoding graphs.

Advantages and limitations

Each ASR model comes with clear strengths and trade-offs. Whisper is convenient for multilingual work but can be heavy on resources. Facebook wav2vec2 is precise and efficient in English but requires tuning for other conditions. Kaldi offers unmatched flexibility yet demands high technical skill. The table below shows these points side by side.

| Model | Advantages | Limitations |

| Whisper | Multilingual out of the box, robust against accents, trained on 680,000 hours of varied audio, integrates speech translation | Requires GPUs with 16 GB of VRAM for large models, can be too slow for real time transcription in resource limited environments |

| Facebook wav2vec2 | Pretrained on 53,000 hours of audio, requires less labeled data for fine tuning, achieves WER under 5% in English, efficient for domain specific systems | Weaker multilingual performance out of the box, requires domain tuning for noisy conditions, fine tuning can cost $10,000 or more for large datasets |

| Kaldi | Extreme flexibility, ability to combine classic and modern methods, used in research for over a decade, strong community | Steep learning curve, no ready pretrained models, deployment often takes weeks, resource use depends heavily on engineering skill |

How to choose the right ASR model

Selection depends on context.

- In healthcare accuracy matters most. wav2vec2 fine tuned on 10 hours of radiology data can reach usable levels for clinical documentation. Whisper can also serve but may require more hardware.

- In education multilingual adaptability is key. Whisper supports 90 languages and is ideal for international classrooms.

- In research reproducibility and flexibility are central. Kaldi allows experiments with decoding graphs and phoneme models that newer systems hide.

- In customer service speed and scale dominate. wav2vec2 base models running at RTF 0.3 process calls in real time.

When scaling beyond prototypes many teams prefer APIs. The Graphlogic Speech to Text API provides enterprise deployment without hardware management. For conversational systems the Graphlogic Generative AI and Conversational Platform merges speech recognition with dialogue.

Challenges in benchmarking

Benchmarking ASR models is never straightforward.

Audio quality variability is a major challenge. Public benchmarks like LibriSpeech contain clean audiobook recordings. Real environments have overlapping speech, coughs, and background noise.

Dialects and accents also complicate evaluation. A model that performs at 5% WER in American English may fail with Scottish or Indian English. Whisper trained on 680,000 hours is better prepared but still far from perfect.

Dataset differences matter. wav2vec2 pretraining on 53,000 hours of audiobook speech explains its strength in LibriSpeech benchmarks but also its weakness in noisy call center data.

Hardware differences make results hard to compare. An RTF of 0.3 on one GPU may be 0.6 on another.

Reproducibility is another issue. Kaldi recipes require careful parameter tuning. Two teams running the same recipe may report different results.

Trends and forecasts for 2025 and beyond

Several trends define the future of ASR.

- Low resource languages: More than 2,500 languages are at risk of disappearing. Open source ASR projects now train on small corpora to preserve them. Whisper’s multilingual training data helps, but specialized datasets are needed.

- Medical transcription: The medical transcription market is projected to grow to $6 billion by 2030. Hospitals demand HIPAA compliant ASR with near perfect accuracy. wav2vec2 fine tuned on specialty data is already used in radiology and cardiology.

- Green AI: Training large models consumes hundreds of MWh. Efficiency is now a research priority. Teams optimize RTF and reduce GPU hours to cut energy bills.

- Edge deployment: Smaller versions of wav2vec2 and Whisper now run on devices with 8 GB of VRAM. This allows offline transcription without cloud dependence. For rural clinics or military use cases, this is transformative.

- Voice security: ASR is merging with biometrics to enable authentication. This creates both opportunity and risk. Accuracy in recognizing speech content must be balanced with accuracy in verifying identity.

- Hybrid systems: Kaldi’s modularity allows blending symbolic approaches with modern neural architectures. These hybrids may outperform purely end to end models in efficiency.

Key points to remember

- ASR converts audio into text using deep learning.

- Whisper was trained on 680,000 hours of diverse multilingual data.

- wav2vec2 was pretrained on 53,000 hours of audiobook speech and fine tuned for domains.

- Kaldi is a flexible toolkit used in research since 2011.

- Performance metrics include Word Error Rate, Real time Factor, and resource use.

- Whisper is best for multilingual, wav2vec2 for domain accuracy, Kaldi for research.

- Future trends include efficiency, edge deployment, and support for low resource languages.

Practical advice for teams

- Start small. Test open source models with your own audio before scaling.

- Do not trust benchmarks alone. Real world noise and accents reduce accuracy.

- Estimate infrastructure costs early. Whisper large may cost $500 per month per GPU in cloud services.

- Use APIs when speed to market matters. The Graphlogic Speech to Text API removes hardware concerns.

- For sensitive domains such as medicine or law, prioritize privacy with on premise deployments.

- Fine tune wav2vec2 with domain specific audio to gain accuracy. Even 10 hours of labeled speech can improve performance significantly.

- Monitor community progress. Open source projects evolve quickly. New checkpoints can cut Word Error Rate by several percent in months.

FAQ

It is software that converts speech into text using acoustic and language models.

wav2vec2 usually has the lowest Word Error Rate in English with results below 5%. Whisper is more robust in multilingual settings.

Use shared datasets such as LibriSpeech and CommonVoice. Measure Word Error Rate and Real time Factor on identical hardware.

Whisper works for multilingual transcription. wav2vec2 is strong in domains like medicine and customer service. Kaldi fits research and experiments.

Yes. Hospitals, financial companies, and call centers already deploy them. Many rely on hosted APIs for scale.

Running Whisper large on cloud GPUs with 16 GB VRAM can cost hundreds of $ monthly for high volume transcription. Fine tuning wav2vec2 on 1,000 hours of data can reach $10,000 in compute.

Privacy depends on deployment. On premise Kaldi installations are more secure. Cloud APIs may log audio unless agreements specify otherwise.

Yes. wav2vec2 fine tuned on clinical datasets achieves WER below 8%. Whisper is also strong in noisy patient interviews.

Very important. Whisper large requires GPUs with 16 GB VRAM. wav2vec2 base runs on 8 GB. Kaldi can run on CPUs but training is much slower.

Kaldi has the longest academic tradition. Whisper and wav2vec2 have active developer communities on GitHub and Hugging Face.

Yes. Modern APIs combine ASR with dialogue. Platforms like the Graphlogic Generative AI and Conversational Platform connect transcription with natural responses.