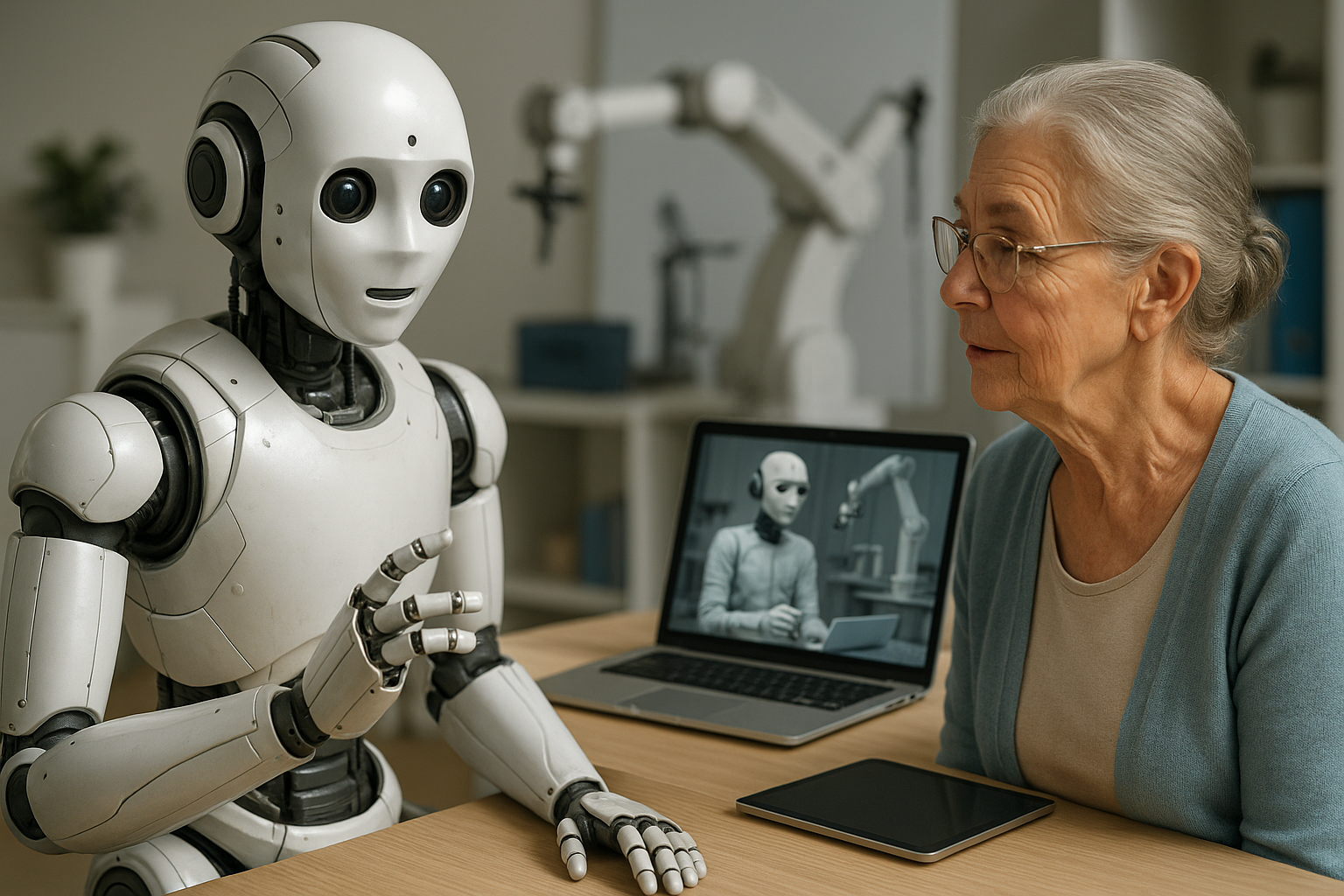

The emergence of AI robots that integrate large language models (LLMs) marks a shift in robotics. Instead of rigid machines following fixed scripts, robots can now parse language, sense context, and act with more autonomy. These systems are moving toward being partners rather than tools in health care, homes, labs, and factories.

This article discusses how LLMs transform robotic architecture, surveys major research such as PaLM-E, reviews real systems, examines open challenges, offers technical insights and practical advice, and outlines trends and forecasts. The goal is to present an expert but accessible view of this evolving field. It also references two relevant products: Graphlogic Generative AI & Conversational Platform and Graphlogic Text-to-Speech API.

Why Language Matters in Robotic Intelligence

Earlier robots required precise commands such as “move” or “lift” and lacked flexibility. If an unexpected obstacle appeared, they could fail or freeze. By adding LLMs, robots can interpret instructions in natural speech or text, infer intent, and adapt.

Consider the phrase “Clean up the spill before someone trips.” A robot must detect what a spill is, locate it, choose tools, and plan a path. This is more than spatial computation. It requires reasoning about human safety. LLMs provide that reasoning layer.

Google introduced PaLM-E, a language model extended to ingest sensor data and produce robot plans. It fuses visual, textual, and state inputs to generate action sequences. In evaluation, PaLM-E solved embodied reasoning tasks while retaining its language skills, and joint training on vision and language data showed positive transfer that improved robotic tasks. Some variants reportedly achieved 94.9% success on task and motion planning benchmarks without task-specific fine-tuning.

The value of LLMs in robotics lies not only in language but in grounding language in perception and action.

Evolution of Interaction: From Buttons to Conversational Agents

To see how far the field has come, consider the evolution of human-robot interaction. Early robots of the 1950s and 1960s used buttons and switches. Interaction required technical expertise. In the 1980s, voice recognition enabled simple commands such as “move forward.” But vocabulary was limited and error rates were high. Later, natural language processing allowed more flexible commands, yet robots still lacked reasoning and adaptability.

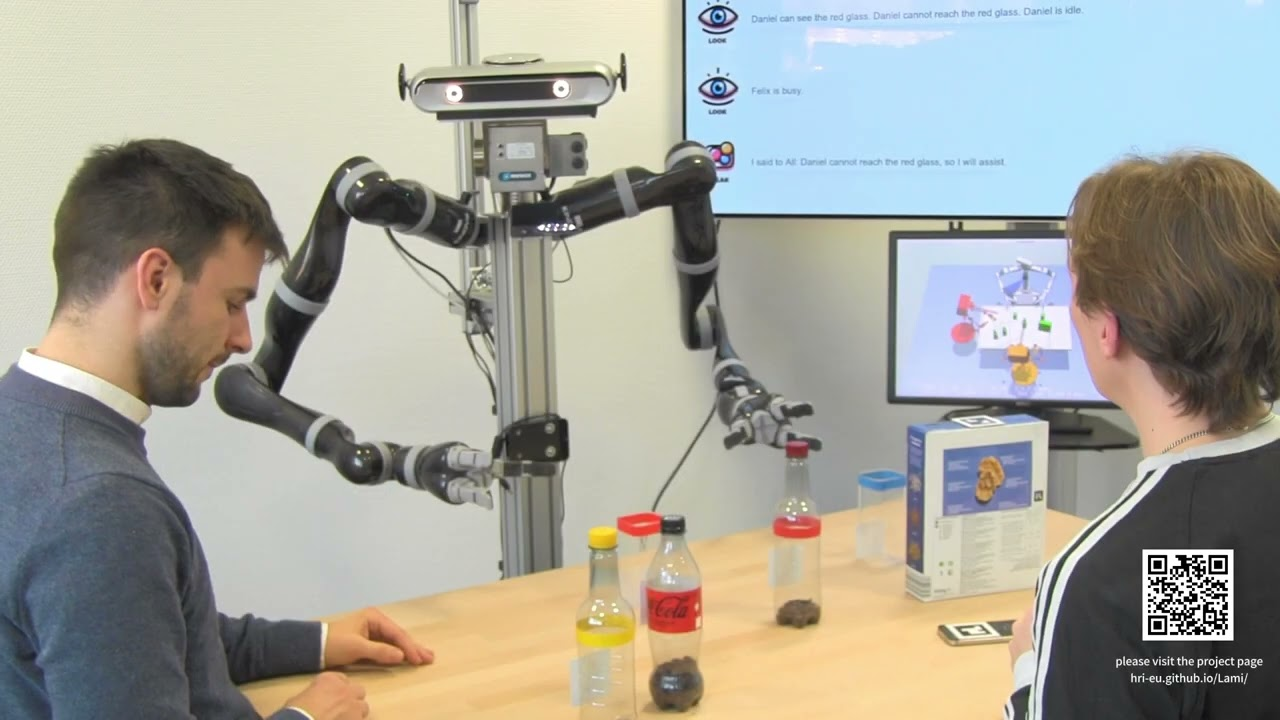

With LLMs, robots can hold dialogues, ask for clarification, explain reasoning, revise plans, and adapt as context changes. Robots move from executors of code toward dialogue agents embedded in the world.

Key Architecture: How Language Models Enter Robotics

Integrating large language models (LLMs) into robotics requires a layered architecture consisting of sensor encoding, multimodal fusion, LLM core, executor, and feedback loop. Each layer contributes to transforming raw sensory input into intelligent, context-aware robotic actions.

- Sensor Encoding: Robots first translate raw environmental inputs — such as visual data, tactile signals, and spatial measurements — into high-dimensional vector representations. This process allows non-linguistic information to be expressed in a form compatible with LLM embeddings.

- Multimodal Fusion: These encoded sensory vectors are integrated with textual and contextual embeddings, creating a unified multimodal representation. This allows the model to reason jointly over perception and language, enabling understanding of tasks like “pick up the red mug on the table.”

- LLM Core: The central language model processes these fused embeddings as if they were extended tokens, generating structured text outputs that represent task plans or reasoning steps. In PaLM-E, for instance, visual tokens and linguistic tokens are interleaved, allowing the model to operate seamlessly across domains.

- Executor: The generated text is parsed into executable commands or motion primitives for the robot’s control system. This step grounds the linguistic reasoning into physical actions — such as navigation, object manipulation, or communication.

- Feedback Loop: Continuous environmental feedback allows for dynamic re-evaluation. The robot can adjust its plan when encountering new obstacles, incorrect actions, or user interventions.

A key insight of this architecture is that the same LLM core can generalize across multiple robot embodiments (drones, manipulators, or mobile assistants) rather than requiring a separate model for each. This reuse can lower development costs and speed up adaptation to new platforms. PaLM-E exemplifies this with a unified system spanning visual understanding and robotic control, evaluated on both simulated and real-world robots.

The architecture supports multi-step reasoning, situational adaptation, and natural human-robot dialogue, allowing robots to explain their decisions, clarify instructions, and continuously learn from interaction.
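
The five layers can be sketched as a small loop. This is a toy illustration under stated assumptions, not PaLM-E's implementation: `stub_llm` stands in for a real model, the "fusion" step merely serializes features next to the text, and every name here (`Observation`, `encode_sensors`, `fuse`, `parse_plan`, `run`) is hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Step = Tuple[str, str]  # (verb, argument), e.g. ("grasp", "mug")

@dataclass
class Observation:
    features: List[float]  # stand-in for an encoded camera frame
    instruction: str       # natural-language request

def encode_sensors(raw: List[int]) -> List[float]:
    """Sensor encoding: scale raw 0-255 readings to [0, 1]."""
    return [v / 255.0 for v in raw]

def fuse(obs: Observation) -> str:
    """Multimodal fusion (toy): serialize features next to the text."""
    feats = ",".join(f"{x:.2f}" for x in obs.features)
    return f"<obs:{feats}> {obs.instruction}"

def stub_llm(prompt: str) -> str:
    """Placeholder for the LLM core; always returns the same text plan."""
    return "navigate kitchen; grasp mug; deliver user"

def parse_plan(text: str) -> List[Step]:
    """Executor front end: 'verb arg; verb arg' -> [(verb, arg), ...]."""
    steps = []
    for chunk in text.split(";"):
        parts = chunk.split(maxsplit=1)
        if len(parts) == 2:
            steps.append((parts[0], parts[1]))
    return steps

def run(obs, llm, act, max_replans: int = 2):
    """Feedback loop: on a failed step, ask for a fresh plan and retry."""
    log = []
    for _ in range(max_replans + 1):
        plan = parse_plan(llm(fuse(obs)))
        if not plan:
            return False, log      # nothing executable came back
        for step in plan:
            ok = act(step)
            log.append((step, ok))
            if not ok:
                break              # abandon this plan and re-plan
        else:
            return True, log       # every step succeeded
    return False, log
```

Here `act` is whatever callable drives the robot's controller and reports success or failure per step. A real system would feed the updated observation back into the prompt on each re-plan rather than reusing the same one.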

Real Systems in Practice

Modern robotics research and commercial applications already embody these principles.

- Amazon Astro integrates navigation, perception, and a conversational interface in a consumer-grade home robot. It extends beyond simple motion by adding contextual understanding and safety awareness, for instance detecting the sound of breaking glass or an unrecognized person and alerting the user. Its interface blends language understanding with sensor-driven hazard detection, a step toward general-purpose domestic autonomy.

- Google DeepMind’s RT-2 (Robotics Transformer 2) is a vision-language-action model that uses both image and text inputs to predict robot actions. It merges perception, reasoning, and control into a single transformer framework. By grounding visual understanding in language-based reasoning, RT-2 generalizes to unseen tasks: it performs actions that were never explicitly demonstrated in its robot training data but can be inferred through multimodal reasoning.

- Emerging research, such as “Towards End-to-End Embodied Decision Making via Multimodal LLMs,” extends this direction by directly connecting models like GPT-4-Vision to embodied agents. The study introduced a decision-making benchmark on which GPT-4-Vision outperformed a hybrid perception-planning framework by 3% in decision accuracy, a modest but measurable gain for the end-to-end approach.

These examples confirm that embodied reasoning using multimodal LLMs is not theoretical — it is an active and rapidly advancing research frontier, merging perception, cognition, and control into unified, language-driven systems.

Platforms and Toolkits Driving Innovation

Beyond large research institutions, toolkits and platforms are democratizing access to these architectures.

The Graphlogic Generative AI & Conversational Platform enables developers to build robotic and conversational systems that integrate reasoning, retrieval, and planning modules. By providing a pipeline-based interface, teams can incorporate domain-specific logic, retrieve external data, and dynamically extend robot capabilities through API-based integration.

Complementing this, the Graphlogic Text-to-Speech (TTS) API transforms generated text into natural, expressive speech, giving robots lifelike communication abilities and completing the human-robot communication loop.

Such platforms allow robotics teams to focus on physical control, hardware design, and task execution, while outsourcing dialogue management and reasoning to cloud-based LLM frameworks. Furthermore, retrieval-augmented generation (RAG) modules empower robots to access real-time knowledge — from maintenance logs to current weather or traffic conditions — instead of relying on static onboard memory.

Together, these systems form a new ecosystem of modular, multimodal robotics driven by language-based intelligence — where perception, reasoning, and communication converge into a single, adaptable framework capable of operating across diverse environments and robot types.

Challenges That Must Be Solved

Privacy and Security

Robots often collect sensitive data such as voice, images, or patient information. Ensuring secure storage, encrypted transmission, and local processing is essential. In healthcare or domestic use, privacy safeguards are mandatory.

Bias and Ethics

LLMs inherit biases from training data. If deployed in robotics, these biases can influence navigation or task decisions. The NIST SP 1270 report explains that bias is socio-technical, emerging from data, algorithms, and human design. It categorizes bias into systemic, statistical, and human forms.

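NIST IR 8269, a draft taxonomy and terminology of adversarial machine learning, addresses a related concern: attacks on and defenses of machine learning systems. It does not itself define a bias test method, so bias evaluation should build on SP 1270 together with domain-specific testing. Applying such standards is vital for safe and fair robot behavior.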

Language Complexity

Humans speak with idioms, sarcasm, and cultural cues. Robots can misinterpret such nuances. A casual “sure, whatever” might not mean approval. Handling ambiguity is still difficult for LLMs.

Real-Time Performance

LLMs are computationally heavy, while robots often need to respond within a fraction of a second. Latency can be reduced with model distillation, quantization, or on-device processing.
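
To make the quantization option concrete, here is a minimal sketch of symmetric per-tensor int8 quantization plus a back-of-the-envelope estimate of weight memory. It is written in pure Python for readability; production toolchains use per-channel scales, calibration data, and optimized kernels. The function names are illustrative, not from any library.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero
    scale = max_abs / 127.0
    return [round(w / scale) for w in weights], scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]

def weight_memory_gb(n_params: float, bits: int) -> float:
    """Approximate storage for weights only (no activations or caches)."""
    return n_params * bits / 8 / 1e9
```

Under this estimate, a hypothetical 7-billion-parameter model needs about 14 GB for weights at 16 bits and about 7 GB at 8 bits, which is often the difference between fitting on an embedded accelerator and not. The price is a rounding error of at most half a quantization step per weight.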

Safety and Robustness

Robots must default to safe states when uncertain. Explainable outputs help humans intervene. Fallback behavior should be part of design.
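
One simple way to express "default to a safe state when uncertain" is a confidence gate in front of the executor. The sketch below assumes the planner can attach a calibrated confidence score to each proposed action, which is itself a hard problem; the threshold value and all names are hypothetical.

```python
from dataclasses import dataclass

SAFE_STOP = "stop_and_ask"  # hypothetical safe action: halt and request help

@dataclass
class Proposal:
    action: str
    confidence: float  # assumed to be a calibrated score in [0, 1]
    rationale: str     # short explanation a human can inspect

def gate(p: Proposal, threshold: float = 0.8) -> str:
    """Return the proposed action only if confidence is valid and high enough."""
    if not (0.0 <= p.confidence <= 1.0):  # also rejects NaN
        return SAFE_STOP
    return p.action if p.confidence >= threshold else SAFE_STOP
```

Keeping the `rationale` alongside the action gives an operator something to read when the gate triggers, which is where explainable output and fallback behavior meet.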

Transfer and Generalization

Many models still overfit to a specific robot or environment. True generalization across domains is still limited, though systems like PaLM-E begin to address it.

Continual Learning

Robots need to adapt while deployed. Updating without compromising safety or stability remains a key challenge. Modular architectures and controlled fine-tuning are partial solutions.

Trends and Forecasts in AI Robotics

AI-driven robotics is rapidly evolving from scripted automation toward adaptive, multimodal intelligence. The trends shaping this transformation involve not only advances in model architecture but also in deployment strategy, safety, and human-robot interaction.

Edge Multimodal Models

A defining shift is the move from cloud-based inference to on-device computation. By processing perception and reasoning directly on robot hardware, latency is drastically reduced, reaction times improve, and robots become more resilient to connectivity issues. This architectural evolution increases autonomy and preserves privacy — both crucial for personal, medical, and industrial robots.

Emotional Intelligence

Future robots will integrate affective computing to sense tone, emotion, and body language. Detecting mood and emotional state enables empathetic and context-aware interactions. This will enhance communication quality in care, therapy, education, and customer service scenarios, allowing robots to engage naturally and supportively with humans.

Explainable Reasoning

Transparency in AI decisions is becoming essential. Robots will narrate their internal reasoning — explaining why a particular path, object, or tool was chosen. This explainability builds user trust, supports accountability, and allows humans to correct or teach the system through natural conversation rather than technical intervention.

Cross-Domain Generalization

One of the most transformative trends is developing unified models that work across multiple robot embodiments — drones, manipulators, or mobile assistants — using a single LLM-based core. This reduces the need to train separate models for each robot type, cutting costs, shortening development time, and enabling consistent reasoning across platforms.

Hybrid Symbolic and Neural Models

Combining symbolic reasoning with neural perception bridges logic and learning. Neural components handle complex sensory understanding, while symbolic structures enforce rules and causal logic. This hybrid approach reduces hallucinations, increases reliability, and enables robots to follow ethical or safety constraints more consistently.
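
As a rough illustration of the symbolic half, the sketch below checks a plan proposed by a neural model against hand-written preconditions and effects before anything moves. The rule table and action names are invented for the example; real systems typically use a planning language such as PDDL or a formal safety monitor.

```python
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical rules: what must hold before an action, and what it changes.
RULES: Dict[str, Dict[str, Set[str]]] = {
    "open_gripper": {"requires": set(), "adds": {"gripper_open"}, "removes": {"holding"}},
    "grasp": {"requires": {"gripper_open"}, "adds": {"holding"}, "removes": {"gripper_open"}},
    "place": {"requires": {"holding"}, "adds": {"gripper_open"}, "removes": {"holding"}},
}

def check_plan(plan: List[str], initial: Iterable[str] = ()) -> Tuple[bool, str]:
    """Simulate the plan symbolically; reject it at the first violated rule."""
    facts = set(initial)
    for i, action in enumerate(plan):
        rule = RULES.get(action)
        if rule is None:
            return False, f"step {i}: unknown action '{action}'"
        missing = rule["requires"] - facts
        if missing:
            return False, f"step {i}: '{action}' needs {sorted(missing)}"
        facts = (facts - rule["removes"]) | rule["adds"]
    return True, "ok"
```

A plan that tries to place an object before grasping it is rejected with a readable reason, regardless of how plausible the generated text looked.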

Domain Specialization

Even as general models expand, domain-specific fine-tuning remains key. Robots in medicine, manufacturing, or logistics will use specialized multimodal models adapted for high-precision tasks. For example, surgical robots can interpret gestures and vital signals, while industrial robots can detect anomalies in production lines or manage hazardous environments safely.

Continual Learning

Robots of the near future will learn continuously from real-world experience. Instead of relying solely on pretraining, they will update their models dynamically as they interact with new environments, objects, and people. This lifelong learning capability ensures adaptability, making robots more flexible, robust, and responsive over time.

Outlook Toward 2030

By 2030, service robots will operate on embedded multimodal models that seamlessly integrate perception, reasoning, and communication. These systems will safely navigate homes, hospitals, and workplaces, understanding both the physical and emotional context of human environments. Robotics will move from isolated automation to general embodied intelligence, where robots think, adapt, and collaborate alongside people in everyday life.

Technical Insights and Expert Tips

Building reliable and adaptive AI-driven robotic systems requires a blend of architectural discipline, safety engineering, and data strategy. Below are detailed insights and best practices derived from cutting-edge research and applied robotics development.

Modular Pipelines

Design robotic systems using modular and decoupled pipelines so that updates or failures in one component (e.g., perception, reasoning, control) do not destabilize the entire system. This allows individual modules to be retrained, replaced, or optimized independently. Containerized microservices or ROS 2 nodes are effective patterns for maintaining flexibility and stability as systems evolve.
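
In plain Python, the same idea can be sketched with small interfaces and a pipeline that contains failures. This is a single-process illustration with hypothetical class names; ROS 2 nodes or containers provide the same isolation across processes.

```python
from typing import List, Protocol

class Perception(Protocol):
    def detect(self, frame: bytes) -> List[str]: ...

class Planner(Protocol):
    def plan(self, objects: List[str], goal: str) -> List[str]: ...

class StubPerception:
    def detect(self, frame: bytes) -> List[str]:
        return ["mug", "table"]  # fixed detections for the example

class RulePlanner:
    def plan(self, objects: List[str], goal: str) -> List[str]:
        return [f"grasp {goal}"] if goal in objects else ["ask_user"]

class BrokenPlanner:
    def plan(self, objects: List[str], goal: str) -> List[str]:
        raise RuntimeError("planner crashed")

class Pipeline:
    """Wires modules together; any module can be swapped independently."""
    def __init__(self, perception: Perception, planner: Planner):
        self.perception = perception
        self.planner = planner

    def step(self, frame: bytes, goal: str) -> List[str]:
        try:
            return self.planner.plan(self.perception.detect(frame), goal)
        except Exception:
            return ["stop"]  # a failing module degrades to a safe action
```

Swapping `RulePlanner` for an LLM-backed planner changes one constructor argument, and a crash in either module produces `["stop"]` instead of taking down the control loop.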

Few-Shot and Prompt Tuning

For language-based reasoning modules, prefer few-shot learning or prompt tuning over full model retraining. This approach significantly reduces compute cost and enables rapid adaptation to new tasks or vocabularies. Small prompt libraries can encode specific task instructions, domain constraints, or user preferences without modifying the model weights.
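
A prompt library can be as simple as a dictionary of worked examples per domain. The sketch below assembles a few-shot prompt from such a library; the example instructions, the primitive syntax, and the `build_prompt` helper are all made up for illustration.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical library: (instruction, plan) pairs grouped by domain.
PROMPT_LIBRARY: Dict[str, List[Tuple[str, str]]] = {
    "kitchen": [
        ("Bring me a glass of water.",
         "navigate(kitchen); grasp(glass); fill(glass); deliver(user)"),
        ("Wipe the counter.",
         "navigate(kitchen); grasp(cloth); wipe(counter)"),
    ],
}

def build_prompt(domain: str, instruction: str,
                 constraints: Sequence[str] = ()) -> str:
    """Compose task header, constraints, few-shot examples, and the new request."""
    lines = ["Translate the instruction into a plan of robot primitives."]
    lines += [f"Constraint: {c}" for c in constraints]
    for example_in, example_out in PROMPT_LIBRARY.get(domain, []):
        lines += [f"Instruction: {example_in}", f"Plan: {example_out}"]
    lines += [f"Instruction: {instruction}", "Plan:"]
    return "\n".join(lines)
```

Adapting the robot to a new room or vocabulary then means adding entries to the library, with no change to model weights.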

Mixed Training Data

Train with heterogeneous datasets that include both embodied (sensorimotor) and abstract (symbolic or linguistic) tasks. As confirmed in PaLM-E’s evaluation, combining grounded and abstract data improves generalization between reasoning and perception. This hybrid training enables a robot to not only execute actions but also explain or justify them in human terms.

Rollback and Safety Mechanisms

Implement rollback systems that allow robots to revert to a safe state if an action sequence fails or becomes uncertain. Safety checkpoints, watchdog timers, and sensor-based anomaly detection can trigger controlled resets or human intervention. Such redundancy is essential for autonomous systems operating in public or medical environments.
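
The checkpoint-and-revert pattern can be sketched as follows. Note the assumption: this restores the software's view of the world (a state dictionary), while a physical robot would additionally need reverse motions or a homing routine. `RollbackExecutor` is a hypothetical name.

```python
import copy
from typing import Callable, Dict, List

class RollbackExecutor:
    """Runs a sequence of steps; restores the last checkpoint if any step fails."""

    def __init__(self, state: Dict):
        self.state = state
        self._checkpoints: List[Dict] = []

    def checkpoint(self) -> None:
        self._checkpoints.append(copy.deepcopy(self.state))

    def rollback(self) -> None:
        if self._checkpoints:
            self.state = self._checkpoints.pop()

    def run(self, steps: List[Callable[[Dict], None]]) -> bool:
        self.checkpoint()
        try:
            for step in steps:
                step(self.state)       # each step mutates the state in place
        except Exception:
            self.rollback()            # revert to the known-good snapshot
            return False
        self._checkpoints.pop()        # success: discard the snapshot
        return True
```

A caller that receives `False` knows the state is back at the checkpoint and can escalate to a human or try an alternative plan.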

Provocation and Edge-Case Testing

Include provocation tests that intentionally challenge the system with rare, ambiguous, or adversarial inputs. This ensures robustness in edge conditions like partial sensor failure, conflicting commands, or unpredictable human behavior. Test suites should simulate environmental noise, occlusion, and unexpected stimuli to validate real-world reliability.
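
A provocation suite can start small. The sketch below degrades range readings with random dropouts and a handful of hand-picked edge cases, then checks one safety property: the toy obstacle check must never report a clear path when data is missing or invalid. The function under test and the property are assumptions chosen for the example.

```python
import random
from typing import List, Optional

def is_path_clear(readings: List[Optional[float]],
                  min_clearance: float = 0.5) -> bool:
    """Toy system under test: proceed only if every reading is valid and far enough."""
    return bool(readings) and all(
        r is not None and r >= min_clearance for r in readings
    )

def occlude(readings: List[float], fraction: float,
            rng: random.Random) -> List[Optional[float]]:
    """Simulate sensor dropout by replacing a share of readings with None."""
    return [None if rng.random() < fraction else r for r in readings]

def run_provocations(trials: int = 100, seed: int = 0) -> List[str]:
    """Return a list of property violations; an empty list means all checks passed."""
    rng = random.Random(seed)
    violations = []
    for i in range(trials):
        clear_scene = [rng.uniform(0.6, 5.0) for _ in range(8)]
        degraded = occlude(clear_scene, rng.choice([0.1, 0.5, 1.0]), rng)
        if None in degraded and is_path_clear(degraded):
            violations.append(f"trial {i}: reported clear with missing readings")
    for edge in ([], [None], [1.0, float("nan")], [-1.0]):
        if is_path_clear(edge):
            violations.append(f"edge case {edge!r}: reported clear")
    return violations
```

The fixed seed keeps failures reproducible. The NaN case is worth including because a naive `min(readings) >= min_clearance` check can silently ignore a NaN that is not in first position.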

Verified Language Subsets

In regulated domains such as healthcare or clinical robotics, use verified and restricted language subsets. This means constraining the LLM’s output to medically validated terminology or pre-approved command structures. Doing so minimizes misinterpretation, reduces liability, and ensures compliance with domain-specific safety standards.
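
One lightweight way to enforce a restricted command set is to validate every model output against an allowlist before it reaches the executor. The vocabulary below is a made-up example, not a clinically validated one, and the `verb(argument)` syntax is an assumption.

```python
import re
from typing import Dict, Optional, Set, Tuple

# Hypothetical pre-approved vocabulary: verb -> permitted arguments.
ALLOWED: Dict[str, Set[str]] = {
    "remind": {"medication", "hydration"},
    "fetch": {"walker", "water"},
    "call": {"nurse"},
}
COMMAND_RE = re.compile(r"([a-z_]+)\(([a-z_]+)\)")

def validate(command: str) -> Optional[Tuple[str, str]]:
    """Return (verb, argument) if the command is in the approved subset, else None."""
    match = COMMAND_RE.fullmatch(command.strip())
    if match is None:
        return None
    verb, arg = match.groups()
    return (verb, arg) if arg in ALLOWED.get(verb, set()) else None
```

Anything that fails validation is dropped or routed to a human, so free-form model output never drives the robot directly.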

Data Privacy and Anonymization

Always apply anonymization and encryption before storing sensor logs or conversation histories. Mask personally identifiable information (PII), faces, or voices in multimodal data. Implement local data retention policies and secure deletion pipelines, especially for robots operating in private or sensitive environments.
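
For text logs, a first pass at masking can be done with regular expressions and keyed hashing. The patterns below are deliberately simple and will miss many real-world formats; treat them as a sketch, and note that keyed hashing is pseudonymization rather than full anonymization. Faces and voices need dedicated tooling that is not shown here.

```python
import hashlib
import hmac
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def mask_pii(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

def pseudonymize(user_id: str, key: bytes) -> str:
    """Stable pseudonym for a user ID; the key must be stored separately from the logs."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:12]
```

Masking should run before logs are written to disk, so raw identifiers never reach storage in the first place.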

Hierarchical Planning

Adopt hierarchical planning architectures where the LLM generates high-level plans or reasoning chains, and lower-level control policies execute them. This mirrors how humans think abstractly but act through motor routines. The separation of cognition and execution improves explainability and enables safer low-level control under uncertainty.
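
The split between high-level plans and low-level routines can be sketched as a skill table. Here `stub_high_level_planner` stands in for the LLM and returns abstract skills, while `expand` maps each skill to a fixed sequence of primitives. Skill and primitive names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Primitive = Tuple[str, str]  # (primitive name, target)

# Low level: each skill expands to a fixed, separately testable routine.
SKILLS: Dict[str, Callable[[str], List[Primitive]]] = {
    "fetch": lambda obj: [("navigate_to", obj), ("grasp", obj),
                          ("navigate_to", "user"), ("release", obj)],
    "tidy": lambda obj: [("navigate_to", obj), ("grasp", obj),
                         ("navigate_to", "bin"), ("release", obj)],
}

def stub_high_level_planner(instruction: str) -> List[Tuple[str, str]]:
    """Placeholder for the LLM: returns abstract (skill, target) steps."""
    return [("fetch", "mug")]

def expand(plan: List[Tuple[str, str]]) -> List[Primitive]:
    """Translate abstract steps into primitives; reject unknown skills."""
    primitives: List[Primitive] = []
    for skill, target in plan:
        if skill not in SKILLS:
            raise ValueError(f"unknown skill: {skill}")
        primitives.extend(SKILLS[skill](target))
    return primitives
```

Because the model only chooses among known skills, the low-level routines can be verified on their own, and an unknown skill fails loudly instead of producing improvised motion.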

Retrieval-Augmented Grounding

Enable retrieval modules that provide factual grounding, especially in sensitive domains like medicine, law, or education. By fetching verified external knowledge, the robot reduces hallucinations and maintains accuracy in decision-making. Retrieval-augmented generation (RAG) architectures also make robots more data-efficient, as they can reference rather than memorize information.
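
A minimal retrieval step might look like the sketch below. It ranks documents by word overlap with the query, which is a stand-in for the embedding search a production RAG system would use, and it refuses to build a prompt when nothing relevant is found. The documents and function names are invented for the example.

```python
import re
from typing import List, Optional, Set

def tokens(text: str) -> Set[str]:
    """Lowercase alphanumeric word set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Return up to k documents sharing the most words with the query."""
    q = tokens(query)
    scored = [(len(q & tokens(d)), d) for d in docs]
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: -s[0])
    return [d for _, d in ranked[:k]]

def grounded_prompt(query: str, docs: List[str], k: int = 1) -> Optional[str]:
    """Build a prompt restricted to retrieved sources, or None if none match."""
    context = retrieve(query, docs, k)
    if not context:
        return None  # nothing to ground on: decline or escalate
    joined = "\n".join(f"- {c}" for c in context)
    return (f"Answer using only these sources:\n{joined}\n"
            f"Question: {query}\nAnswer:")
```

Returning `None` when retrieval comes back empty matters as much as the retrieval itself: it gives the robot a way to say it does not know rather than letting the model guess.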

From Prototype to Production

These technical principles help robotics teams transition from experimental prototypes to scalable, safety-verified systems. By combining modularity, safety layers, multimodal learning, and factual grounding, developers can ensure that embodied LLMs move beyond impressive demos — toward consistent, trustworthy performance in the real world.

Conclusion

The integration of LLMs into robotics is a visible technological shift. Models like PaLM-E demonstrate that one embodied model can manage perception, planning, and language in real tasks. Progress continues as bias mitigation, efficiency, and safety frameworks evolve.

For developers, the Graphlogic Generative AI & Conversational Platform offers a fast entry point for dialogue and planning integration, while the Graphlogic Text-to-Speech API provides a natural communication layer.

The coming decade will define how robots coexist with humans, not as tools but as collaborators. The challenge for researchers and builders is to ensure that intelligence, ethics, and empathy grow together.

FAQ

Robots with LLMs can clarify instructions, generate plans, learn from dialogue, and adapt. Classic robots only execute static code.

Yes. PaLM-E demonstrates cross-embodiment generalization, reducing the need for separate models per robot.

They output text plans that are converted into natural speech using systems like the Graphlogic Text-to-Speech API. This gives robots an understandable human voice.

Key risks include data leaks, bias, and misinterpretation. Publications such as NIST SP 1270 (bias) and NIST IR 8269 (adversarial machine learning) provide guidance for responsible use.

Prototypes exist already. Widespread adoption could occur in 5–10 years once safety, regulation, and user trust mature.

No. Robots will assist rather than replace. They can handle repetitive tasks like medication reminders or movement assistance, but emotional understanding and complex decision making remain human strengths. Proper design should complement human work, not replace it.

Follow structured guidance such as NIST SP 1270 and use balanced datasets. Apply post-hoc calibration and human review loops before deployment.

The cost depends on hardware and model type. For instance, a project built on an open-source model fine-tuned locally can cost under $50,000, while enterprise-grade multimodal systems with real-time inference may reach several hundred thousand dollars including sensors and compute hardware.