Healthcare AI depends on large and reliable datasets. Patient records, diagnostic scans, and treatment outcomes form the backbone of predictive models. Yet strict regulations like HIPAA in the United States and GDPR in Europe restrict access to real-world data. The result is a growing tension between the need for innovation and the duty to protect individuals.

Synthetic data has become a credible solution. It is generated information that resembles the patterns of actual patient datasets without exposing private details. Researchers at UC Davis Health are already applying this method to emulate patient information. By doing so, they can accelerate clinical studies while maintaining confidentiality.

What Synthetic Data Really Is

Synthetic data is not just fake numbers or random images. It is carefully generated content that mirrors the statistical properties of real datasets. The concept gained traction in 2012 when MIT scientist Kalyan Veeramachaneni created synthetic student records for the edX platform. His goal was to bypass privacy restrictions while keeping the data useful for research.

Modern synthetic data can take many forms. Tabular structures capture patient demographics or financial transactions. Time series mimic heart rate readings or stock flows. More advanced forms generate images and clinical notes. Techniques such as Variational Autoencoders, Generative Adversarial Networks, and diffusion models make this possible. Each method has unique strengths.

Generative AI Techniques That Power Synthetic Data

Generative AI is the engine behind synthetic datasets. Its value lies in understanding data distributions and then creating new samples that look and act like real ones.
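The idea of learning a distribution and then sampling from it can be illustrated with something far simpler than a deep generative model. The sketch below fits a two-column Gaussian to hypothetical "real" rows and draws new rows from it. It is a stand-in for illustration only, not a VAE, GAN, or diffusion model, and the age and blood pressure values are invented for the example.

```python
import math
import random

def fit_gaussian_2d(rows):
    """Estimate means, standard deviations, and correlation of two columns."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in rows) / (n - 1)
    var_y = sum((y - mean_y) ** 2 for _, y in rows) / (n - 1)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in rows) / (n - 1)
    sd_x, sd_y = math.sqrt(var_x), math.sqrt(var_y)
    return {"mean": (mean_x, mean_y), "sd": (sd_x, sd_y), "rho": cov / (sd_x * sd_y)}

def sample_gaussian_2d(params, n, rng):
    """Draw n new rows from the fitted bivariate normal distribution."""
    (mx, my), (sx, sy), rho = params["mean"], params["sd"], params["rho"]
    rows = []
    for _ in range(n):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        x = mx + sx * z1
        # Mixing z1 into y reproduces the fitted correlation between columns.
        y = my + sy * (rho * z1 + math.sqrt(1 - rho ** 2) * z2)
        rows.append((x, y))
    return rows

rng = random.Random(0)
# Hypothetical "real" data: age and systolic blood pressure, positively related.
real = []
for _ in range(2000):
    age = rng.gauss(55, 12)
    real.append((age, 90 + 0.6 * age + rng.gauss(0, 8)))

params = fit_gaussian_2d(real)
synthetic = sample_gaussian_2d(params, 2000, rng)
synthetic_params = fit_gaussian_2d(synthetic)
print(round(params["rho"], 2), round(synthetic_params["rho"], 2))
```

No synthetic row is a copy of a real row, yet the correlation between the two columns carries over. Deep generative models pursue the same goal for distributions that a single Gaussian cannot describe.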

Variational Autoencoders (VAEs). VAEs compress input data into a lower dimensional latent space and then reconstruct it; sampling from that latent space produces new records. This technique works well for tabular data such as patient vitals or insurance claims. It helps retain correlations between variables, which is essential in medicine and finance.

Generative Adversarial Networks (GANs). GANs involve a generator and a discriminator that train together. The generator produces synthetic data while the discriminator tests its realism. Over many cycles, the system learns to create highly authentic data. GANs are especially valuable for complex tasks like generating MRI scans or training autonomous driving systems.

Diffusion Models. These models gradually add random noise to data and then learn to reverse the process step by step, so that new samples can be generated starting from pure noise. They are particularly effective for image synthesis, producing detailed medical scans with subtle variations. Research published in npj Digital Medicine highlights their role in medical imaging.
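The noising half of a diffusion model is simple enough to sketch directly. The example below assumes a linear noise schedule with 1000 steps, a common textbook choice rather than anything specified here, and the pixel values are hypothetical. The learned denoising network that reverses the process is omitted.

```python
import math
import random

def linear_beta_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances that increase linearly across the diffusion steps."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]

def cumulative_alphas(betas):
    """alpha_bar[t] is the share of the original signal variance left after step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    return [signal * value + noise * rng.gauss(0, 1) for value in x0]

rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
pixels = [0.2, 0.8, -0.5, 1.0]  # hypothetical normalized pixel values
slightly_noisy = add_noise(pixels, 10, alpha_bars, rng)
almost_pure_noise = add_noise(pixels, 999, alpha_bars, rng)
```

Early steps barely change the input, while the last step leaves almost no trace of it. Training teaches a network to undo one step at a time, which is what lets generation start from random noise.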

Each technique solves a different problem. VAEs provide structured reliability, GANs push realism, and diffusion models excel at subtle detail.

Real Applications Across Sectors

Healthcare

Synthetic datasets allow hospitals to test algorithms for disease prediction without handling patient files. For example, researchers use them to simulate cancer patient data. This supports model training while respecting confidentiality.

Finance

Banks simulate fraud detection scenarios with synthetic transaction data. This lets them refine credit scoring systems without exposing real customer details.

Urban Planning

City planners create demographic and traffic models with synthetic datasets. These help design safer roads and equitable social programs while avoiding invasive tracking of citizens.

Retail

Retailers use synthetic data to model shopping behavior. This lets them test marketing algorithms in a safe way without compromising customer privacy.

A Lancet Digital Health report confirms that synthetic datasets are shaping multiple industries by filling the gap between privacy and innovation.

Market Growth and Economic Impact

The global synthetic data market is expanding rapidly. It is projected to grow from $0.3 billion today to $2.1 billion by 2028. This growth implies a compound annual rate of about 45.7%.
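The growth rate can be checked with the standard compound annual growth formula. The sketch below assumes a five-year horizon, which is an assumption because the text gives no base year. With the rounded endpoints, the implied rate lands slightly above the quoted figure, which is consistent with rounding of the dollar amounts.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

def project(start_value, rate, years):
    """Value after compounding `rate` for `years` years."""
    return start_value * (1.0 + rate) ** years

# Assumption: a five-year horizon; the text gives no explicit base year.
implied_rate = cagr(0.3, 2.1, 5)
value_at_quoted_rate = project(0.3, 0.457, 5)
print(f"implied CAGR from rounded endpoints: {implied_rate:.1%}")
print(f"$0.3B compounded at 45.7% for 5 years: ${value_at_quoted_rate:.2f}B")
```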

The main drivers are healthcare and finance. Both sectors are under strong regulatory scrutiny. They are also under pressure to innovate using machine learning models. Synthetic data reduces the tension by providing datasets that are both useful and compliant.

At the same time, advances in generative AI continue to raise the quality of synthetic outputs. This makes the technology more appealing to investors, startups, and established corporations. The market is not only growing but also diversifying, as new niches open for providers of privacy-first AI solutions.

Challenges and Risks That Cannot Be Ignored

Synthetic data is promising but not flawless. The most pressing challenges include:

- Data quality dependence. If the original data is flawed, synthetic data will inherit those flaws. Poor quality hospital records, for example, can lead to misleading synthetic patient datasets.

- Privacy leakage. Weak generation methods may allow attackers to reidentify individuals. Studies show that careless use of synthetic datasets can expose sensitive details. Differential privacy offers protection, but it requires careful implementation.

- Bias persistence. If real data is biased, synthetic data can reproduce or even amplify those patterns. In medicine, this can worsen inequities in diagnosis or treatment.

To address these risks, organizations must use high quality real datasets as the foundation, conduct privacy audits, and combine synthetic with real data for balance.
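One simple check that a privacy audit might include is a search for synthetic rows that are near copies of real rows. The sketch below measures the distance from each synthetic record to its closest real record. The records, the 0 to 1 scaling, and the threshold are all hypothetical, and passing this check does not by itself show that a dataset is private.

```python
import math

def nearest_distance(record, others):
    """Euclidean distance from one record to its closest neighbour in `others`."""
    return min(math.dist(record, other) for other in others)

def flag_near_copies(synthetic, real, threshold):
    """Return synthetic rows that sit suspiciously close to some real row."""
    return [row for row in synthetic if nearest_distance(row, real) <= threshold]

# Hypothetical, already normalized records: (age, lab value) scaled to 0..1.
real = [(0.30, 0.55), (0.62, 0.40), (0.81, 0.77)]
synthetic = [(0.31, 0.54), (0.10, 0.90), (0.62, 0.40)]

suspicious = flag_near_copies(synthetic, real, threshold=0.02)
print(suspicious)
```

Here the first synthetic row is almost identical to a real one and the third is an exact copy, so both are flagged for review, while the second is far from every real record.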

Ethical and Social Dimensions

The ethical implications of synthetic data extend beyond compliance. Patients and citizens need to trust that synthetic data cannot harm them. Transparency about how data is generated, validated, and used is critical.

Bias reduction is another key area. Techniques such as oversampling underrepresented groups can help. Continuous fairness audits are necessary to detect and correct skewed outcomes. The World Health Organization has emphasized the importance of responsible AI use in healthcare, including transparent reporting of synthetic methods.
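Random oversampling is the simplest form of the technique mentioned above. The sketch below duplicates randomly chosen rows from smaller groups until every group matches the largest one. The group labels and row layout are hypothetical. Duplication adds no new information, so it is a starting point rather than a complete fix.

```python
import random
from collections import Counter, defaultdict

def oversample_to_balance(rows, group_key, rng):
    """Duplicate random rows from smaller groups until all groups match the largest."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row)
    target = max(len(members) for members in groups.values())
    balanced = []
    for members in groups.values():
        balanced.extend(members)
        # Top up the group with random repeats of its own rows.
        balanced.extend(rng.choice(members) for _ in range(target - len(members)))
    return balanced

rng = random.Random(0)
rows = [{"group": "A", "value": i} for i in range(90)]
rows += [{"group": "B", "value": i} for i in range(10)]

balanced = oversample_to_balance(rows, "group", rng)
counts = Counter(row["group"] for row in balanced)
print(counts)
```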

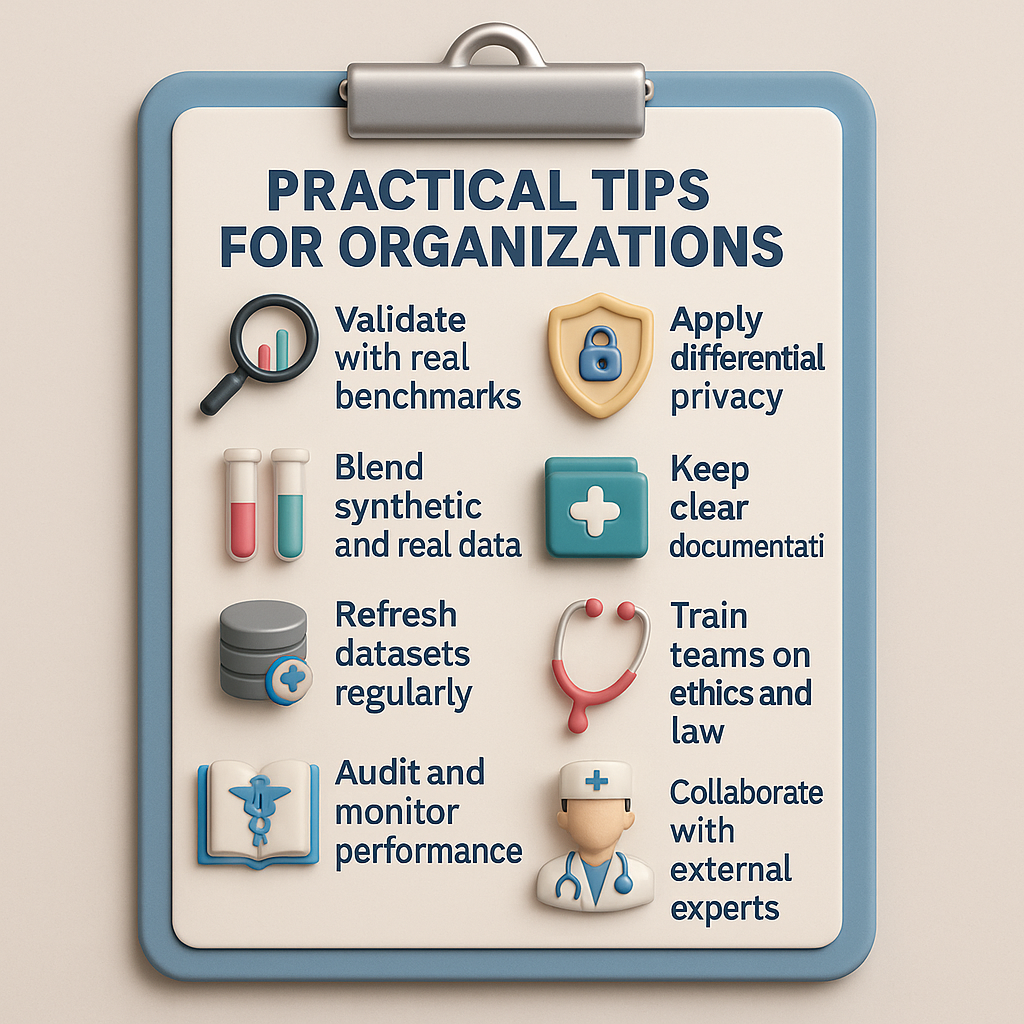

Practical Tips for Organizations

Organizations that want to use synthetic data effectively need more than technical skill. They must build processes that guarantee privacy, maintain compliance, and produce reliable outcomes. The following practices offer a structured roadmap.

Validate Against Real Benchmarks

Every synthetic dataset should be tested against trusted real world benchmarks. Validation ensures that correlations, distributions, and edge cases are preserved. For example, in healthcare, synthetic lab values must still reflect the statistical spread of real patients with diabetes or heart conditions. Without this comparison, models risk drifting into patterns that look plausible but fail in clinical practice. Benchmarks also provide a common language for regulators, researchers, and auditors.
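A distribution check of this kind can be sketched with the two-sample Kolmogorov-Smirnov statistic, which measures the largest gap between two empirical cumulative distributions. The lab values below are invented for illustration and carry no clinical meaning. Any pass or fail threshold is a choice the validating team would have to make.

```python
import bisect
import random

def ks_statistic(sample_a, sample_b):
    """Largest gap between the two empirical cumulative distribution functions."""
    a, b = sorted(sample_a), sorted(sample_b)
    largest_gap = 0.0
    for value in a + b:
        cdf_a = bisect.bisect_right(a, value) / len(a)
        cdf_b = bisect.bisect_right(b, value) / len(b)
        largest_gap = max(largest_gap, abs(cdf_a - cdf_b))
    return largest_gap

rng = random.Random(0)
# Hypothetical lab values; the numbers are illustrative, not clinical.
real = [rng.gauss(7.0, 1.2) for _ in range(1000)]
good_synthetic = [rng.gauss(7.0, 1.2) for _ in range(1000)]
poor_synthetic = [rng.gauss(7.8, 0.6) for _ in range(1000)]

good_gap = ks_statistic(real, good_synthetic)
poor_gap = ks_statistic(real, poor_synthetic)
print(round(good_gap, 3), round(poor_gap, 3))
```

A small statistic means the synthetic column tracks the real one closely, while a large one signals a shifted or narrowed distribution. A single-column test like this says nothing about correlations or edge cases, which need their own checks.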

Apply Differential Privacy

Differential privacy techniques are crucial for limiting reidentification risk. Even high quality synthetic datasets can leak information if attackers cross reference them with outside sources. Injecting controlled statistical noise, calibrated to a chosen privacy budget, bounds how much any single individual can influence the output. Organizations should embed differential privacy during generation, not as an afterthought. This step supports compliance with GDPR and HIPAA but does not guarantee it on its own; legal review of the full pipeline is still required.
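The noise injection idea can be shown with the Laplace mechanism applied to a single count. This is a minimal sketch of differential privacy for one released statistic, not a differentially private data generator, and the patient records and the epsilon value are hypothetical. Production systems should rely on a vetted library, since naive floating point noise has known weaknesses.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) noise, built as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    Adding or removing one record changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)
# Hypothetical patient table: every fourth record is marked diabetic.
patients = [{"age": 30 + (i % 50), "diabetic": i % 4 == 0} for i in range(1000)]
noisy = private_count(patients, lambda p: p["diabetic"], epsilon=0.5, rng=rng)
print(round(noisy, 1))
```

A smaller epsilon means more noise and stronger protection, at the cost of accuracy. The same trade-off applies when noise is added inside a generative model's training process instead of to a single query.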

Combine Synthetic and Real Data in Training

Using only synthetic data can lead to overfitting or unrealistic outcomes. A balanced strategy involves blending synthetic and authentic records. For instance, a fraud detection model might train on millions of synthetic transactions and then fine tune with a smaller set of verified real transactions. This hybrid method strengthens generalization and ensures the model does not misinterpret rare but critical patterns.
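One way to blend the two sources is to merge them into a single training set and give real rows a larger sample weight. The sketch below shows that weighting variant, not the fine tuning workflow described above. The row contents, the weight of 5, and the helper names are hypothetical.

```python
import random

def blend_training_set(synthetic, real, real_weight, rng):
    """Merge both sources, tagging each row with its origin and a sample weight."""
    blended = [(row, "synthetic", 1.0) for row in synthetic]
    blended += [(row, "real", real_weight) for row in real]
    rng.shuffle(blended)
    return blended

def effective_real_share(blended):
    """Fraction of total sample weight contributed by real rows."""
    total = sum(weight for _, _, weight in blended)
    real = sum(weight for _, origin, weight in blended if origin == "real")
    return real / total

rng = random.Random(0)
synthetic_rows = [{"amount": i} for i in range(10000)]  # hypothetical transactions
real_rows = [{"amount": i} for i in range(500)]

blended = blend_training_set(synthetic_rows, real_rows, real_weight=5.0, rng=rng)
share = effective_real_share(blended)
print(f"real data carries {share:.0%} of the training weight")
```

Keeping the origin tag on every row also makes it possible to evaluate the model on real records only, which is the fairer test of how it will behave in practice.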

Maintain Detailed Documentation

Regulatory agencies expect transparency. Documentation of dataset creation must include source data quality, generation methods, privacy safeguards, and validation steps. This record not only satisfies auditors but also builds internal accountability. Teams should treat documentation as part of the development process, not as a compliance chore. Over time, such records create institutional knowledge that improves the design of future datasets.
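A documentation record can be kept as structured data alongside the dataset so that it is versioned and machine readable. The sketch below covers the four areas listed above. The class name, field names, and example values are hypothetical and do not follow any particular regulatory template.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class SyntheticDatasetRecord:
    """Hypothetical documentation record; field names are illustrative."""
    name: str
    source_data_quality: str
    generation_method: str
    privacy_safeguards: list = field(default_factory=list)
    validation_steps: list = field(default_factory=list)

record = SyntheticDatasetRecord(
    name="example-synthetic-labs-v1",
    source_data_quality="Deduplicated; rows with missing lab values removed",
    generation_method="Tabular VAE",
    privacy_safeguards=["differential privacy during training", "near-copy audit"],
    validation_steps=["per-column distribution tests", "correlation comparison"],
)

# Serialize so the record can be stored and reviewed next to the dataset.
serialized = json.dumps(asdict(record), indent=2)
print(serialized)
```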

Update Regularly to Reflect Reality

Real world patterns evolve. Disease prevalence shifts, financial fraud tactics change, and urban traffic adapts to new technologies. Synthetic datasets must evolve too. Static synthetic data can quickly become outdated and mislead models. Organizations should schedule regular refresh cycles, ideally aligning them with changes in the real world systems they simulate. Continuous updates make synthetic datasets a living tool rather than a frozen snapshot.

Train Teams Beyond Technology

Technical accuracy is not enough. Employees must also understand the ethical and legal context of synthetic data. Training programs should cover responsible AI use, fairness metrics, and the social impact of misusing data. This helps organizations prevent unintentional harm and strengthens public trust.

Audit and Monitor Performance

Validation before deployment is important, but monitoring after deployment is equally critical. Synthetic data may perform well in lab conditions but behave differently in real world applications. Ongoing audits catch emerging biases, shifts in accuracy, and potential privacy risks. Organizations should set up continuous monitoring pipelines that alert teams when performance indicators drift.
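A drift alert of the kind described can be as simple as comparing a rolling average of a performance indicator with its baseline. The sketch below is one minimal way to do that. The accuracy readings, window size, and tolerance are hypothetical values chosen for illustration.

```python
from statistics import mean

def drift_alerts(baseline, recent_values, window, tolerance):
    """Return (index, rolling_mean) pairs where the rolling mean strays from the baseline."""
    alerts = []
    for end in range(window, len(recent_values) + 1):
        rolling = mean(recent_values[end - window:end])
        if abs(rolling - baseline) > tolerance:
            alerts.append((end - 1, rolling))
    return alerts

# Hypothetical daily accuracy readings for a deployed model.
daily_accuracy = [0.91, 0.92, 0.90, 0.91, 0.89, 0.86, 0.84, 0.83]
alerts = drift_alerts(baseline=0.91, recent_values=daily_accuracy, window=3, tolerance=0.03)
for day, rolling in alerts:
    print(f"day {day}: rolling accuracy {rolling:.3f} is outside tolerance")
```

Averaging over a window keeps a single bad day from triggering an alert, while a sustained decline still does. The same pattern applies to fairness metrics or privacy indicators, each with its own baseline and tolerance.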

Collaborate With External Experts

Partnerships with universities, regulators, and independent auditors bring external oversight. Collaboration helps organizations validate claims and discover blind spots. For instance, peer reviewed studies on synthetic healthcare data, such as those in The Lancet Digital Health, provide valuable external benchmarks. External validation builds credibility and reassures stakeholders that synthetic data is safe and effective.

Trends and Forecasts

Synthetic data is not just a stopgap measure. It is evolving into a foundation of responsible AI. Several interconnected trends are shaping this shift.

Integration With Emerging AI Platforms

Platforms such as the Graphlogic Generative AI & Conversational Platform are already experimenting with synthetic datasets. The next stage will see conversational systems trained on privacy safe data for clinical advice, insurance queries, and financial interactions. This lowers risk for users while maintaining natural dialogue quality.

Voice and Medical Communication Tools

Healthcare professionals increasingly rely on real time communication systems. Voice solutions like the Graphlogic Text to Speech API can turn synthetic insights into spoken medical dialogue. This supports privacy conscious decision making during consultations and remote monitoring. The trend points toward a fusion of synthetic data and speech technology in electronic health records and telemedicine.

Cross Sector Expansion

While healthcare and finance remain early leaders, education and public services are following close behind. Universities test adaptive learning systems on synthetic student data to protect minors. Government agencies use artificial demographic datasets to design policies without exposing census details. The versatility of synthetic methods ensures that adoption will reach transportation, retail, and even defense.

Specialized Policy Frameworks

Governments are moving from general privacy laws to synthetic specific regulation. Draft proposals in the United States and Europe suggest new standards for validation, disclosure, and reidentification testing. Clearer policies will reduce uncertainty, give confidence to smaller organizations, and help patients and customers trust synthetic approaches.

Democratization of AI Research

Synthetic datasets are lowering entry barriers for startups and small laboratories. Teams that could not afford to purchase or access real world data can now build models with comparable quality. This is expected to accelerate innovation outside the traditional hubs of large tech firms and elite universities.

Quality and Realism Advances

Generative models are closing the gap between synthetic and authentic datasets. Diffusion techniques in particular show progress in radiology and pathology images, producing scans with subtle detail. The trajectory suggests that by 2030 synthetic images may be indistinguishable from real ones in most diagnostic contexts, provided validation safeguards remain in place.

Industry Consolidation and Market Growth

The market for synthetic data is projected to expand from $0.3 billion today to $2.1 billion in 2028. This growth will not only attract startups but also trigger consolidation as larger players seek to acquire specialized synthetic providers. Investors see this as a high growth sector closely tied to privacy first AI.

Looking Forward: Privacy and Innovation in Balance

Synthetic data has moved beyond concept. Hospitals, banks, and public institutions are showing that innovation can thrive without exposing sensitive information. The method is not flawless. It demands audits, quality checks, and ongoing transparency. Yet when managed responsibly it opens access to reliable datasets while protecting individuals.

Healthcare Impact

The next stage of healthcare AI will depend on combining synthetic with real datasets. Models trained only on small or biased samples risk dangerous errors. Synthetic data helps fill gaps, improve fairness, and strengthen predictions for diverse populations. Hospitals that adopt these methods responsibly will not only maintain compliance but also build trust with patients.

Finance and Urban Planning

In finance, synthetic datasets enable safer testing of fraud detection systems and credit scoring models. Regulators remain strict, making real transaction sharing unlikely. Artificial but realistic data provides the scale that algorithms need.

Urban planners now use privacy safe demographic and traffic models to design safer cities. This avoids intrusive tracking while still giving officials the information required to reduce accidents and manage growth.

Strategic Lessons

- Synthetic data will never fully replace real information, but it can multiply its value.

- Combining synthetic and real datasets is the best way to prevent bias and improve robustness.

- Continuous audits and disclosure are essential to maintain regulatory trust and public confidence.

- Transparent reporting of limitations prevents misuse and ensures ethical adoption.

Final Perspective

Synthetic data is more than a technical fix. It signals a cultural change in how societies treat information. Privacy is shifting from being seen as an obstacle to becoming a design principle. The organizations that integrate synthetic methods with responsibility will set the pace. They will be first to deliver privacy conscious AI while remaining innovative and fair.

FAQ

What is synthetic data?

It is artificially generated data that looks and acts like real datasets but does not reveal actual personal information.

Can synthetic data replace real data?

No. It can supplement and expand research but must still be validated against real data to ensure accuracy.

Is synthetic data always private?

Not always. If generated poorly, it can expose private details. This is why methods like differential privacy and audits are necessary.

How does synthetic data help healthcare?

It allows hospitals and researchers to test models for disease prediction and treatment optimization without using actual patient files.

How fast is the synthetic data market growing?

It is projected to grow from $0.3 billion to $2.1 billion by 2028, which represents a 45.7% CAGR.