Sound is now central to digital life. It drives communication, learning, entertainment, and healthcare. From podcasts to telemedicine, audio quality shapes trust and usability. Developers need tools that are precise and adaptable, and Python has become the top choice thanks to its libraries and its integration with artificial intelligence.

In healthcare, the demands are higher. Accurate transcription supports clinical documentation. High fidelity recordings and preprocessing improve diagnostic models. Researchers are exploring voice markers as possible indicators of stress and depression, as shown in early studies in Frontiers in Psychology.

By 2025 Python is no longer just a convenient option. It is the backbone of experimental research and production systems across healthcare, education, media, and customer service.

Understanding Audio Manipulation

Audio manipulation means the modification of recorded sound signals in order to produce specific results. This can involve trimming, resampling, changing loudness levels, converting between formats, or combining tracks. The practical use cases are endless. A podcast editor may cut out unwanted background noise. A hospital may use automated resampling to standardize recorded voice notes to 16 kHz before analysis. A music app may merge clips with different frame rates.

Python libraries take over the heavy lifting and allow developers to focus on creative or research goals instead of building raw audio pipelines from scratch.

Why Python Works for Audio

The Python ecosystem is both wide and stable, which makes it uniquely suited for audio work. Libraries are actively maintained, their documentation is improving year by year, and a global developer community ensures quick support. This combination lowers the barrier for new projects while also giving professionals reliable tools for production.

Developers appreciate Python because scripts for even complex sound operations remain short and readable. An audio manipulation task that could take dozens of lines in C++ often takes only a few lines in Python. This matters in research and healthcare, where teams want to iterate fast without sacrificing precision.

Python also connects seamlessly with artificial intelligence frameworks such as TensorFlow and PyTorch. These frameworks are often used in speech recognition, emotion detection, and medical analytics. For example, a hospital could use Python libraries to record patient voice samples, preprocess them with pydub, and then feed them into a PyTorch model trained to detect markers of Parkinson’s disease. The same pipeline would be far more complex to build in lower level languages.

Another practical strength is format support. Audio exists in many container types and compression schemes. Hospitals may store uncompressed WAV for fidelity. Media companies prefer MP3 for distribution. Apple devices often use AIFF, while AAC is common on streaming platforms. With the help of FFmpeg, Python libraries can handle all of these. This ensures that teams working in healthcare or media can move data across systems without friction.

Python is also attractive because it integrates easily into larger workflows. An engineer can write one script for sound preprocessing, another for machine learning inference, and a third for visualization — all in the same language. This continuity reduces errors and improves productivity.

Recording Audio: Two Main Tools

Recording is the foundation of any audio project. Without clean capture, later steps such as transcription or analysis cannot succeed. Python provides two dominant options: PyAudio and Sounddevice.

PyAudio is a wrapper around PortAudio that allows detailed control over streams. It is the library of choice when precision is critical. Researchers working on acoustic analysis rely on its ability to configure sample rates, buffer sizes, and audio channels with precision. The tradeoff is installation complexity. On macOS, developers often install PortAudio with Homebrew. On Windows, PyAudio requires Microsoft Visual C++ build tools. On Linux, package managers help but still demand careful setup. Once installed correctly, PyAudio delivers low latency capture that is difficult to match.

Sounddevice is designed for simplicity. Its API is minimal and intuitive, which makes it easy for newcomers. A developer can capture microphone input in just a few lines of code. Startups building prototypes of voice controlled apps often prefer it because it reduces time to market. The limitation is that Sounddevice lacks advanced control over stream parameters.

In practice, many teams adopt a hybrid path. They begin with Sounddevice during the prototyping phase because it allows fast iteration. As the project matures, they migrate to PyAudio to benefit from its granular control and reliability in production. This pattern is common in both academic labs and commercial audio applications.

Playing Audio with Python Tools

Playback is just as important as recording, particularly in applications like telehealth, music production, or gaming. Here again, PyAudio and Sounddevice are the most common choices.

With PyAudio, developers gain fine grained control over playback streams. This makes it ideal for real time applications such as voice communication platforms or live audio effects engines. PyAudio allows developers to stream chunks of audio sequentially, control latency, and support custom audio pipelines. Once configured correctly, it provides performance comparable to lower level languages.

Sounddevice, especially when paired with the soundfile library, simplifies playback of audio files such as WAV or FLAC. The API is high level and concise, which makes it a strong choice for educational settings and rapid prototyping. For example, a coding bootcamp teaching audio processing often starts with Sounddevice because students can implement playback in only a few lines of code.

Both PyAudio and Sounddevice support cross platform use on Windows, macOS, and Linux. This universality is essential because audio projects often need to run on multiple systems.

Editing and Processing with pydub

Editing is the stage where most developers and audio engineers spend the most time, because raw recordings are rarely usable without adjustments. Python offers several libraries for this, but pydub has become the go-to option due to its balance of simplicity and flexibility.

Pydub provides high level functions that handle the most common editing needs: trimming, slicing, adjusting loudness, resampling, and combining or overlaying audio files. The design philosophy is to make audio editing accessible to developers who may not have a deep background in signal processing. Instead of writing complex algorithms, a developer can use short, readable commands.

For example, trimming is as simple as specifying a start and end time. This can be applied to cut silence from the beginning of a medical interview or to extract a clean segment for a podcast highlight. Resampling is another frequent task, especially in healthcare. Many machine learning models require a standard sample rate such as 16 kHz, and with pydub this can be achieved with a single method call while maintaining the pitch and natural quality of the voice.

Volume adjustment is equally straightforward. Pydub represents changes in decibels, which makes it easy to normalize different recordings. A podcast editor might increase one speaker by 3 dB to match the other voices. A clinical researcher might reduce loud background noises in patient audio files before analysis. Combining audio clips is also intuitive. Developers can either concatenate two recordings or overlay them to mix tracks, for example placing background music under a narration.

The syntax is one of pydub’s greatest strengths. Tasks that would require dozens of lines in C++ or Java can often be completed in fewer than ten lines of Python code. This efficiency speeds up experimentation and prototyping, which is why the library is popular in both academic projects and commercial audio products.

There is one important dependency. Pydub relies on FFmpeg to support a wide range of audio formats. FFmpeg is an open source multimedia framework used across the media industry, but it must be installed separately and added to the system path. While this adds an extra step to setup, it is also what gives pydub its flexibility. Once configured, the workflow becomes smooth and highly reliable.

Real World Examples of Pydub in Action

- Podcast production: Editors cut specific segments (e.g., minute 1 to 2:30), normalize all voice tracks for consistent loudness, overlay intro/outro music, and export the final product as MP3 for distribution on streaming platforms.

- Healthcare research: Researchers remove long silences from patient interviews, standardize recordings at 16 kHz sample rate, and prepare the cleaned audio files for machine learning models that detect speech or health patterns.

- Education: Teachers and edtech developers build language learning apps by combining words and phrases into exercises, adjusting timing and volume to create smooth and interactive lessons.

- Customer service analytics: Contact centers preprocess thousands of recorded calls to remove background noise, convert files into unified formats, and run sentiment analysis to identify customer satisfaction trends.

File Formats and Conversion

Audio comes in many shapes, and format handling is a constant challenge for developers. A WAV file is uncompressed and preserves full quality, making it ideal for studio work or archiving clinical voice data. An MP3 is compressed, reducing size and making it efficient for online streaming. AIFF is common in Apple ecosystems, especially in professional music workflows. AAC is widely used by streaming services because it provides better quality at similar bitrates compared to MP3.

Pydub, powered by FFmpeg, supports all of these and more. Converting between formats is straightforward. A musician may record a track in WAV at 48 kHz for studio quality, then export it as MP3 for sharing on social media. A hospital may store patient recordings in WAV for maximum fidelity, but later convert them to AAC when integrating them with mobile telehealth apps.

This ability to move between formats without losing critical information is a major reason Python libraries are so practical in production. Conversion workflows also allow developers to prepare audio for machine learning pipelines. A dataset may contain files in multiple formats, and with pydub a script can unify them into a single format in minutes.

In many industries, this step is critical. For example, in legal transcription, consistency of format ensures that downstream AI systems do not misinterpret recordings. In healthcare, standardizing files helps maintain compliance with data regulations by ensuring that files are stored in reliable, high quality formats.

A small but important tip: when exporting files, always confirm that metadata such as sample rate and channels are preserved. In some workflows, missing metadata can lead to errors in downstream applications. Developers often include a verification step to check the final file properties before deploying large scale conversions.

Transcription with SDKs

Transcription has become one of the fastest growing areas in audio technology. The demand is clear. Manual transcription is slow, costly, and prone to human error, especially when working with large volumes of audio. Automated transcription addresses this by improving accessibility, supporting legal compliance, and increasing efficiency across industries.

Deepgram SDK

The Deepgram SDK is a strong player in this space. It provides accurate speech to text capabilities with support for asynchronous processing. This feature is important because developers can upload multiple files at once and receive transcripts without blocking the rest of their application. For example, a customer support center can transcribe 1 000 calls per day and then use natural language processing to identify common issues or measure customer sentiment.

Deepgram also supports multiple languages and accents, which makes it suitable for global businesses. In research, asynchronous transcription has proven valuable for analyzing large collections of recorded interviews or medical voice notes. The JSON output includes confidence scores, allowing teams to evaluate the reliability of the transcript automatically.

Graphlogic Speech-to-Text API

Another robust option is the Graphlogic Speech-to-Text API. It is designed to integrate easily with applications in healthcare, customer service, and media. The service offers secure handling of audio data, which is particularly important in clinical settings where patient confidentiality is non-negotiable.

For teams that need not only text output but also natural sounding voice generation, the Graphlogic Text-to-Speech API completes the loop. This enables applications where the system listens to spoken input, processes it, and responds with lifelike synthesized speech. For instance, a telemedicine assistant could transcribe a patient’s spoken description of symptoms and then provide instructions or clarifications using text to speech.

Healthcare Applications

In healthcare, transcription has a direct impact on productivity and accuracy. Doctors can dictate notes during or after a consultation, and those notes can be automatically stored in electronic health records. This saves time and reduces administrative burden. Researchers benefit as well, since patient interviews and clinical trial data can be transcribed and analyzed at scale.

Accuracy and security are the two critical factors. Medical speech often includes technical terms or drug names that require high fidelity recognition. Specialized APIs are trained on domain specific vocabulary, which increases transcription quality. Secure data handling ensures compliance with HIPAA in the United States and GDPR in Europe.

Challenges Developers Face

Despite the maturity of Python audio tools, developers face recurring issues.

| Challenge | Details | Impact |

| Installation hurdles | PyAudio depends on PortAudio; setup differs across OS (Homebrew on macOS, MS Visual C++ on Windows, package managers on Linux). | Errors during installation can prevent PyAudio from running at all. |

| Codec errors | Many formats require FFmpeg; it must be installed and added to the system path. | Without FFmpeg, Pydub only supports a limited range of file types. |

| Sample rates & channels | Music typically requires 44.1 kHz stereo; speech recognition works best at 16 kHz mono. | Wrong settings cause poor fidelity or break ML model compatibility. |

Practical Advice

The most effective approach is to address technical challenges early in development to avoid costly refactoring later. A disciplined setup process ensures smoother workflows across teams and platforms.

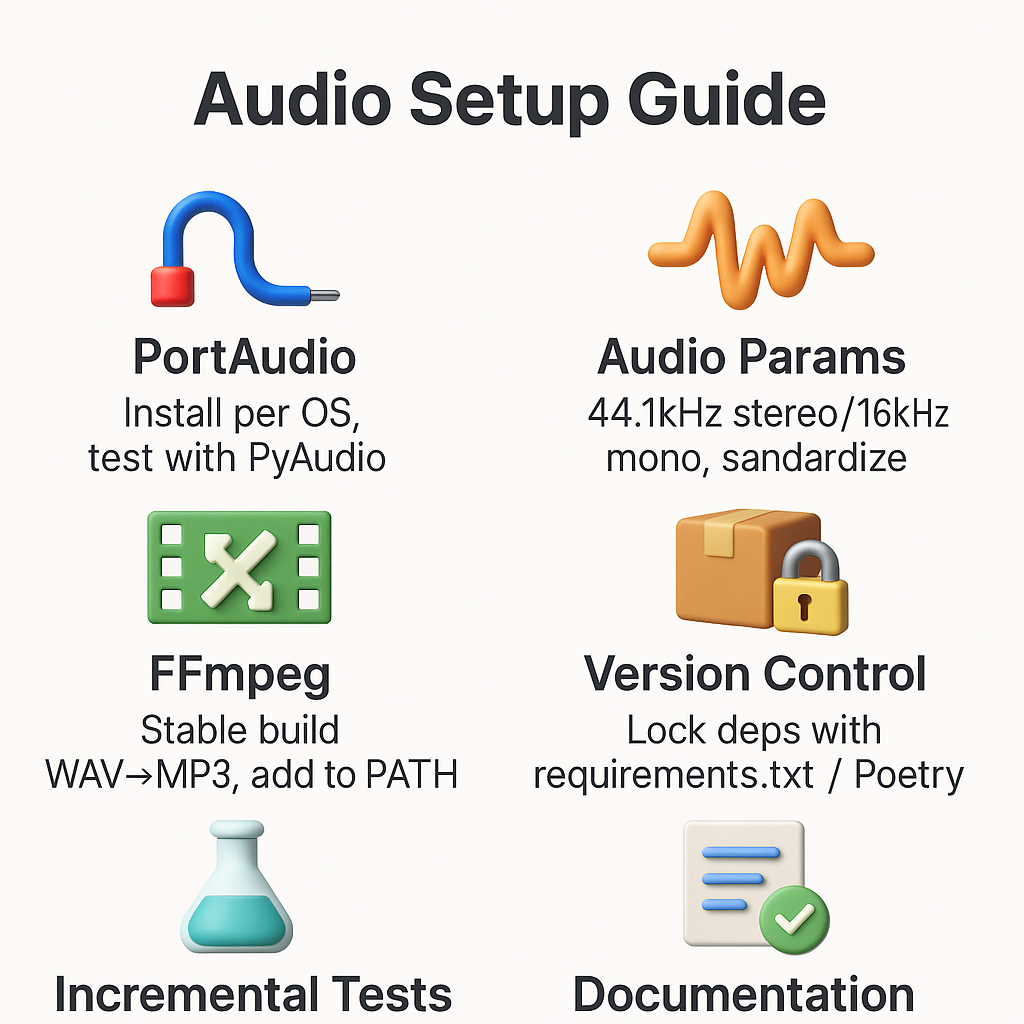

- Install PortAudio carefully

- Follow OS-specific instructions (Homebrew on macOS, MSVC Build Tools on Windows, apt/yum on Linux).

- Run a quick smoke test with PyAudio immediately to confirm that input/output streams work.

- Set up FFmpeg correctly

- Install the latest stable build.

- Verify functionality with small conversion tasks (e.g., WAV → MP3).

- Add it to the system path so libraries like Pydub can detect it automatically.

- Define audio parameters explicitly

- Document sample rates (e.g., 44.1 kHz stereo for music, 16 kHz mono for voice).

- Standardize these values across teams to ensure reproducibility in research and production.

- Use version control for dependencies

- Lock down library versions with requirements.txt or Poetry.

- This prevents unexpected behavior when libraries update.

- Test pipelines incrementally

- Start with short files and simple operations before scaling.

- Validate each step (recording → processing → conversion → transcription) separately.

- Document configurations clearly

- Share setup guides across the team.

- Note known pitfalls (e.g., codec support, latency tuning).

By treating installation and configuration as part of the development workflow rather than afterthoughts, teams reduce debugging time and ensure long-term stability.

Comparison of Main Libraries

The Python ecosystem offers several powerful audio libraries, each suited for specific stages of the workflow.

| Library / API | Primary Use Cases | Strengths | Limitations |

| PyAudio | Recording and playback with fine-grained control | Low-latency streaming, precise configuration (sample rate, channels, buffers). Ideal for real-time systems and acoustic research. | Complex installation (depends on PortAudio), steeper learning curve. |

| Sounddevice | Simple recording and playback | Lightweight, cross-platform, intuitive API. Perfect for prototypes, classrooms, and quick demos. | Limited advanced controls, less suitable for production-heavy tasks. |

| Pydub | Editing and audio processing | Trimming, resampling, volume adjustment, overlaying, format conversion. Syntax is concise and beginner-friendly. | Requires FFmpeg; not designed for ultra-low-latency applications. |

| Deepgram SDK | Large-scale transcription | High accuracy, multilingual support, asynchronous bulk processing. Great for customer service analytics and research. | Cloud-based only, requires API key and connectivity. |

| Graphlogic Speech-to-Text API | Healthcare, customer service, secure transcription | Domain-specific accuracy, HIPAA/GDPR compliance, seamless integration with enterprise platforms. | Internet access required, usage billed per request. |

| Graphlogic Text-to-Speech API | Natural voice synthesis for apps, assistants, IVR | Scalable, lifelike synthetic voices, multilingual. Supports accessibility, education, and customer service. | Cloud dependency, API setup needed. |

Takeaway

Together, these tools cover almost every audio manipulation scenario:

- PyAudio and Sounddevice for recording/playback.

- Pydub for editing, cleaning, and preprocessing.

- Deepgram and Graphlogic APIs for transcription and TTS at scale.

The right choice depends on project goals, team expertise, and production requirements. Many teams use these libraries in combination, starting with Sounddevice for prototyping, migrating to PyAudio in production, preprocessing with Pydub, and then applying transcription or TTS APIs for downstream applications.

Trends and Predictions

Looking forward, several trends are shaping the future of Python audio processing.

AI powered noise reduction is becoming standard. Algorithms can now remove background noise in real time while preserving speech clarity. In telehealth, where accurate communication is critical, this can improve diagnostic reliability.

Real time processing is expanding. Applications such as virtual concerts, live learning platforms, and interactive assistants require ultra low latency streaming. Developers will increasingly expect Python libraries to support sub second performance.

AI driven transcription is approaching near human accuracy. This will transform workflows in legal services, media production, and medical documentation. Meeting minutes, news interviews, and patient notes will be transcribed with minimal need for human correction.

Beyond these, further integration with generative AI platforms is expected. The Graphlogic Generative AI & Conversational Platform is one example of how audio APIs are combining with conversational intelligence. Developers will be able to create applications that not only transcribe speech but also understand context and respond naturally in real time.

Practical Tips for Developers

Start small before scaling

Test recording and playback with short audio files before running large pipelines. This makes it easier to catch configuration errors early and avoid wasted processing time.

Normalize audio consistently

Always normalize volume levels when combining multiple clips. This prevents distortion, balances voices, and ensures a smoother listening experience.

Use uncompressed formats for source data

In healthcare and research, always capture and store audio in uncompressed WAV format first. Compressed formats such as MP3 or AAC should only be used for distribution or non-critical workflows.

Standardize technical parameters

Document and enforce consistent sample rates (e.g., 16 kHz for speech, 44.1 kHz for music) and channel configurations (mono or stereo). This guarantees reproducibility across platforms and teams.

Keep FFmpeg current

Regularly update FFmpeg to take advantage of the latest codec support, security patches, and performance improvements.

Conclusion

Python audio tools have matured into a complete ecosystem. PyAudio, Sounddevice, and pydub cover recording, playback, and editing. Deepgram and Graphlogic APIs extend functionality to transcription and text to speech.

Audio manipulation is now central in healthcare, education, customer service, and media. The tools are not without challenges, but installation and format hurdles can be solved with careful preparation.

Looking forward, AI powered improvements in noise reduction, transcription, and real time processing will further expand possibilities. For developers, the key is to start small, choose the right libraries for each stage, and remain aware of new capabilities as they emerge.

The future of sound in digital systems is not only about clearer recordings. It is about intelligent interaction, accessibility, and efficiency. Python tools and APIs are the foundation of that future.

FAQ

For beginners, Sounddevice is the easiest starting point. It requires minimal setup and its API is straightforward. Developers can record or play sound with only a few lines of code.

PyAudio depends on PortAudio, which must be compiled for each operating system. This makes installation different on macOS, Windows, and Linux. Once installed, PyAudio is stable and powerful.

FFmpeg is essential for handling multiple formats. Without it, many files such as AAC or AIFF cannot be processed. Pydub requires FFmpeg to work with compressed formats.

Most accurate transcription services such as Deepgram or Graphlogic require internet access because they rely on cloud-based neural networks. Offline transcription is possible with libraries like Vosk, but accuracy is lower compared to cloud APIs.

Voice data typically uses 16 kHz mono, which is sufficient for speech recognition and reduces file size. Music should use 44.1 kHz stereo to preserve quality.