Voice AI is no longer just a cool experiment. It’s part of how modern healthcare, customer service, and finance operate today. But there’s a big gap between flashy demos and systems that work under pressure during a triage call, a pharmacy refill, or a late-night support request.

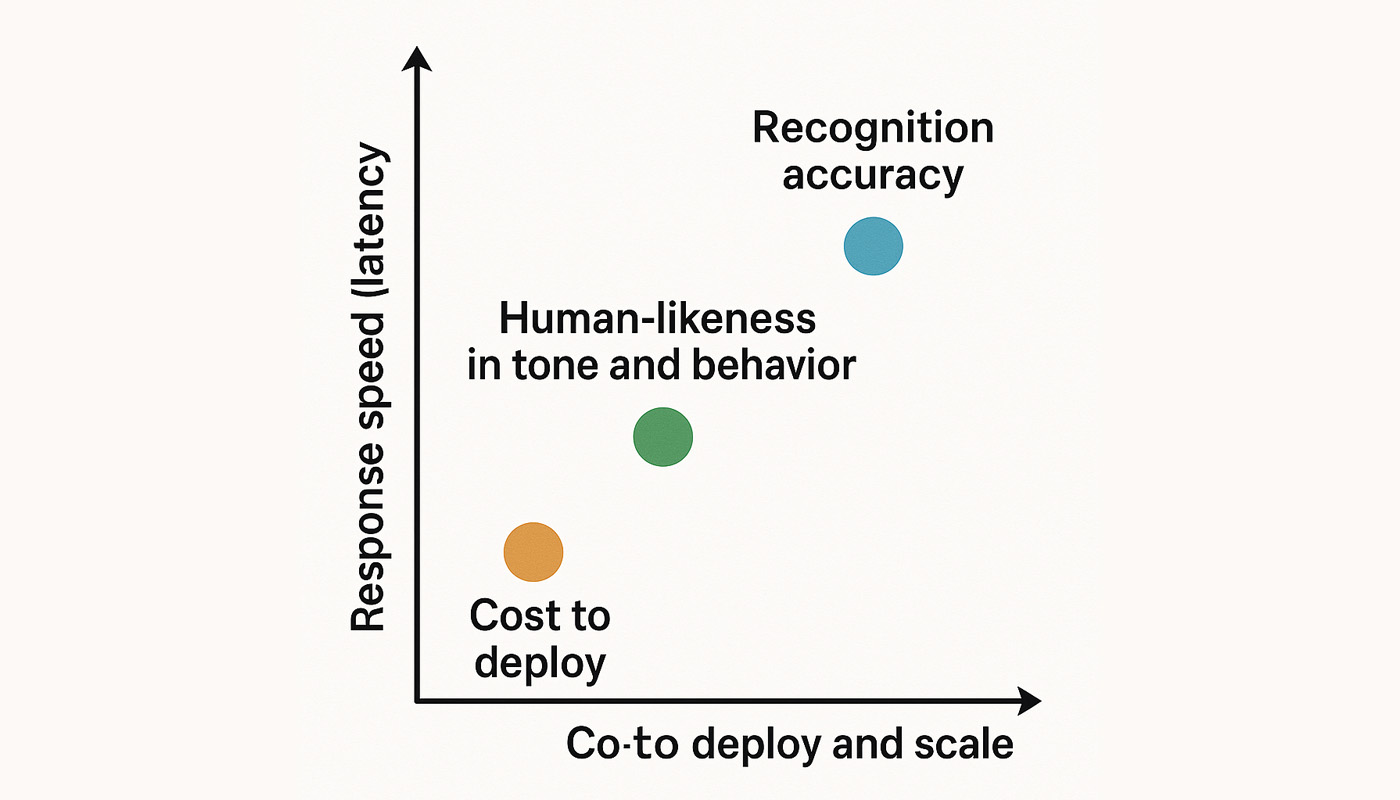

So what separates real-world-ready voice agents from fragile prototypes? It comes down to four things:

- Response speed (latency)

- Recognition accuracy

- Cost to deploy and scale

- Human-likeness in tone and behavior

This article breaks down each of these, not as abstract ideals but as things that must be designed and balanced in every deployment. We’ll also highlight how platforms like Graphlogic.ai are tackling these constraints with targeted tools for voice-based AI.

Latency: Why Speed Is the Foundation

Latency in voice AI is the time between receiving a user’s input and beginning a response. Even small delays, measured in milliseconds, can shape how natural or frustrating the interaction feels. In clinical or support environments, this can be the deciding factor in whether someone stays engaged or hangs up.
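Latency is easiest to reason about when you measure it per turn and look at the slow tail as well as the average. The occasional long pause is what callers remember. The sketch below assumes you have already logged, for each test call, the milliseconds from the end of the caller’s speech to the first audio of the reply. The sample values are made up for illustration.

```python
import math


def percentile(samples_ms: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank into the sorted samples
    return ordered[rank - 1]


# Hypothetical end-of-speech to first-audio timings (ms) from ten test calls.
samples = [180, 190, 200, 210, 205, 195, 220, 230, 650, 240]

print("p50:", percentile(samples, 50))  # p50: 205
print("p95:", percentile(samples, 95))  # p95: 650
```

Here the median looks comfortable, but one call in ten waited 650 ms. Nearest-rank is only one percentile definition. Python’s standard `statistics.quantiles` offers interpolated alternatives.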

The impact is well-documented. A study in JAMA Network Open found that patient satisfaction during telehealth consultations drops significantly when systems introduce even slight delays. In time-sensitive scenarios, like post-surgical monitoring or emergency triage, delays over 250 milliseconds can hinder outcomes. NIH-funded research confirms this in the context of real-time medical decision-making.

To deliver real-time responses, voice AI needs to be optimized at every level.

Smaller model architectures reduce processing time and computational load. Models like Mistral 7B are gaining popularity because they balance performance with efficiency.

Reducing the number of tokens generated during output speeds up inference. This can be achieved through prompt design and by limiting unnecessary verbosity.
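A rough model shows how the token count affects speed. After a fixed delay to the first token, each additional output token costs about one decoding step. The sketch below assumes that simple linear model and uses hypothetical timings: 80 ms to the first token, then 20 ms per token. Real figures depend on the model, the hardware, and batching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecodeProfile:
    """Simplified timing model for one text-generation deployment."""
    time_to_first_token_ms: float  # prompt processing plus the first output token
    per_token_ms: float            # average time for each additional output token


def estimate_generation_ms(profile: DecodeProfile, output_tokens: int) -> float:
    """Estimated time to finish generating a reply of the given length."""
    if output_tokens <= 0:
        return 0.0
    return profile.time_to_first_token_ms + (output_tokens - 1) * profile.per_token_ms


def max_tokens_within(profile: DecodeProfile, budget_ms: float) -> int:
    """Longest reply whose estimated generation time still fits the budget."""
    if budget_ms < profile.time_to_first_token_ms:
        return 0
    return 1 + int((budget_ms - profile.time_to_first_token_ms) // profile.per_token_ms)


# Hypothetical timings for illustration; measure your own model and hardware.
profile = DecodeProfile(time_to_first_token_ms=80.0, per_token_ms=20.0)
print(estimate_generation_ms(profile, 40))   # 860.0
print(estimate_generation_ms(profile, 120))  # 2460.0
print(max_tokens_within(profile, 500.0))     # 22
```

Under these assumed numbers, a 40-token answer finishes in under a second, while a 120-token answer takes about 2.5 seconds. Streaming the first sentence to text-to-speech hides some of this delay, but shorter answers still finish sooner.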

Running models on edge devices or local servers removes the round-trip delay of sending data to the cloud, resulting in much faster response times.
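Whether moving to the edge pays off depends on the whole pipeline, because the network round trip is only one stage. The sketch below adds up hypothetical per-stage timings for a cloud setup and an edge setup. It assumes the stages run one after another and that the edge models run slightly slower on smaller hardware. The numbers are placeholders, not benchmarks.

```python
from typing import Mapping

# Hypothetical per-turn stage timings in milliseconds: placeholders, not benchmarks.
CLOUD_PIPELINE = {
    "speech_to_text": 120.0,
    "network_round_trip": 90.0,  # device to cloud region and back
    "llm_first_token": 150.0,
    "tts_first_audio": 60.0,
}
EDGE_PIPELINE = {
    "speech_to_text": 140.0,     # assumed: smaller local model, slightly slower
    "network_round_trip": 5.0,   # local server on the same network
    "llm_first_token": 180.0,    # assumed: less powerful local hardware
    "tts_first_audio": 60.0,
}


def time_to_first_audio(stages: Mapping[str, float]) -> float:
    """In this sketch the stages run one after another, so their latencies simply add up."""
    return sum(stages.values())


def report(name: str, stages: Mapping[str, float], budget_ms: float) -> str:
    total = time_to_first_audio(stages)
    slowest = max(stages, key=lambda stage: stages[stage])
    status = "within" if total <= budget_ms else "over"
    return f"{name}: {total:.0f} ms ({status} the {budget_ms:.0f} ms budget), slowest stage: {slowest}"


for label, pipeline in (("cloud", CLOUD_PIPELINE), ("edge", EDGE_PIPELINE)):
    print(report(label, pipeline, budget_ms=400.0))
# cloud: 420 ms (over the 400 ms budget), slowest stage: llm_first_token
# edge: 385 ms (within the 400 ms budget), slowest stage: llm_first_token
```

In this made-up example, the edge setup comes out ahead only because the round trip it saves outweighs its slower models. With a faster network or weaker local hardware, the result could flip, so it is worth measuring each stage on your own infrastructure.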

For frequent requests such as “What are the side effects of this medication?” or “Schedule my next appointment,” serving cached, precomputed responses skips model inference altogether, so the reply can begin almost immediately.
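A minimal version of this pattern is a lookup table of reviewed answers, keyed on a normalized transcript, with the model as a fallback. The sketch below only matches text exactly, after lowercasing and stripping punctuation. `fake_model` is a hypothetical stand-in for whatever generation call a real system makes.

```python
import re
from typing import Callable


def normalize(utterance: str) -> str:
    """Lowercase and drop punctuation so small transcription differences share one cache key."""
    return " ".join(re.findall(r"[a-z0-9']+", utterance.lower()))


class CachedResponder:
    """Serve precomputed answers for frequent requests; call the model only on a cache miss."""

    def __init__(self, precomputed: dict[str, str], generate: Callable[[str], str]) -> None:
        self._answers = {normalize(q): a for q, a in precomputed.items()}
        self._generate = generate
        self.hits = 0
        self.misses = 0

    def respond(self, utterance: str) -> str:
        key = normalize(utterance)
        if key in self._answers:
            self.hits += 1
            return self._answers[key]
        self.misses += 1
        # Generated answers are deliberately NOT cached: only reviewed answers are replayed.
        return self._generate(utterance)


def fake_model(text: str) -> str:
    """Hypothetical placeholder for a slow model call."""
    return f"[generated answer for: {text}]"


responder = CachedResponder(
    {"Schedule my next appointment": "Sure. Which day works best for you?"},
    generate=fake_model,
)
print(responder.respond("schedule my next appointment?"))  # served from the cache
print(responder.respond("Can I take this with food?"))    # falls back to the model
```

Exact matching misses paraphrases such as “book my next visit,” so a cache keyed on a recognized intent is a common alternative. Model output stays out of the cache on purpose. That way, only answers someone has reviewed are replayed automatically.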

One commercial API built for this level of performance is Graphlogic’s Text-to-Speech API. It offers sub-50 millisecond latency as well as on-premise deployment, which is essential in regions with strict data privacy regulations or limited cloud infrastructure.

Low latency is not a luxury. It is a prerequisite for trust. People won’t wait for bots to think.

Accuracy: Why Getting It “Mostly Right” Isn’t Enough in Healthcare

Accuracy in voice AI isn’t about perfection. It’s about reliability. A voice assistant must not only hear the right words but also understand what the user actually means. In high-stakes sectors like healthcare, law, or finance, even a small error can lead to a misdiagnosis, a compliance violation, or the loss of a client.

The standard is demanding. Medical transcription tools, for example, need to maintain a Word Error Rate (WER) below 10% to be considered viable. But raw numbers only tell part of the story. The real challenge is nuance. Can the AI distinguish between “Advil” and “Ativan”? Can it catch a whispered “I’m in pain”? Can it detect a potentially urgent issue hidden in vague or hesitant language?
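WER is usually computed as (substitutions + deletions + insertions) divided by the number of words in the reference transcript, using a word-level edit distance. The sketch below implements that standard definition in plain Python. The example sentence is made up to show why a low WER can still hide a dangerous error.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = minimum word edits to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # i deletions
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # j insertions
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                 # delete a reference word
                dist[i][j - 1] + 1,                 # insert a hypothesis word
                dist[i - 1][j - 1] + substitution,  # match or substitute
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# Made-up example: one substituted word in a 13-word sentence.
reference = "the patient says she takes advil twice a day for lower back pain"
hypothesis = "the patient says she takes ativan twice a day for lower back pain"
print(round(word_error_rate(reference, hypothesis), 3))  # 0.077
```

One wrong word in thirteen gives a WER of about 7.7%, comfortably under a 10% bar. Yet the substitution turns an over-the-counter painkiller into a prescription sedative. Aggregate WER weights every word equally, which is exactly the nuance problem described above.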

Truly accurate voice AI systems rely on three key capabilities.

First, they need strong intent recognition to understand what the user is trying to do, not just the literal words being spoken.

Second, they require contextual memory to handle follow-up questions, shifting tones, or long interactions without losing track of the conversation.

Third, they must have deep domain knowledge, including technical terms, abbreviations, and culturally specific phrasing.
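Domain knowledge also means knowing which words are dangerous to get wrong. One simple safeguard is to ask for confirmation when a sound-alike medication name appears in a low-confidence transcript. The sketch below rests on several assumptions, all hypothetical: the `CONFUSABLE_TERMS` table, a single utterance-level `confidence` score, and the 0.9 threshold. Real ASR engines may report confidence per word, and a real term list would come from clinical reviewers.

```python
import re

# Hypothetical sound-alike pairs for one deployment; a real list would be curated by clinicians.
CONFUSABLE_TERMS = {
    "advil": "ativan",
    "ativan": "advil",
}


def confirmation_prompts(transcript: str, confidence: float, threshold: float = 0.9) -> list[str]:
    """Ask the caller to confirm any confusable term heard in a low-confidence transcript.

    `confidence` is assumed to be a single 0-1 score for the whole utterance from the ASR engine.
    """
    if confidence >= threshold:
        return []
    words = re.findall(r"[a-z]+", transcript.lower())
    return [
        f"Just to confirm: did you say {word.capitalize()}, not {CONFUSABLE_TERMS[word].capitalize()}?"
        for word in dict.fromkeys(words)  # de-duplicate while keeping order
        if word in CONFUSABLE_TERMS
    ]


print(confirmation_prompts("I took two Ativan last night", confidence=0.72))
# ['Just to confirm: did you say Ativan, not Advil?']
```

Above the threshold, the sketch trusts the transcript and stays quiet. A short clarifying question is cheap compared with acting on the wrong medication name.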

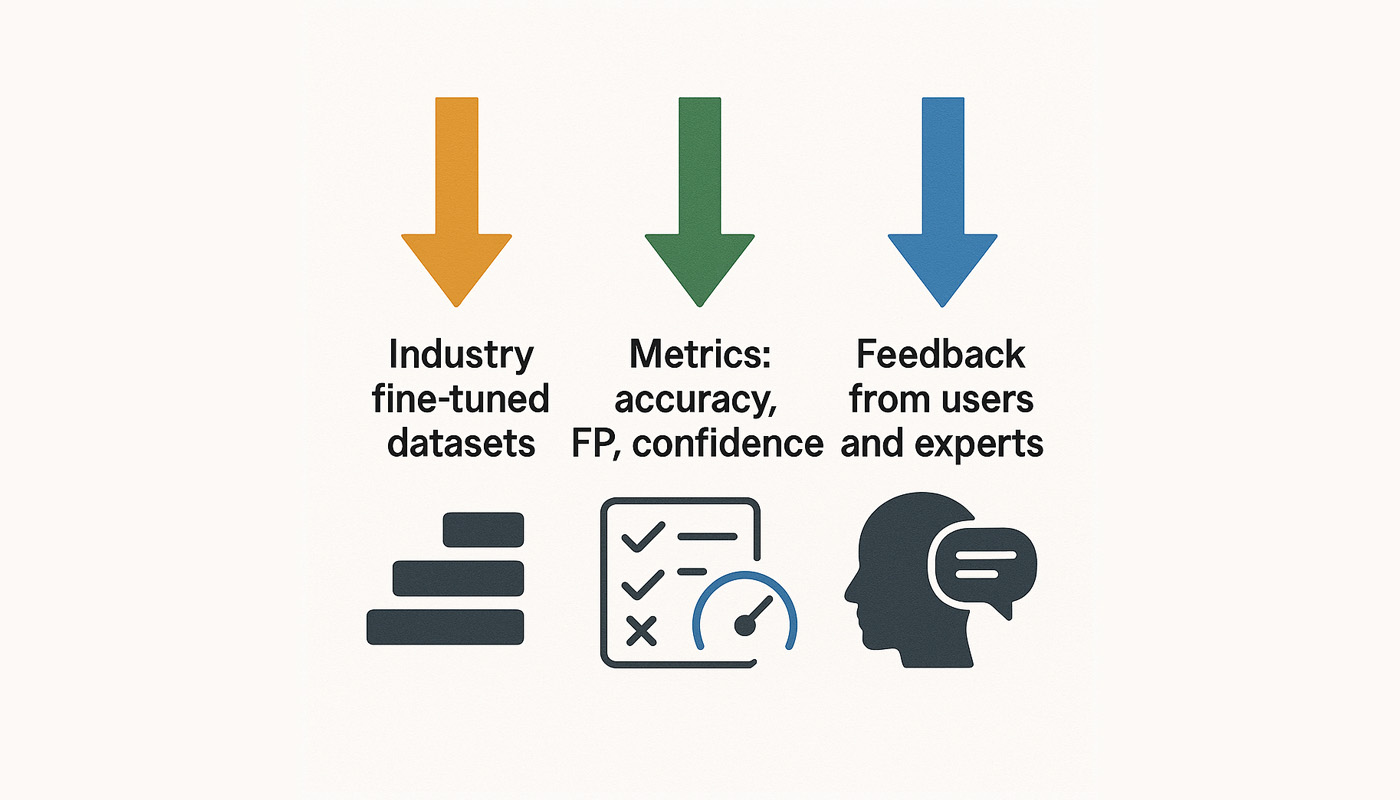

Training alone isn’t enough to reach this level of accuracy. High-performing systems also need:

- Fine-tuned datasets from the specific industry they serve

- Evaluation metrics that go beyond raw accuracy, such as false-positive rates and confidence intervals

- Ongoing feedback from real users and subject-matter experts to improve performance over time
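To see why accuracy alone can mislead, consider a hypothetical detector that flags urgent calls. The sketch below computes recall, the false-positive rate, and a Wilson score interval (a standard confidence interval for a proportion) from made-up evaluation counts. The class and field names are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    tp: int  # urgent calls correctly flagged
    fp: int  # routine calls wrongly flagged as urgent
    fn: int  # urgent calls the system missed
    tn: int  # routine calls correctly left alone


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion; z=1.96 gives roughly 95% coverage."""
    if trials == 0:
        return (0.0, 1.0)
    p = successes / trials
    denom = 1 + z * z / trials
    center = (p + z * z / (2 * trials)) / denom
    half_width = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials)) / denom
    return (max(0.0, center - half_width), min(1.0, center + half_width))


def summarize(c: Confusion) -> dict[str, float]:
    total = c.tp + c.fp + c.fn + c.tn
    recall_low, recall_high = wilson_interval(c.tp, c.tp + c.fn)
    return {
        "accuracy": (c.tp + c.tn) / total,
        "recall": c.tp / (c.tp + c.fn),
        "false_positive_rate": c.fp / (c.fp + c.tn),
        "recall_95ci_low": recall_low,
        "recall_95ci_high": recall_high,
    }


# Hypothetical counts from 1,000 evaluation calls, 20 of them truly urgent.
metrics = summarize(Confusion(tp=18, fp=30, fn=2, tn=950))
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Accuracy comes out near 97%, mostly because routine calls dominate. The recall interval, by contrast, runs from roughly 0.70 to 0.97. With only 20 urgent cases, this evaluation cannot rule out that the detector misses as many as about 30% of them.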

Graphlogic’s voice agents use Retrieval-Augmented Generation (RAG) to combine fast response generation with up-to-date information from external sources such as documentation or CRM systems. Grounding responses in current data, rather than only in what the model learned during training, lets the AI adapt as that data changes. A recent Nature review highlights this capability as becoming standard in clinical AI.
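The retrieval step in RAG can be sketched in a few lines. This is not Graphlogic’s implementation. It ranks documents by simple word overlap, standing in for the embedding search that production systems typically use. The documents and the prompt wording are hypothetical.

```python
import re

# Hypothetical knowledge base; in practice this might be synced from documentation or a CRM.
DOCS = {
    "clinic-hours": "The clinic is open Monday to Friday from 8am to 6pm.",
    "refill-policy": "Prescription refills are processed within 48 hours of the request.",
    "parking": "Visitor parking is available in Garage B.",
}


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k document ids ranked by word overlap with the query (ties broken by id)."""
    query_words = tokenize(query)
    scores = {doc_id: len(query_words & tokenize(body)) for doc_id, body in documents.items()}
    ranked = sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))
    return [doc_id for doc_id in ranked[:k] if scores[doc_id] > 0]


def build_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Ground the model by placing retrieved passages ahead of the user's question."""
    sources = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in retrieve(query, documents, k))
    return (
        "Answer using only the sources below. If they do not answer the question, say so.\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


print(build_prompt("How long does a prescription refill take?", DOCS))
```

The prompt is built from whatever is in `DOCS` at request time. Updating a policy document therefore changes the agent’s answers without retraining the model. Plain word overlap does not treat “refill” and “refills” as the same word, which is one reason real systems use embeddings or stemming.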

In sensitive domains, accuracy isn’t just a technical benchmark. It is what separates useful automation from risk, and it is the foundation of trust.

Cost: The Hidden Challenge in Scaling Voice AI

Many teams get excited about voice AI until the first invoice arrives. Model licensing, GPU usage, and cloud infrastructure costs can escalate quickly, especially when the service runs continuously or demand increases.

Keeping AI agents affordable starts with early planning and smart decisions. Teams can choose open-source models when long-term flexibility matters more than quick setup. They can apply parameter-efficient fine-tuning methods instead of retraining full models, which saves both time and resources. Well-designed prompts and concise responses also reduce compute load, since every extra token adds to processing time and cost. Choosing between cloud infrastructure and edge computing early on is important too. Cloud is scalable but often more expensive, while edge solutions offer lower latency and better cost control in specific environments.
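The effect of verbosity and deployment choice on cost comes down to simple arithmetic. The sketch below uses hypothetical per-1,000-token prices and a hypothetical fixed monthly cost for self-hosting. Substitute your provider’s actual rates and your own infrastructure costs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageProfile:
    calls_per_month: int
    input_tokens_per_call: int
    output_tokens_per_call: int


def cost_per_call(usage: UsageProfile, usd_per_1k_input: float, usd_per_1k_output: float) -> float:
    return (
        usage.input_tokens_per_call / 1000 * usd_per_1k_input
        + usage.output_tokens_per_call / 1000 * usd_per_1k_output
    )


def monthly_cost(usage: UsageProfile, usd_per_1k_input: float, usd_per_1k_output: float) -> float:
    return usage.calls_per_month * cost_per_call(usage, usd_per_1k_input, usd_per_1k_output)


def break_even_calls(cloud_per_call: float, onprem_fixed_monthly: float, onprem_per_call: float) -> float:
    """Monthly call volume above which a fixed-cost self-hosted setup becomes cheaper than pay-per-use."""
    saving_per_call = cloud_per_call - onprem_per_call
    if saving_per_call <= 0:
        return float("inf")  # self-hosting never catches up
    return onprem_fixed_monthly / saving_per_call


# Hypothetical prices: $0.003 per 1k input tokens, $0.015 per 1k output tokens.
verbose = UsageProfile(calls_per_month=100_000, input_tokens_per_call=1_500, output_tokens_per_call=300)
concise = UsageProfile(calls_per_month=100_000, input_tokens_per_call=1_500, output_tokens_per_call=150)
print(f"verbose: ${monthly_cost(verbose, 0.003, 0.015):,.2f}")  # verbose: $900.00
print(f"concise: ${monthly_cost(concise, 0.003, 0.015):,.2f}")  # concise: $675.00

# Hypothetical self-hosting: $4,000/month fixed plus $0.001 per call in power and operations.
calls = break_even_calls(cost_per_call(verbose, 0.003, 0.015), 4_000, 0.001)
print(f"break-even: {calls:,.0f} calls/month")  # break-even: 500,000 calls/month
```

In this made-up scenario, halving the output length cuts the monthly bill by a quarter, because output tokens are priced higher. Below roughly 500,000 calls a month, pay-per-use stays cheaper than self-hosting under these assumptions.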

This challenge is especially apparent in healthcare. According to a 2023 McKinsey report, many hospitals are experimenting with generative AI, but few have scaled beyond pilot programs. The report notes that without clear strategies for managing costs and proving value, these technologies risk remaining stuck in early testing phases rather than becoming operational tools.

Graphlogic helps teams manage these challenges through modular deployments. Speech services such as transcription and voice generation can be run independently, either in the cloud or on-premise, with billing based only on actual usage. Combined with a token-efficient model architecture, this approach keeps operational costs predictable even at large scale.

Ultimately, building effective voice AI is not just about performance. It is about whether that performance can be delivered consistently and affordably across entire organizations.

Humanity: Why Users Stay or Leave Based on Tone

Most people do not care how your voice AI works. They care how it feels. Do they feel heard? Do the responses sound natural? Can the system recognize emotions such as frustration, fear, or urgency?

Creating a human-like experience is not about imitating casual speech. It means:

- Responding with empathy when users sound confused or distressed

- Adjusting to tone, slang, or culturally specific expressions

- Avoiding scripted replies that sound artificial or dismissive

A study published in Frontiers in Psychology found that perceived empathy in AI systems significantly improves user trust and engagement. This effect is especially strong in mental health and chronic care settings.

To achieve this, voice AI systems are built with features such as:

- Emotion recognition, which uses voice patterns and word choice to shape responses

- Personalization memory, which allows the system to remember names, preferences, and previous questions

- Tone control, which adapts vocabulary and pacing for different users, including children, older adults, and non-native speakers
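These three features meet at the point where text becomes speech. The sketch below is a deliberately crude illustration, not how VoiceBox or any particular engine works. It detects distress from a hypothetical keyword list, where real systems would also use acoustic cues. It then uses the caller’s remembered name and picks a speaking rate per audience. Finally, it wraps the reply in SSML using the standard `<speak>` and `<prosody rate="...">` elements. The cue list and the rate presets are assumptions.

```python
import re
from typing import Optional
from xml.sax.saxutils import escape

# Hypothetical distress cues; a real system would combine acoustic features with a trained classifier.
DISTRESS_CUES = ("pain", "scared", "can't breathe", "frustrated", "confused", "help")
_CUE_PATTERN = re.compile(r"\b(?:" + "|".join(re.escape(cue) for cue in DISTRESS_CUES) + r")\b")

# Assumed speaking-rate presets; "slow" and "medium" are SSML 1.1 prosody rate keywords.
RATE_BY_AUDIENCE = {"default": "medium", "older_adult": "slow", "child": "slow", "non_native": "slow"}


def sounds_distressed(transcript: str) -> bool:
    """Whole-word keyword match on the lowercased transcript."""
    return _CUE_PATTERN.search(transcript.lower()) is not None


def build_ssml(reply: str, transcript: str, audience: str = "default", name: Optional[str] = None) -> str:
    """Wrap a reply in SSML, slowing down and acknowledging the caller when distress cues appear."""
    parts = []
    if sounds_distressed(transcript):
        apology = "I'm sorry you're going through this"
        parts.append(f"{apology}, {name}." if name else f"{apology}.")  # personalization memory
        rate = "slow"
    else:
        rate = RATE_BY_AUDIENCE.get(audience, "medium")
    parts.append(reply)
    # escape() handles &, < and > so the text cannot break the SSML markup.
    return f'<speak><prosody rate="{rate}">{escape(" ".join(parts))}</prosody></speak>'


print(build_ssml("Let's get a nurse on the line right away.", "I'm in a lot of pain", name="Maria"))
```

Keyword matching like this misfires easily. A caller who says “no pain at all” would trigger it, which is why emotion recognition should draw on voice patterns as well as word choice.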

Graphlogic’s VoiceBox API supports voice cloning and modulation in 32 languages. This allows companies to create voice interactions that reflect tone, age, gender, and regional accent, making AI more accessible and relatable for users in different regions and industries.

In the end, creating a human-centered voice experience is not about pretending to be human. It is about delivering enough empathy and nuance for users to feel respected and understood.

Final Word: Don’t Just Build Smart Voice AI, Build Usable Systems

Voice AI does not need to be flawless. It needs to be usable. That means it must be fast, accurate, affordable, and pleasant to interact with, all at the same time.

Trade-offs are always part of the process:

- Choose low latency when speed is the top priority

- Focus on accuracy when decisions carry real consequences

- Prioritize cost when scaling across multiple channels or regions

- Optimize for natural tone when building user trust is essential
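One lightweight way to make these trade-offs explicit is to give each candidate setup a weighted score. The ratings and weights below are hypothetical. In practice, they would come from your own latency, accuracy, cost, and user-feedback measurements.

```python
# Hypothetical 1-5 ratings for two candidate setups; replace with your own measurements.
OPTIONS: dict[str, dict[str, float]] = {
    "cloud_large_model": {"latency": 3, "accuracy": 5, "cost": 2, "tone": 4},
    "edge_small_model": {"latency": 5, "accuracy": 3, "cost": 4, "tone": 3},
}


def weighted_score(ratings: dict[str, float], weights: dict[str, float]) -> float:
    return sum(weights.get(criterion, 0.0) * value for criterion, value in ratings.items())


def pick(weights: dict[str, float]) -> str:
    """Return the option with the highest weighted score for the given priorities."""
    return max(OPTIONS, key=lambda name: weighted_score(OPTIONS[name], weights))


triage_line = {"latency": 0.5, "accuracy": 0.2, "cost": 0.2, "tone": 0.1}
clinical_notes = {"latency": 0.1, "accuracy": 0.6, "cost": 0.1, "tone": 0.2}
print(pick(triage_line))     # edge_small_model
print(pick(clinical_notes))  # cloud_large_model
```

A speed-weighted profile picks the edge setup, while an accuracy-weighted one picks the larger cloud model. Some requirements work better as hard limits than as weights. A WER ceiling, for example, should rule an option out rather than merely lower its score.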

Graphlogic provides a flexible set of tools to help teams balance these needs. From real-time APIs to generative platforms with built-in context management, these tools are designed to support performance across a range of use cases.

But technology alone is not enough. Success with voice AI depends on how clearly you define your users, your goals, and your boundaries.

Because in the end, voice agents do more than answer questions. They speak on behalf of your brand, your service, and your values.

FAQ

Why does latency matter so much in voice AI?

Even small delays can disrupt the conversation or break trust. Fast responses are critical, especially in high-pressure settings like healthcare or support.

What does accuracy mean for a voice agent?

Accuracy means understanding what users mean, not just what they say. It also includes handling context and industry-specific language correctly.

Why is cost such a challenge?

Running voice AI takes real compute power and infrastructure. Without careful planning, costs rise quickly and systems stay stuck in pilot phases.

What makes a voice agent feel human?

It’s about how natural and empathetic the AI sounds. Users need to feel understood, especially in emotional or sensitive conversations.

How does Graphlogic approach these challenges?

Graphlogic focuses on usable voice AI with fast APIs, real-time context handling, and support for personalization across languages and platforms.

Can one system be fast, accurate, affordable, and human-like at the same time?

Not fully. Trade-offs are always involved. The key is finding the right balance based on the use case.