Language models dominate AI research and industry. They power search, healthcare advice, education and even policy analysis. But their evaluation remains one of the hardest questions in modern AI. Without fair tests we cannot tell whether a new model truly improves or only changes style. Benchmarks define the field.

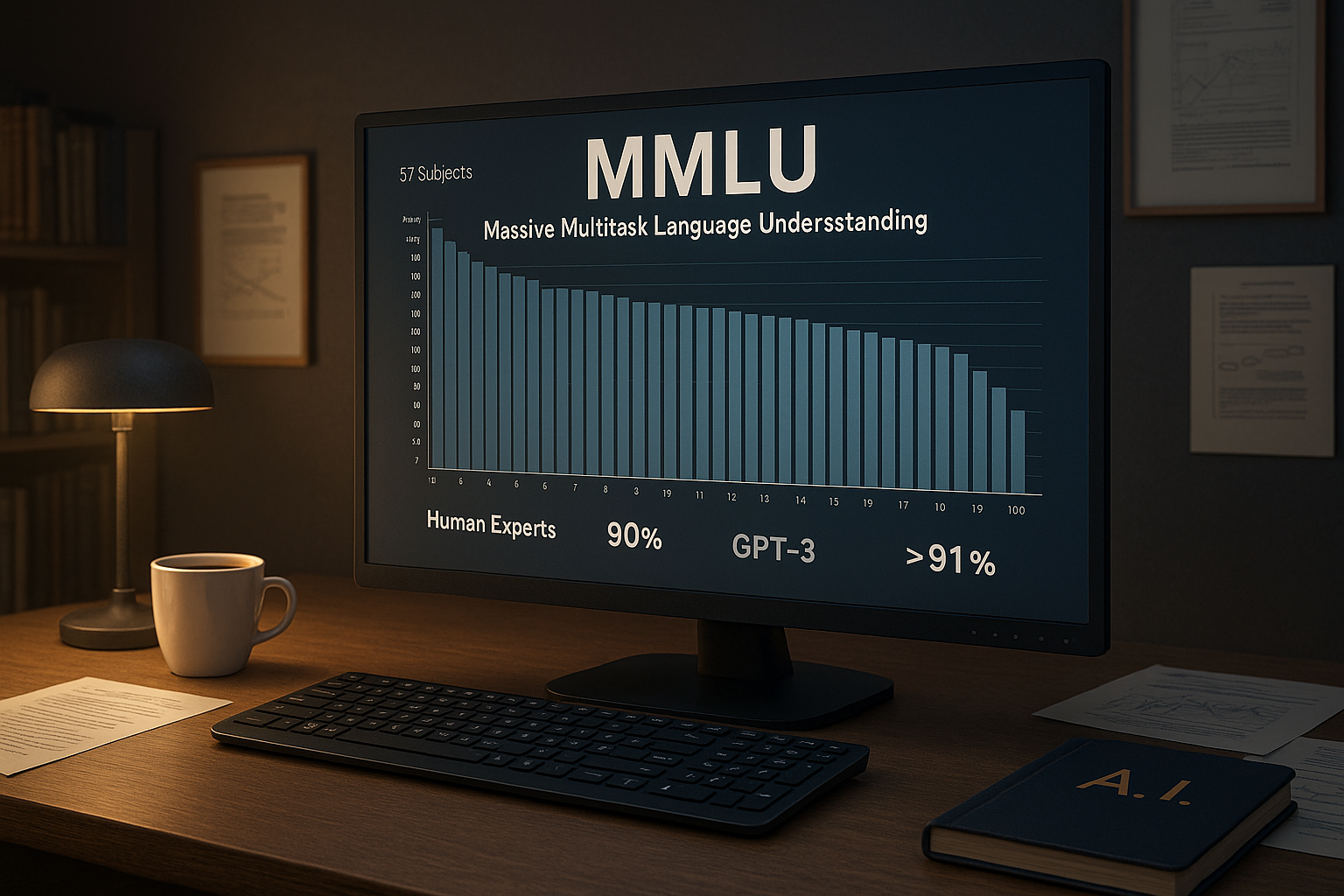

The Massive Multitask Language Understanding benchmark, or MMLU, stands out. It covers nearly 16 000 questions across 57 subjects. Human experts score near 90 %. When GPT-3 was released in 2020, its score was only 44 %. By 2025 GPT-5 is reported at over 91 %, and leading models average about 88 %.

This fast growth shows the benchmark’s value but also its limits. Are we seeing true reasoning or statistical tricks? This article explores MMLU from design to current status. It also explains why future benchmarks must go beyond it.

History of Benchmarking in Language Models

Before MMLU the field relied on smaller tests that measured narrow abilities. The most visible benchmarks were GLUE and SuperGLUE. GLUE appeared in 2018 with 9 tasks such as natural language inference, paraphrase detection and sentiment analysis. At first it gave researchers a common ground. Within a short time, models built on BERT, such as RoBERTa, passed the human baseline, showing how fast the field was moving.

SuperGLUE followed in 2019. It raised difficulty with reading comprehension and coreference tasks. For a while progress slowed, but by 2021 many models already scored above 90 %. The lesson was clear. Benchmarks that focus on small language tasks cannot capture the broader reasoning skills of new models.

MMLU arrived in 2020, at that turning point. It covered 57 subjects with nearly 16 000 multiple choice questions. Its design looked like a real exam instead of a research puzzle. A model that could pass MMLU would show real academic or professional knowledge. That made it harder to saturate and more appealing to both labs and companies.

Larger frameworks followed in 2022. BIG-bench explored hundreds of tasks across logic, mathematics and creativity. HELM added dimensions like robustness, fairness and safety. Both helped broaden evaluation, but they also showed limits: BIG-bench was complex to score, and HELM required heavy interpretation. Neither felt like a simple but deep test in the way MMLU did.

Because of its timing and breadth, MMLU quickly became the main reference in AI papers and corporate reports. It linked earlier work on narrow tasks with the new wave of general models.

Why Benchmarking Still Matters for LLMs

Benchmarks remain essential because they make claims measurable. Without them, progress would be based on marketing or personal impression. A chatbot may look fluent in conversation but fail when asked a technical question. MMLU exposes such gaps by showing how models handle knowledge under exam conditions.

Benchmarks also shape trust and investment. A score of 91 % on MMLU is not just a number. For companies it signals technical strength. For investors it suggests readiness for deployment. For regulators it helps compare risks across models.

Yet over time benchmarks face new risks. Public datasets can leak into training corpora, so models may memorize instead of reason. Accuracy then reflects exposure rather than skill. Experts call this contamination. It is one reason why labs design harder sets like MMLU-Pro and multilingual versions such as MMLU-ProX. These aim to test reasoning without relying on memorized items.

Another challenge is political. Benchmarks decide who looks ahead in the AI race. A strong MMLU score brings headlines. A weak one can affect reputation. This makes the design and use of benchmarks more than a technical task. They influence careers, funding, and policy debates.

In short, benchmarking remains the foundation of evaluation. But it must evolve to stay relevant. MMLU shows how much can be gained, while its limits remind us that no single test is enough.

Researchers counter contamination in two ways. They rephrase prompts, or they build new sets like MMLU-Pro that aim to preserve the challenge. The attention that a 91 % accuracy claim draws from investors shows why this matters: benchmarking is not only technical but political.

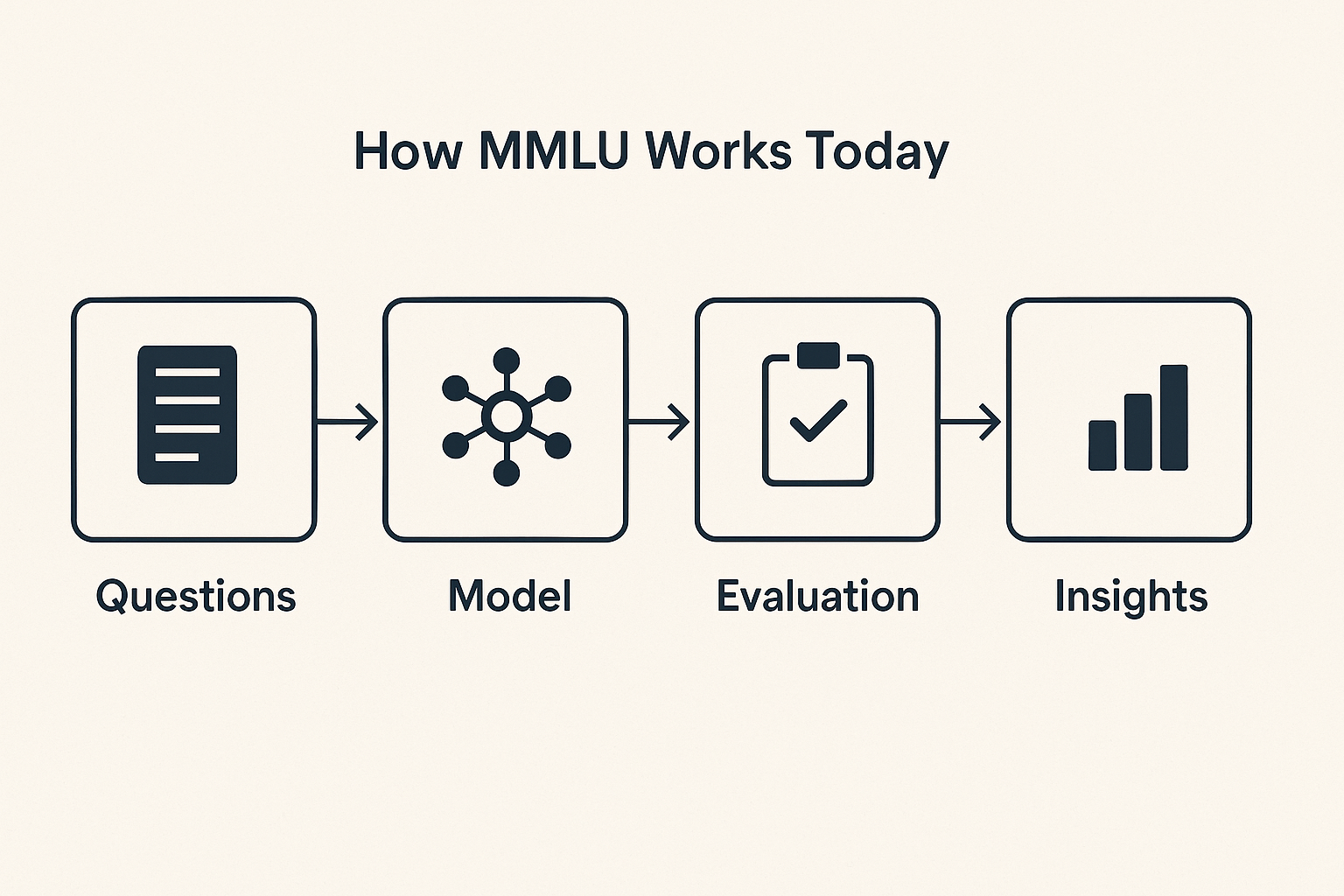

How MMLU Works Today

Running MMLU may look simple, but in practice it requires care. The dataset holds 15 908 questions in total. Most of them form the test split on which scores are reported, and the small remainder serves as few-shot examples and validation items. Models must answer without training directly on the dataset. Accuracy is calculated as the share of correct responses. Results are reported overall and by subject area.

A model with 88 % accuracy still fails roughly one in eight questions. That margin is significant when the domain is medicine, finance or law. GPT-5 reached 91.38 % across ten sampled subjects in one published report. Its strongest scores were in physics, where accuracy exceeded 94 %. In college chemistry performance dropped to 64 %, showing that even top models have clear blind spots. These variations help developers identify where improvement is most urgent.
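The scoring described above can be sketched in a few lines. This is a minimal illustration and not the official MMLU harness: the record layout `(subject, predicted, answer)` and the function name `score` are assumptions made for the example, and the four records are toy data.

```python
from collections import defaultdict

def score(records):
    """Return (overall_accuracy, {subject: accuracy}) for graded answers.

    Each record is a (subject, predicted_letter, correct_letter) tuple.
    This layout is an assumption for the sketch, not an MMLU file format.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for subject, predicted, answer in records:
        total[subject] += 1
        correct[subject] += int(predicted == answer)
    per_subject = {s: correct[s] / total[s] for s in total}
    # Overall accuracy is the share of all questions answered correctly.
    overall = sum(correct.values()) / sum(total.values())
    return overall, per_subject

# Toy data, invented for illustration only.
records = [
    ("physics", "A", "A"),
    ("physics", "C", "C"),
    ("college_chemistry", "B", "D"),
    ("college_chemistry", "A", "A"),
]

overall, per_subject = score(records)
print(f"overall: {overall:.0%}")
# List the weakest subjects first, since those are the blind spots.
for subject, acc in sorted(per_subject.items(), key=lambda kv: kv[1]):
    print(f"{subject}: {acc:.0%}")
```

Sorting by accuracy puts the weakest subjects at the top, which is usually the part of the report a developer wants to read first.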

MMLU also provides a way to rank competing models. GPT-5, O3 and GPT-OSS differ by narrow margins. O3 is reported at 88.6 %. GPT-OSS comes close with 88.4 %. On the benchmark itself that gap amounts to a few dozen questions. In high stakes fields, however, models answer at far larger scale, and there a difference of even half a percentage point can mean thousands of additional correct or incorrect answers. This is why leaders still rely on MMLU numbers for competitive comparison.
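To make the size of such gaps concrete, the arithmetic can be written out. The sketch below assumes the 15 908 figure quoted in this article as the question count, and the one million queries are a hypothetical deployment volume chosen only for illustration.

```python
TOTAL_QUESTIONS = 15_908  # dataset size quoted in this article

def extra_answers(gap_points, n_items):
    """Number of items affected by an accuracy gap given in percentage points."""
    return round(n_items * gap_points / 100)

# Gap between 88.6 % and 88.4 % on the benchmark itself.
on_benchmark = extra_answers(0.2, TOTAL_QUESTIONS)

# Half a percentage point on the benchmark, then at a hypothetical
# deployment volume of one million queries.
half_point_benchmark = extra_answers(0.5, TOTAL_QUESTIONS)
half_point_at_scale = extra_answers(0.5, 1_000_000)

print(on_benchmark)          # 32
print(half_point_benchmark)  # 80
print(half_point_at_scale)   # 5000
```

The same gap that covers a few dozen benchmark questions grows into thousands of answers once the query volume reaches the millions.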

Another element of execution is prompt design. Consistent formatting is essential. Even minor changes in phrasing can shift results. Researchers often run multiple prompt versions to ensure reliability. This process highlights the balance between benchmark accuracy and real world robustness.
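A minimal sketch of that practice follows, assuming two invented prompt templates and a placeholder `ask_model` callable that maps a prompt string to an answer letter. Neither the templates nor the toy model come from any official MMLU setup. They only show how the same items can be scored under several phrasings.

```python
CHOICES = "ABCD"

# Two hypothetical templates; real evaluations fix their own wording.
TEMPLATES = {
    "plain": "{question}\n{options}\nAnswer:",
    "instruct": (
        "Choose the correct option.\n\n"
        "Question: {question}\n{options}\nAnswer with a single letter:"
    ),
}

def format_question(question, options, template):
    """Render one multiple choice item with a fixed template."""
    lines = [f"{letter}. {text}" for letter, text in zip(CHOICES, options)]
    return template.format(question=question, options="\n".join(lines))

def accuracy_by_template(items, ask_model):
    """Score the same items under every template.

    `ask_model` is a placeholder: any callable from prompt to answer letter.
    """
    results = {}
    for name, template in TEMPLATES.items():
        hits = [
            ask_model(format_question(q, opts, template)) == answer
            for q, opts, answer in items
        ]
        results[name] = sum(hits) / len(hits)
    return results

# Toy items and a toy model that is deliberately sensitive to phrasing.
items = [
    ("2 + 2 = ?", ["4", "3", "5", "22"], "A"),
    ("Capital of France?", ["Rome", "Paris", "Oslo", "Bern"], "B"),
]

def toy_model(prompt):
    return "B" if "Paris" in prompt and "single letter" in prompt else "A"

results = accuracy_by_template(items, toy_model)
spread = max(results.values()) - min(results.values())
print(results, f"spread: {spread:.2f}")
```

Reporting the spread between the best and worst template alongside the headline score gives a quick sense of how much of the number depends on wording.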

Key Features of MMLU

- Coverage across 57 expert domains from humanities to hard sciences

- Difficulty levels that range from basic recall to graduate level reasoning

- Results that can be broken down by individual subject for fine analysis

- Adaptability to many model architectures and scales

- Public availability, which allows both academics and industry teams to use it

These features make MMLU stand out from earlier benchmarks. Unlike GLUE or SuperGLUE, it is not limited to narrow natural language tasks. Instead it functions as a comprehensive exam that resembles professional or university level testing. This design gives MMLU more credibility outside of research. When a company claims high scores on MMLU, the implication is that its model can handle complex knowledge similar to what a trained professional might face.

Because of this unique combination of breadth, depth and accessibility, MMLU is now seen as a central point of reference for measuring progress in large language models.

Evaluating Models with MMLU in 2025

Developers follow consistent pipelines. They set up a clean test environment. They run models with standard prompts. They measure overall accuracy and per subject scores.

Interpreting results needs caution. A high global score may hide deep weaknesses. A model that excels in physics may still fail in law or medicine. Only breakdowns reveal this.

Companies use MMLU in public reports. They present results to prove progress. Yet insiders stress that results should be compared cautiously. Two models with 88 % may behave very differently in deployment.
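One rough way to see why similar scores call for caution is to attach a sampling interval to each accuracy. The sketch below uses the normal approximation for a binomial proportion and treats every question as an independent sample. That is a simplifying assumption and not part of any MMLU protocol. The question counts are illustrative: 15 908 is the total quoted in this article, and 100 stands in for a single subject.

```python
import math

def accuracy_interval(accuracy, n_questions, z=1.96):
    """Approximate 95 % interval for an accuracy measured on n_questions.

    Uses the normal approximation to the binomial; z=1.96 gives roughly
    95 % coverage when questions are treated as independent samples.
    """
    std_err = math.sqrt(accuracy * (1 - accuracy) / n_questions)
    return accuracy - z * std_err, accuracy + z * std_err

full_low, full_high = accuracy_interval(0.88, 15_908)
subj_low, subj_high = accuracy_interval(0.88, 100)

print(f"full set:    {full_low:.3f} to {full_high:.3f}")
print(f"one subject: {subj_low:.3f} to {subj_high:.3f}")
```

Under these assumptions the full-set interval is about half a percentage point on either side, so scores such as 88.6 % and 88.4 % overlap. A single subject with around 100 questions carries an interval of roughly six points on either side, which is why per subject differences need even more care.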

Case Studies of Model Performance

GPT-5 achieved 91 % in controlled testing. GPT-4o remains closer to 88 %. Claude 3 Opus and LLaMA 3 hover in the mid 80s. MiniMax-M1 reports about 81 %.

MMLU-Pro introduced a harder version with ten answer choices instead of four and more quality control. Models scored lower on this variant. This shows that difficulty level matters.

The key lesson is that even top scores do not equal mastery. Each model still fails more than a thousand questions.

Broader Applications of MMLU

MMLU is not only a test for labs. It is used by educators to see whether LLMs can tutor in science. It is used in medicine to test clinical knowledge of AI assistants. Hospitals explore whether models that score high on medical sections can assist doctors.

In business, firms use results to decide which models to integrate into customer service. A higher score in law sections may matter for a firm working on contracts.

MMLU therefore acts both as a scientific benchmark and a practical filter.

Trends and Forecasts

Benchmarking is entering a new phase. Leading models now score above 90 % on MMLU, which signals partial saturation. This forces researchers and companies to design new benchmarks that can separate true reasoning from memorization. The focus is shifting from narrow accuracy to a broader view that includes fairness, safety and multimodality.

Multilingual expansion

MMLU-ProX now spans 13 languages with 11 829 questions in each. This makes it possible to compare performance across diverse cultures and scripts. Global-MMLU extends coverage to 42 languages and flags items that carry cultural or political bias. This matters for real applications, since most users of AI do not interact in English.

Safety benchmarks

MLCommons released AILuminate in 2024. It tests how models handle sensitive prompts such as hate speech, violence or self-harm. GPT-4o was graded "Good" while Claude was graded "Very Good". This reflects a wider industry move from focusing only on knowledge toward measuring risk control. Benchmarks are becoming tools for both developers and regulators.

Enterprise benchmarks

Some labs propose 14 task suites designed around Bloom’s taxonomy. These tasks focus on summarization, planning and analysis, which are core business functions. The goal is to check whether models can support enterprise use cases beyond casual conversation. This trend suggests that benchmarks will not only serve research but also guide procurement and deployment.

Multimodality

New benchmarks like MMMU introduce images, tables and diagrams. They test how models integrate vision and language. This matters in medicine, where doctors rely on scans, and in education, where students use charts. The ability to combine modalities is now a competitive frontier in LLM development.

Overall, the forecast is clear. Benchmarks will become more complex, more global, and more tied to safety. MMLU remains the baseline, but the ecosystem around it is expanding fast.

Challenges and Limits

MMLU is powerful but not perfect. Many of its questions are public, which creates a risk of contamination. Models may score high because they have seen similar data during training. The dataset is also English and Western focused, which limits fairness. When prompts are rephrased, accuracy can drop by about 2 percentage points, which points to weak robustness.
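One common way to screen for the contamination risk described above is to look for word-level n-gram overlap between benchmark questions and training text. The sketch below is a simplified illustration and not the method of any particular lab: the function names, the whitespace tokenization and the default of 8 words per n-gram are assumptions, and a real training corpus would need an index instead of an in-memory string.

```python
def ngrams(text, n=8):
    """Set of word-level n-grams in text, lowercased and split on whitespace."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}

def overlap_ratio(question, corpus_text, n=8):
    """Share of the question's n-grams that also occur in corpus_text."""
    question_grams = ngrams(question, n)
    if not question_grams:
        return 0.0  # question shorter than n words: nothing to compare
    shared = question_grams & ngrams(corpus_text, n)
    return len(shared) / len(question_grams)

# Toy example with short n-grams so the effect is visible.
question = "the mitochondria is the powerhouse of the cell"
leaked = "as every textbook says the mitochondria is the powerhouse of the cell"
clean = "benchmarks age quickly once models learn their structure"

leaked_ratio = overlap_ratio(question, leaked, n=3)
clean_ratio = overlap_ratio(question, clean, n=3)
print(leaked_ratio, clean_ratio)
```

A high ratio only flags an item for review. Exact overlap misses paraphrased leaks, which is one reason rephrased and freshly written question sets are used as well.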

Another challenge is that MMLU is static. Benchmarks age quickly once models learn their structure. MMLU does not include multimodal content like images or audio. It also does not measure how safe or unbiased a model is.

For these reasons labs now combine multiple benchmarks. They run MMLU for knowledge, HELM for robustness, AILuminate for safety, and MMLU-ProX for multilingual coverage. This layered approach gives a fairer evaluation. Even so, MMLU keeps its place as the main reference point.

Is MMLU the Ultimate Test?

MMLU is highly valuable but it is not final. It is a strong baseline that shows how well models handle expert-level knowledge. Yet it does not cover planning, ethical risk, multimodality or cultural fairness.

The future of evaluation will mix MMLU with new datasets that are dynamic and contamination free. Safety and ethical evaluations will also be included. MMLU will remain important but it will stand alongside other benchmarks. It is better to think of MMLU as one part of a broader system rather than the single measure of intelligence.

Practical Advice for Practitioners

- Always run MMLU in a controlled and transparent pipeline

- Analyze accuracy by subject and not only by overall score

- Compare results against MMLU-Pro or ProX to test difficulty and multilingual strength

- Add safety and bias tests such as AILuminate to cover ethical risks

- Monitor multilingual coverage to ensure readiness for global deployment

- Remember that a 91 % score still means well over a thousand failed questions

These steps help researchers and companies avoid blind trust in one number. They also prepare teams for a future where evaluation is diverse and continuous.

Product Integrations

The Graphlogic Generative AI & Conversational Platform offers more than a development toolkit. It allows teams to test language models across tasks that reflect real workflows. The platform integrates retrieval augmented generation, which is critical for domains where accuracy and traceability are essential. For example, in healthcare a model evaluated on MMLU’s medical questions can be connected to retrieval modules that cite medical literature. This makes benchmarking more than a static score and helps validate models in applied scenarios.

The Graphlogic Text-to-Speech API expands evaluation into spoken interaction. Developers can run test flows verbally, which improves accessibility for users who prefer or require audio. This also helps human reviewers who want to hear how models present answers in realistic dialogue. Combined with MMLU, it creates a bridge between benchmark numbers and lived user experience.

For research teams, these integrations provide practical tools to complement academic evaluation. They turn static benchmarks into active systems that simulate real use. In this way, Graphlogic products support the transition from controlled testing to production-ready performance.

Conclusion

By 2025 MMLU remains central to the evaluation of large language models. It tracked progress from GPT-3 at 44 % to GPT-5 at 91 %. Over this period, it exposed strengths and weaknesses across domains and gave the community a shared baseline. It also showed saturation, as many leading models now cluster between 88 % and 91 %.

The benchmark proved valuable but it left important gaps. It does not fully measure safety, cultural fairness, bias robustness or multimodal reasoning. These areas are now at the front line of AI research. New benchmarks like MMLU-ProX, AILuminate and MMMU are emerging to meet these needs.

The future of benchmarking lies in diversity. Static, single-domain scores will no longer be enough. Evaluation must become dynamic, multilingual, multimodal and safety-aware. Knowledge and reasoning will remain essential, but robustness and ethical performance must join them.

MMLU will endure as a foundation. It will stand alongside other benchmarks in a growing ecosystem. Its role is shifting from the ultimate test to one of several pillars. The lesson is that progress cannot be judged by a single number. True evaluation requires a balanced set of tools, and MMLU will remain part of that set for years to come.

FAQ

MMLU contains 15 908 questions across 57 subjects.

Human experts average about 90 % on MMLU. Students may score lower.

The highest score cited here is GPT-5 at about 91 % in reported tests.

Contamination is a concern because many models are trained on internet data. Benchmark items may leak into that data, which inflates results.

Compared with GLUE, MMLU is larger and harder. GLUE had 9 tasks and saturated fast.

MMLU-Pro and MMLU-ProX are harder and multilingual variants. They add more answer options and languages.

Multimodal benchmarks like MMMU combine images with text. They are the next frontier.

MMLU results can guide business decisions. Companies map subject results to use cases, and high medical scores may matter for health apps.

MMLU does not measure safety. It is knowledge based, so safety requires other benchmarks like AILuminate.