Speech-to-text APIs are no longer optional in sectors like healthcare, education, accessibility, and enterprise productivity. These APIs transform audio into searchable, structured text, enabling automation, analysis, and accessibility in real time. In 2025, speech processing tools power clinical transcription, customer support summaries, and global conferencing platforms. Demand for speed, accuracy, and language support continues to grow.

The landscape has become more complex, with vendors offering dozens of competing APIs. Each claims superior accuracy, scalability, or advanced features, but not all deliver. This article explains what STT APIs are, how to evaluate them, which features matter most, and which APIs hold up in production environments.

What Is a Speech-to-Text API?

A speech-to-text API (also known as STT, voice recognition API, or transcription API) takes audio input and returns the corresponding text output. These APIs rely on AI models trained to understand natural language, distinguish between speakers, and cope with background noise and varied accents. They are integrated into mobile apps, web platforms, enterprise systems, and assistive devices.

Early speech systems relied on statistical methods such as Hidden Markov Models (HMMs) and Gaussian Mixture Models (GMMs). In recent years, deep learning, especially transformer-based architectures, has reshaped the field. OpenAI’s Whisper, for example, is a multilingual model trained on roughly 680,000 hours of web audio, which makes it robust to noisy or low-quality speech. You can read the full Whisper research report on OpenAI’s site.

Another leader, Deepgram, released its Nova-3 engine in 2025, using an end-to-end deep neural model trained on massive multilingual datasets. The model supports real-time streaming and achieves low word error rates even in noisy environments. Meanwhile, Google Cloud’s Speech-to-Text API continues to evolve with broader audio format support and increased regional language capabilities — including offline transcription. See details on supported formats and features in Google’s documentation.

APIs are typically accessed via REST or gRPC endpoints, and streaming APIs often use WebSockets. Developers send audio (as a file or a stream) and receive JSON transcripts, often with metadata such as confidence scores, speaker labels, or timestamps. These APIs run behind devices in call centers, hospitals, courtrooms, and mobile phones, which makes them infrastructure rather than just software.
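The request/response cycle described above can be sketched with Python’s standard library. This is a minimal sketch under stated assumptions: the endpoint URL, the Bearer-token header, the `language` query parameter, and the JSON schema (a `words` array with `word`, `start`, `end`, and `confidence` fields) are all hypothetical placeholders, since every vendor defines its own. The code builds the request without sending it and parses a sample response.

```python
import json
import urllib.request

# Hypothetical endpoint: real vendors use their own URLs, auth schemes, and parameters.
API_URL = "https://api.example-stt.com/v1/transcribe"


def build_request(audio: bytes, api_key: str, language: str = "en") -> urllib.request.Request:
    """Prepare (but do not send) a POST request that carries raw audio bytes."""
    return urllib.request.Request(
        url=f"{API_URL}?language={language}",
        data=audio,
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth style
            "Content-Type": "audio/wav",
        },
        method="POST",
    )


def parse_transcript(body: str) -> list[tuple[str, float, float, float]]:
    """Extract (word, start_s, end_s, confidence) tuples from the assumed JSON schema."""
    payload = json.loads(body)
    return [(w["word"], w["start"], w["end"], w["confidence"]) for w in payload["words"]]


# Sample response in the hypothetical schema; real field names differ by vendor.
sample = json.dumps({
    "transcript": "hello world",
    "words": [
        {"word": "hello", "start": 0.00, "end": 0.42, "confidence": 0.98},
        {"word": "world", "start": 0.45, "end": 0.90, "confidence": 0.95},
    ],
})

req = build_request(b"placeholder audio bytes", api_key="YOUR_KEY")
print(req.get_method(), req.full_url)
print(parse_transcript(sample))
# To send for real: urllib.request.urlopen(req), then decode the body and pass it to parse_transcript.
```

Keeping request construction and response parsing in separate functions makes vendor swaps cheaper: usually only the URL, headers, and the field names in `parse_transcript` change.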

What to Consider Before Choosing a Speech-to-Text API

Choosing the right STT API requires more than comparing prices. The wrong API can result in poor data quality, slow transcription, or unusable output. Consider the following:

- Accuracy. Word Error Rate (WER) is the standard metric for transcription quality, but it’s not enough on its own. You must also consider domain-specific vocabulary (e.g., medical or legal terms), speaker overlap, accent variability, and resilience to background noise. A model with 5% WER in ideal conditions may perform far worse on real-world audio.

- Latency. For live apps (e.g., live captioning or virtual consultations), latency below 300 ms is critical. Some APIs, like Deepgram’s Nova-3, achieve sub-200 ms performance on streaming input. In contrast, batch-oriented models like OpenAI’s Whisper are better suited to post-processing than to real-time use.

- Pricing Structure. Costs range from $0.005 to $0.02 per audio minute depending on volume and features. Some vendors charge extra for diarization or multi-language support. Be cautious of APIs that charge for partial transcriptions or metadata. Azure, for instance, provides flexible tiers but quickly scales up in price for enterprise-grade usage.

- Audio Modality. APIs vary in how they accept audio — some support real-time streaming, others only file uploads. For real-time scenarios (like live calls), look for WebSocket or gRPC support. If you only need asynchronous transcription, batch APIs are simpler.

- Customization & Security. Many modern platforms let you upload domain-specific vocabulary or fine-tune models using your own audio. Deepgram, AssemblyAI, and Google offer these options. Compliance with HIPAA, GDPR, and SOC 2 is essential in medical, legal, and educational settings. See AssemblyAI’s security overview for example protocols.

- Scalability and API Limits. Check how vendors handle burst traffic or high concurrency. Some cap the number of simultaneous streams or require pre-allocation of resources. If your use case involves thousands of recordings per hour, look for elastic scaling policies.

- Maintenance and Transparency. Frequent model updates, clear changelogs, and usage analytics are signs of a mature vendor. Providers that publish performance benchmarks, like Deepgram and AssemblyAI, tend to be more trustworthy over the long term.
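Because pricing is per audio minute, a quick estimate helps compare vendors at your expected volume. For example, 10,000 hours of audio is 600,000 minutes, which costs about $3,000 at $0.005 per minute and about $12,000 at $0.02 per minute. The sketch below assumes a flat per-minute rate; `addon_per_min` is a hypothetical parameter standing in for separately billed features, and it ignores the volume discounts and tiers that real price sheets often include.

```python
def monthly_cost(audio_hours: float, rate_per_min: float, addon_per_min: float = 0.0) -> float:
    """Estimated monthly spend in USD: minutes of audio times the per-minute rate.

    addon_per_min is a hypothetical surcharge for features some vendors bill
    separately (for example diarization); take the real value from a price sheet.
    """
    minutes = audio_hours * 60
    return round(minutes * (rate_per_min + addon_per_min), 2)


hours = 10_000  # e.g. a contact center transcribing 10,000 hours of calls per month
for rate in (0.005, 0.01, 0.02):  # spans the $0.005 to $0.02 range quoted above
    print(f"${rate}/min -> ${monthly_cost(hours, rate):,.2f} per month")
```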

Most Important Features in Modern STT APIs

Today’s best APIs do much more than basic transcription. These are the core features you should look for:

- Multi-language and dialect support. If your user base is global, language coverage matters. Google’s STT API supports over 125 languages. Whisper supports 99, although its accuracy drops noticeably for low-resource languages with little training data.

- Speaker Diarization. This lets you differentiate between multiple speakers, labeling who said what in a transcript. It’s critical for meetings, interviews, and medical consultations. Many APIs require diarization to be explicitly enabled, and some offer it only on certain tiers, so check availability for your plan.

- Custom Vocabulary and Boosting. APIs should allow tuning for names, acronyms, or field-specific jargon. For example, a tool for endocrinologists should recognize “metformin” or “TSH” without phonetic errors.

- Profanity Filtering and PII Redaction. Many APIs allow sensitive content to be redacted or replaced. AssemblyAI, for instance, includes automatic redaction for phone numbers, names, and addresses. You can read about this feature in AssemblyAI’s documentation.

- Natural Formatting and Timestamps. Look for sentence segmentation, punctuation, and speaker-attributed time codes. These features improve readability and simplify downstream processing.

- NLU and Summarization. Some APIs go beyond transcription by analyzing tone, detecting topics, or even summarizing the content. While not strictly STT, these extras reduce the need for post-processing.
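Diarization labels and timestamps usually arrive at the word level, while readers want speaker turns. The sketch below assumes a hypothetical response in which every word carries `start`, `end`, and `speaker` fields (field names vary by vendor) and merges consecutive words from the same speaker into timestamped turns.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass
class Word:
    text: str
    start: float   # seconds from the start of the audio
    end: float
    speaker: str   # label from the API's diarization, e.g. "A", "B" (assumed format)


@dataclass
class Turn:
    speaker: str
    start: float
    end: float
    text: str


def to_turns(words: list[Word]) -> list[Turn]:
    """Merge consecutive words from the same speaker into one timestamped turn."""
    turns = []
    # groupby only groups *adjacent* items, so a speaker who talks twice gets two turns.
    for speaker, group in groupby(words, key=lambda w: w.speaker):
        chunk = list(group)
        turns.append(Turn(speaker, chunk[0].start, chunk[-1].end,
                          " ".join(w.text for w in chunk)))
    return turns


words = [
    Word("How", 0.0, 0.2, "A"), Word("are", 0.2, 0.35, "A"), Word("you?", 0.35, 0.6, "A"),
    Word("Fine,", 0.9, 1.2, "B"), Word("thanks.", 1.2, 1.6, "B"),
]
for t in to_turns(words):
    print(f"[{t.start:5.2f}-{t.end:5.2f}] Speaker {t.speaker}: {t.text}")
```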

A strong example of feature-rich infrastructure is the Graphlogic Speech-to-Text API. It offers multi-language transcription, real-time streaming, and integration with its broader AI platform. Developers can also combine it with Graphlogic’s Generative AI Platform to build full conversational agents, adding TTS, avatar rendering, and dialogue memory.

Where STT APIs Are Used Most

Speech-to-text APIs power a wide range of software products. These are the main sectors using STT in 2025:

Healthcare

Doctors use STT tools for clinical dictation, SOAP notes, and patient summaries. Voice input reduces screen time and improves documentation speed. Accuracy is vital, especially for medication names and diagnoses.

Customer Service

Contact centers use transcription for QA monitoring, real-time agent assistance, and automatic follow-ups. Transcripts can be mined for sentiment, intent, and compliance violations.

Accessibility

Real-time captions for video calls, public announcements, or classroom lectures help users with hearing loss. Government mandates in many countries now require captioning in public media, boosting demand for accurate live transcription.

Education and Research

Professors and students rely on transcription for indexing lectures, creating study materials, and analyzing interviews. STT APIs are often integrated into learning management systems (LMS) or knowledge bases.

Media and Content Creation

Podcasters and video editors use STT to create subtitles and searchable transcripts. Automatic editing tools often rely on speech-text alignment.

Voice Interfaces and Smart Devices

Apps like personal assistants, voice-activated dashboards, and wearable tech use STT to parse user commands. These systems often operate under challenging acoustic conditions.

Each of these sectors benefits from different strengths: healthcare requires compliance, contact centers prioritize real-time performance, and media teams need clean, well-formatted text. Before choosing a provider, test on your actual use case, not demo files.

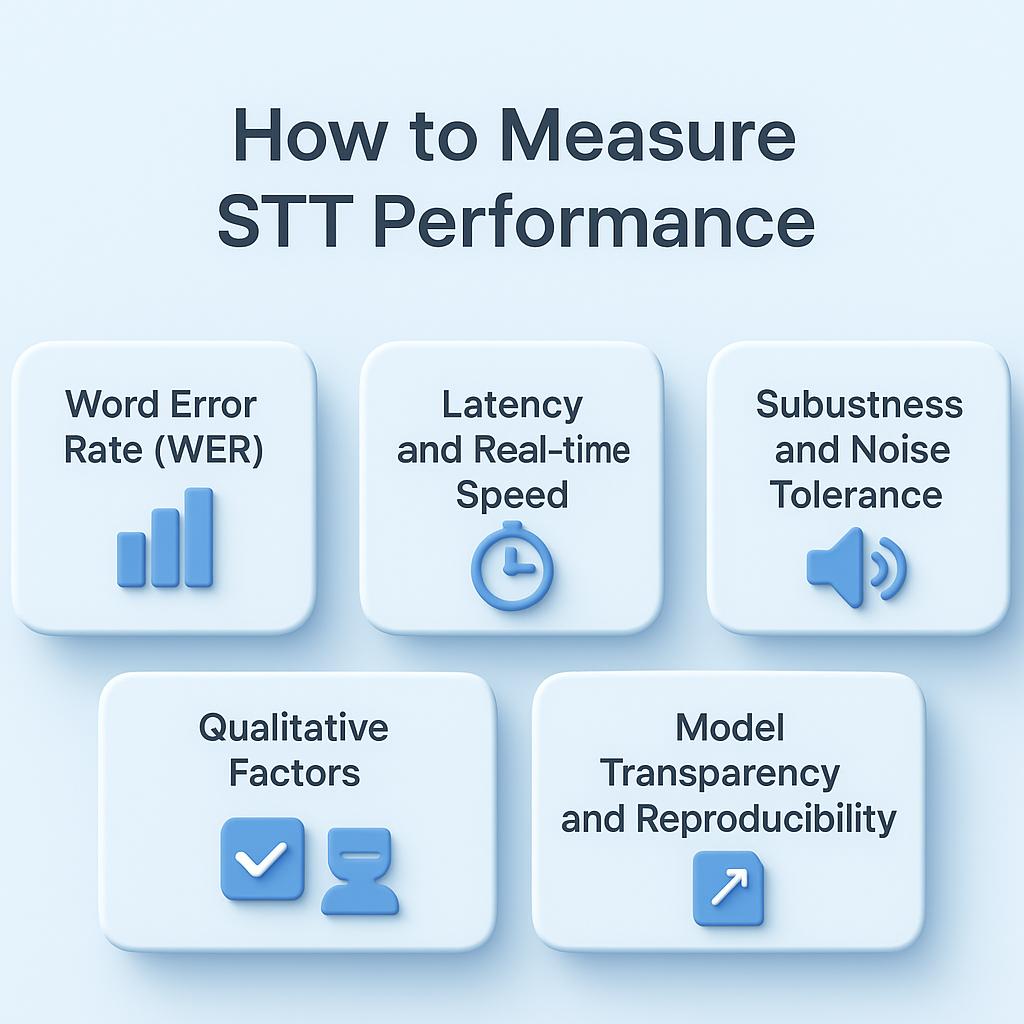

How to Measure STT Performance

Comparing STT APIs requires more than scanning documentation. You need structured evaluation across several metrics:

- Word Error Rate (WER). This is the most cited metric, but it can be misleading. WER = (substitutions + deletions + insertions) ÷ number of words in the reference transcript. However, WER doesn’t capture formatting or punctuation quality, so a transcript may be readable yet technically “wrong.” For example, “ten milligrams” and “10 mg” are semantically equivalent but count as two substitutions.

- Latency and Real-time Speed. If you need live feedback, sub-second latency matters. Some providers only update results in 2–5 second windows. Deepgram Nova-3 and Graphlogic’s STT engine both advertise sub-300 ms latency with incremental updates, which suits smart assistants and live captioning.

- Robustness and Noise Tolerance. Run benchmarks using your own audio — especially with cross-talk, accents, or imperfect microphones. Real-world audio differs significantly from clean datasets.

- Speaker Attribution and Timestamps. Test how accurately the system labels speakers and aligns text to time. This is essential for meetings, call logs, and legal transcripts.

- Qualitative Factors. Review transcript structure, readability, and error patterns. Some systems produce readable output even when their WER is not the lowest, while others require significant cleanup.

- Model Transparency and Reproducibility. Some vendors, like Deepgram, publish benchmark comparisons. OpenAI has released Whisper’s code and model weights as open source, and both OpenAI and AssemblyAI publish technical details on their models and training data. This transparency can indicate long-term trustworthiness. You can read AssemblyAI’s benchmark methodology in their official benchmark report.
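The WER formula above can be computed with a word-level edit distance (Levenshtein distance over words). This minimal sketch lowercases and strips punctuation before scoring, an ASCII-only normalization that suits English text. It does not normalize numbers or units, so it reproduces the “ten milligrams” vs. “10 mg” problem described above.

```python
import re


def normalize(text: str) -> list[str]:
    """Lowercase and strip punctuation so formatting differences are not counted."""
    return re.findall(r"[a-z0-9']+", text.lower())


def wer(reference: str, hypothesis: str) -> float:
    """Word Error Rate = (S + D + I) / N, computed via word-level edit distance."""
    ref, hyp = normalize(reference), normalize(hypothesis)
    # d[i][j] = minimum edits needed to turn ref[:i] into hyp[:j]
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution (or match)
    # N is the reference length; max() avoids dividing by zero on an empty reference.
    return d[len(ref)][len(hyp)] / max(len(ref), 1)


print(wer("Take ten milligrams daily", "take 10 mg daily"))
print(wer("Hello, world.", "hello world"))
```

Evaluations that care about meaning rather than surface form often add number and unit normalization before scoring, so that formatting choices like “10 mg” are not counted as recognition errors.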

Trends and Predictions for Speech-to-Text in 2025

Speech-to-text technology is evolving fast, and 2025 is a year of major shifts. The field is no longer limited to transcription. It now includes real-time understanding, context analysis, and seamless integration with broader AI systems.

One major trend is tighter integration between STT and large language models. APIs are starting to include summarization, sentiment analysis, and keyword extraction as built-in options. This reduces the need for separate tools and speeds up automation in customer service, healthcare, and media.

Multilingual performance is also improving. More APIs support low-resource languages and regional dialects. Companies now train models on diverse global datasets, which boosts inclusivity and usability for international users.

Edge processing is another important direction. Some vendors now offer offline transcription models for mobile devices and wearables. This improves privacy and reduces latency, especially in healthcare and security-focused industries.

Enterprise-grade customization is gaining momentum. Developers want APIs that adapt to their data — not just general-purpose models. APIs that allow vocabulary uploads, domain fine-tuning, and private model deployment are becoming standard in medical, legal, and technical sectors.

Looking ahead, speech interfaces will continue to merge with multimodal systems. STT will be a layer in larger ecosystems involving avatars, knowledge retrieval, and virtual agents. Companies like Graphlogic are already building platforms that combine transcription with generative dialogue and contextual memory.

The market will likely shift toward platforms rather than standalone APIs. Users want not just raw text, but usable, structured outputs that feed directly into analytics, automation, or communication pipelines. The best tools in 2025 will be those that offer end-to-end value, not just transcription.

FAQ

What is a speech-to-text API?

It’s a tool that turns audio into text using AI. You send audio to the API, and it returns a transcript. It’s used in healthcare, apps, support systems, and more.

How do I choose the right speech-to-text API?

Test it with your real audio. Focus on accuracy, speed, language support, and security. Make sure it fits your needs, whether that’s real-time transcription, batch processing, or support for technical vocabulary.

What features matter most in a speech-to-text API?

Look for real-time response, speaker identification, support for multiple languages, accurate punctuation, time-aligned output, custom vocabulary support, and the ability to filter or redact sensitive content.

What is Word Error Rate (WER)?

WER measures the share of words that were substituted, deleted, or inserted in a transcript compared with a reference transcript of the same audio. The lower the score, the better. It doesn’t reflect formatting or fluency, only word-level accuracy.

Are there open-source speech-to-text options?

Yes. Whisper is easier to use and supports many languages. Kaldi is more flexible but requires substantial technical setup. Open-source tools offer more control, while commercial APIs are faster to integrate.